McTighe, J., & Ferrara, S. (1998). Assessing Learning in the Classroom. Student Assessment Series. NEA Professional Library, Distribution Center, PO Box 2035, Annapolis Junction, MD 20701-2035.

- Assess teaching and learning, not the student and grades

- “The primary purpose of classroom assessment is to inform teaching and improve learning, not to sort and select students or to justify a grade.” (McTighe & Ferrara, 1998, p.1)

- Latin roots

- “the term assessment is derived from the Latin root assidere meaning ‘to sit beside.’” (McTighe & Ferrara, 1998, p.2)

- “Assidere suggests that, in addition to tests and projects, classroom assessments include informal methods of “sitting beside,” observing, and conversing with students as a means of understanding and describing what they know and can do.” (McTighe & Ferrara, 1998, p.2)

- Types of assessment

- Tests

- Rigid format: time limits, paper and pencil, silent

- Limited set of responses: limited access to source material

- Evaluation

- Make judgements regarding quality, value, or worth

- Pre-set criteria

- Summative assessment

- culminating assessment that provides a summary report

- Formative assessment

- Ongoing diagnostic

- Helps teachers adjust instruction

- Improve student performance

- Determine previous knowledge

- Determine ongoing understandings and misconceptions

- Large scale assessment

- Usually standardized tests

- High-stakes

- Educational accountability

- Norm referenced

- Easier interpretation

- Comparison with others

- Averages to determine your position

- Criterion referenced

- Compared to pre-established standards

- Classroom assessments

- Diagnose student

- Inform parents

- Improve practice

- Effective Classroom Assessment

- Inform teaching and improve learning

- Performance-based assessments

- Focus instruction and evaluation

- Students understand criteria for quality

- Students get feedback and revise their work

- Peer- and self-evaluation

- Multiple sources of information

- Single test is like a single photograph

- Frequent sampling

- Use array of methods

- Create a photo album instead of a single photo at the end

- Different times

- Different lenses

- Different compositions

- Valid, reliable, and fair measurements

- Validity: How well it measures what it is intended to measure

- Reliability: If repeated, would you get the same results?

- Fairness: give students equal chances to show what they know and can do without biases or preconceptions

- Ongoing

- Content Standards

- Declarative knowledge

- what do students understand (facts, concepts, principles, generalizations)

- Procedural knowledge

- what do we want students to be able to do (skills, processes, strategies)

- Attitudes, values, or habits of mind

- how we would like students to be disposed to act (appreciate the arts, treat people with respect, avoid impulse behavior)

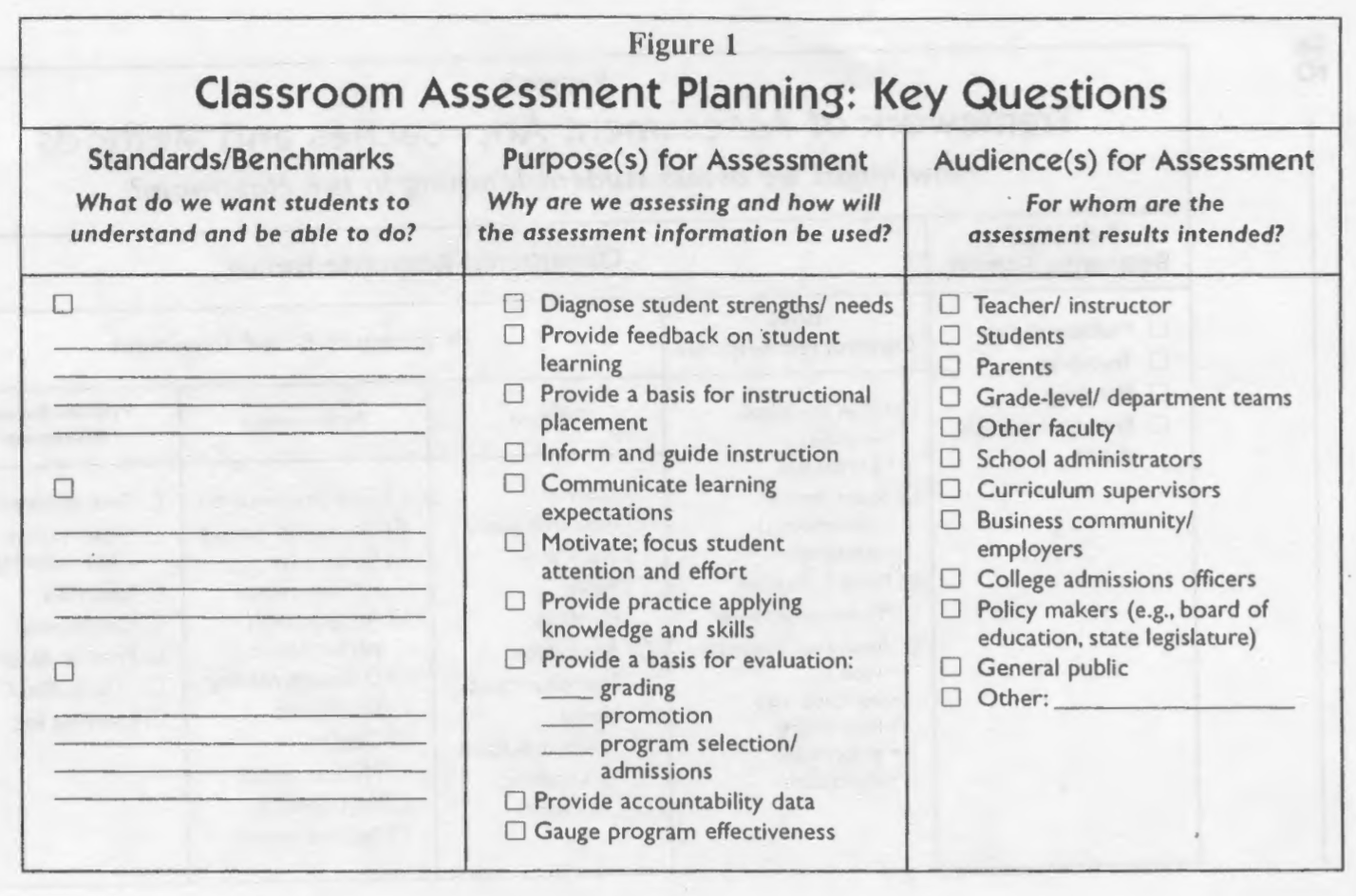

- Purpose & Audience

- Why are we assessing?

- How will the assessment results be used?

- Who are the results intended for?

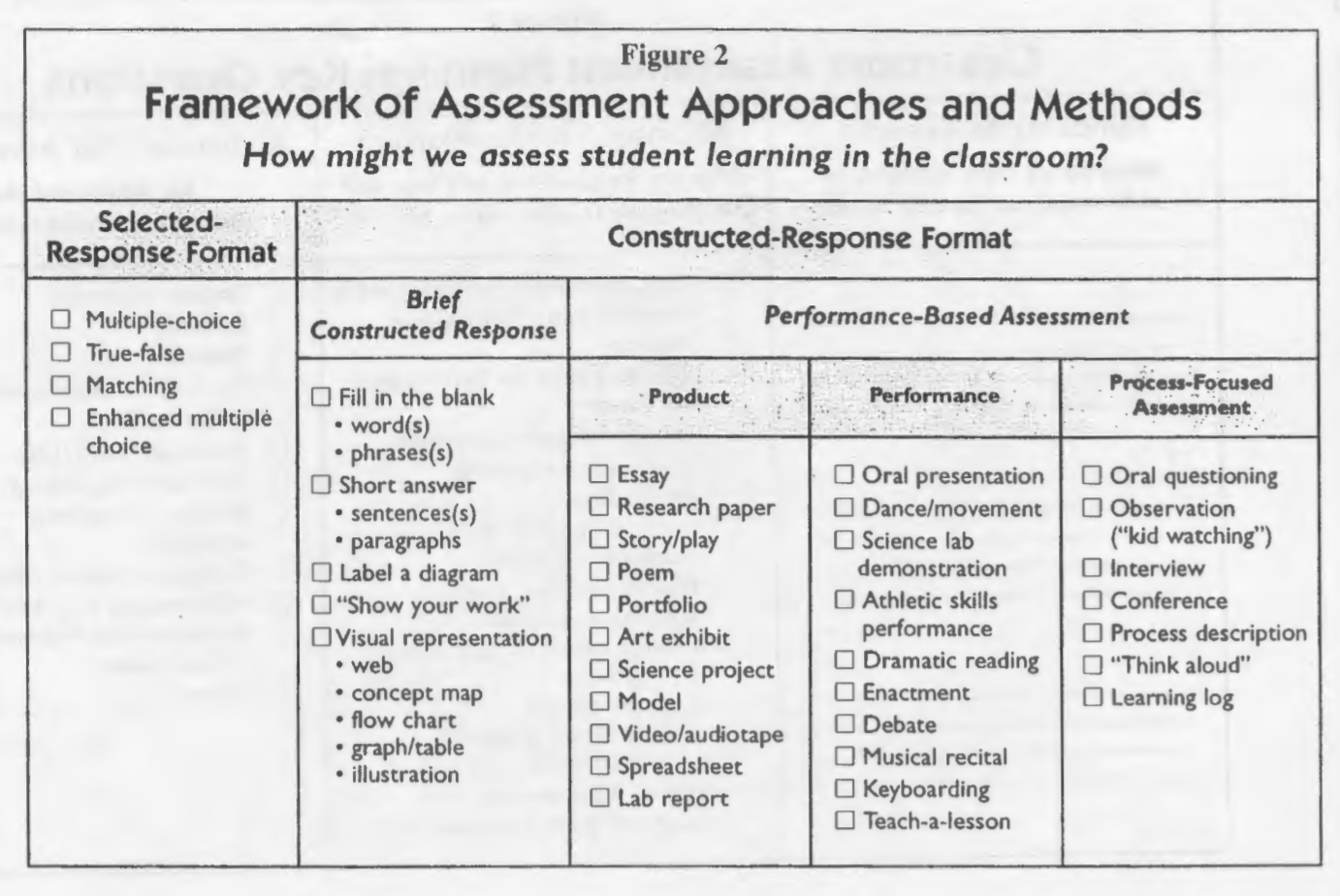

- Assessment Approaches and Methods

- Approach – what do you want students to do?

- Select a response

- Construct a response

- Create a product

- Provide an observable performance

- Describe their thinking/learning process

- Selected-Response Format

- Positive

- Wide range of knowledge can be ‘tested’

- Easy to implement

- Easy to evaluate and compare

- Fast

- Negative

- Assess knowledge and skills in isolation and out of context

- Not able to assess critical thinking, creativity, oral communication, and social skills

- Real-world problems rarely have a single correct answer

- Focuses students on acquisition of facts rather than understanding and thoughtful application of knowledge

- Constructed-Response Format

- Brief Constructed Response

- Short written answers

- Visual representations

- Positive

- Students have a better opportunity to show what they know

- Easier to construct and evaluate than other constructed responses

- Negative

- Does not assess attitudes, values, or habits of mind

- Requires judgement-based evaluation – lower reliability and fairness

- Performance-Based Assessment

- Requires students to apply knowledge and skills rather than recalling and recognizing

- Associated terminology:

- Authentic assessment

- Rubrics

- Anchors

- Standards

- Content standards – what students should know

- Performance standards – how well students should perform

- Opportunity-to-learn standards – is the context right

- Positive

- Content-specific knowledge

- Integration of knowledge across subject-areas

- Life-long learning competencies

- Negative

- Do not yield a single correct answer or solution – allows for a wide range of responses (also a positive)

- Types

- Product

- “Authentic” since it resembles work done outside of school

- Portfolio to document, express individuality, reflect, observe progress, peer- and self-evaluation

- Criteria must be identified and communicated with students

- Performance

- Can directly observe the application of knowledge

- Students are more motivated and put in greater effort when presenting to ‘real’ audiences

- Time- and labor-intensive

- Process-focused assessment

- Information on learning strategies and thinking processes

- Gain insights into the underlying cognitive processes

- Examples

- “How are these two things alike and different?”

- “Think out loud”

- Continuous and formative

- Evaluation Methods and Roles

- Scoring Rubric (Rubrica – red earth used to mark something of significance)

- Evaluative criteria

- Fixed scales

- Description of how to discriminate levels of understanding, quality, or proficiency

- Holistic Rubric

- Overall impression of quality and levels of performance

- Used for summative purposes

- Analytic Rubric

- Level of performance along two or more separate traits

- Used in day-to-day evaluations in classroom

- Generic Rubric

- General criteria for evaluating student’s performance

- Applied to a variety of disciplines

- Task-specific Rubric

- Designed to be used in a specific assessment task

- Anchors

- Examples that accompany a scoring rubric

- Rating scales

- Bipolar rating scales – bad & good, relevant & irrelevant

- Checklists

- Good for ensuring that no element is forgotten or left unattended

- Written and oral comments

- Best level of feedback – communicates directly with student

- Must not be only negative feedback

- Communication and Feedback Methods

- How to communicate results?

- Numerical scores & Letter grades

- Widely used but not descriptive

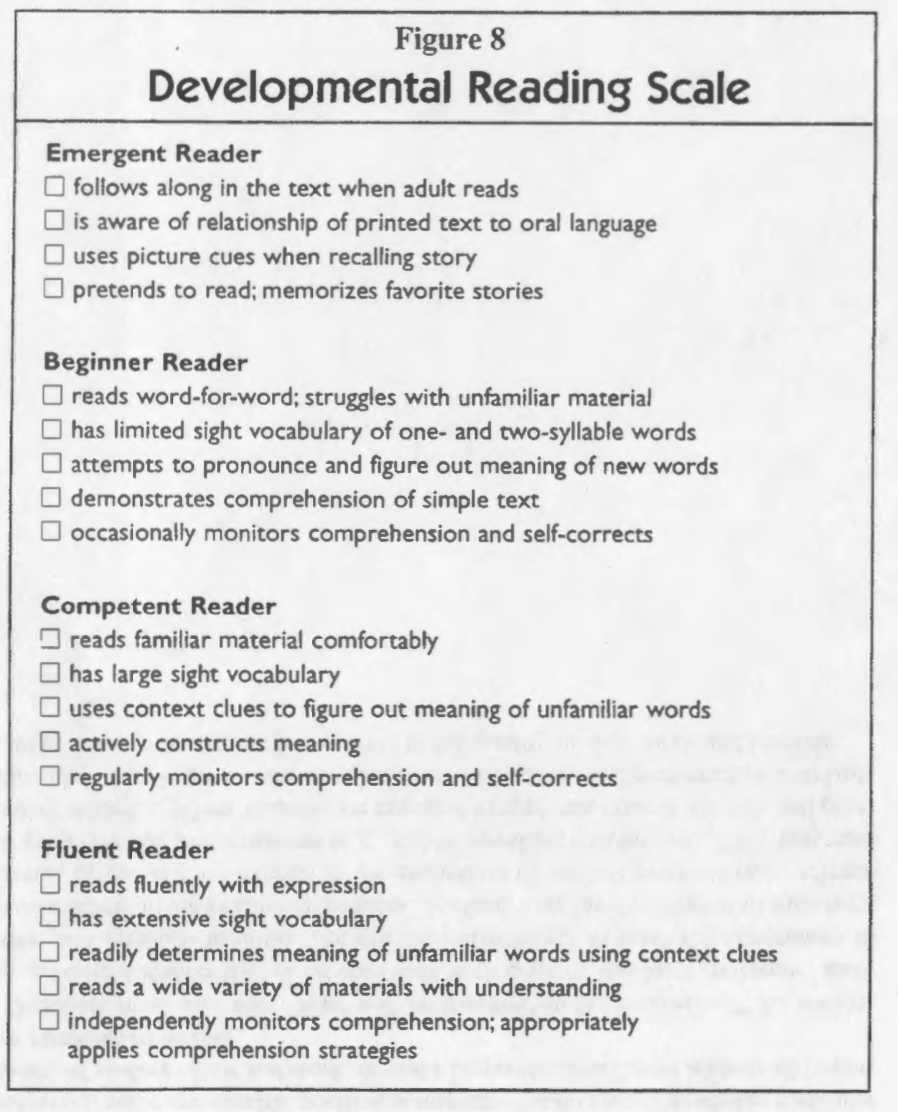

- Developmental and Proficiency Scales

- Contain description of quality and performance

- Checklists

- Careful with poorly defined categories like creativity – open to interpretations

- Written comments, narrative reports, verbal reports, and conferences

- Communicate directly with each student

- Time-consuming

- Assessment not only measures outcomes but also invokes the values, the how, and the what of learning.

- Great glossary at the end of this paper.

Coffey, J. (2003). Involving Students in Assessment. In J. Atkin & J. Coffey (Eds.) Everyday Assessment in the Science Classroom. Arlington, VA: National Science Teachers Association. pp. 75-87.

- Assessment is an opportunity for learning

- “Whether it comes after teaching, while teaching, or by teaching, we often think of assessment as something done to students, not with them.” (Coffey, 2003, p.76)

- Teachers

- check assignments and interpret student responses

- listen closely to students’ questions so that they can gain insight into their students’ understandings

- seek to make explicit the assessment criteria so that all students know how they will be evaluated

- try to use what they learn through assessment to inform teaching, plan future learning activities, and provide relevant feedback

- constantly gauge trends in class engagement, interests, and understanding

- strive to fairly assign grades that accurately reflect what a student knows and is able to do.

- Everyday Assessment

- “Everyday assessment is a dynamic classroom activity that includes the ongoing interactions among teachers and students as well as more scheduled events, such as weekly quizzes and unit tests.” (Coffey, 2003, p.76)

- “One of the many purposes of everyday assessment is to facilitate student learning, not just measure what students have learned.” (Coffey, 2003, p.77)

- Key Features of Assessment

- explicating clear criteria (Butler and Neuman 1995)

- improving regular questioning (Fairbrother, Dillon, & Gill 1995)

- providing quality feedback (Kluger and DeNisi 1996; Bangert-Drowns et al. 1991)

- encouraging student self-assessment (Sadler 1989; Wolf et al. 1991)

- Responsibility for own learning

- “When students play a key role in the assessment process they acquire the tools they need to take responsibility for their own learning.” (Coffey, 2003, p.77)

- Lower-performing students benefited the most

- “Lower-performing students … showed the greatest improvement in performance when compared to the control class.” (Coffey, 2003, p.77)

- Learning From Connections

- “Through the students’ explicit participation in all aspects of assessment activity, they arrived at shared meaning of quality work. Teachers and students used assessment to construct the bigger picture of an area of study, concept, or subject matter area. Student participation in assessment also enabled students to take greater responsibility and direction for their own learning.” (Coffey, 2003, p.78)

- Shared Meanings of Quality Work

- Activities

- students generating their own evaluation sheets

- conversations in which students and teachers shared ideas about what constituted a salient scientific response, or a good presentation, lab investigation, or project

- discussion of an actual piece of student work

- students’ reflections on their own work or a community exemplar

- students’ decision making as they completed a project

- Assessment as a Means to Connect to a Bigger Picture

- “Teacher and students leveraged test review as an opportunity to return to the bigger picture of what they had been studying. The class talked about what was going to be covered on the test or quiz so that all students knew what to expect.” (Coffey, 2003, p.84)

- Assessment as a Vehicle to Facilitate Lifelong Learning

- “The test process also encompassed graded responses after the test, and students would often do test corrections after going over the test. On occasion students would write test questions and grade their own work.” (Coffey, 2003, p.84)

- Creating Meaningful Opportunities for Assessment

- Time

- Use of Traditional Assessment

- Public Displays of Work

- Reflection

- Revision

- Goal Setting

- Results

- “Despite initial resistance, as students learned assessment-related skills, demarcations between roles and responsibilities with respect to assessment blurred. They learned to take on responsibilities and many even appropriated ongoing assessment into their regular habits and repertoires.” (Coffey, 2003, p.86)

Treagust, D., Jacobowitz, R., Gallagher, J., & Parker, J. (March 2003). Embed Assessment in Your Teaching. Science Scope. pp. 36-39.

- Effective strategies for implementing embedded assessment

- Use pretests

- identify students’ personal conceptions

- misconceptions

- problems in understanding the topic

- Ask questions to elicit students’ ideas and reasoning

- “Acknowledge each student’s answers by recording them on the board or by asking other students to comment on their answers.” (Treagust, Jacobowitz, Gallagher, & Parker, 2003, p. 37)

- Conduct experiments and activities

- challenge their own ideas

- write down their findings

- share with their peers.

- Use individual writing tasks

- capture students’ understanding

- teacher can assess their progress

- Use group writing tasks

- students work together to illustrate each other’s respective understanding

- Have students draw diagrams or create models

- Results

- “25 percent of students in the class taught by one of the authors were rated “Proficient” on the MEAP Science Test compared to 8 percent of other eighth grade classes in the school” (Treagust, Jacobowitz, Gallagher, & Parker, 2003, p. 39)

- “Moreover, students become more engaged in learning when their teacher gives attention to students’ ideas and learning and adjusts teaching to nurture their development.” (Treagust, Jacobowitz, Gallagher, & Parker, 2003, p. 39)

Echevarria, J., Vogt, M., & Short, D. (2004). Making Content Comprehensible for English Learners: The SIOP Model. (2nd edition). Boston: Allyn & Bacon. pp. 21-33

- Sheltered Instruction Observation Protocol (SIOP)

- Content Objectives

- Language Objectives

- Content Concepts

- Supplementary Materials

- Adaptation of Content

- Meaningful Activities