Assignment:

Research Description & Reflection (RDR) #2: The Interview Process

Response:

“Tech Adoption”: The Interview Process

Interviewee: Anita Lin, iHub Manager with SVEF

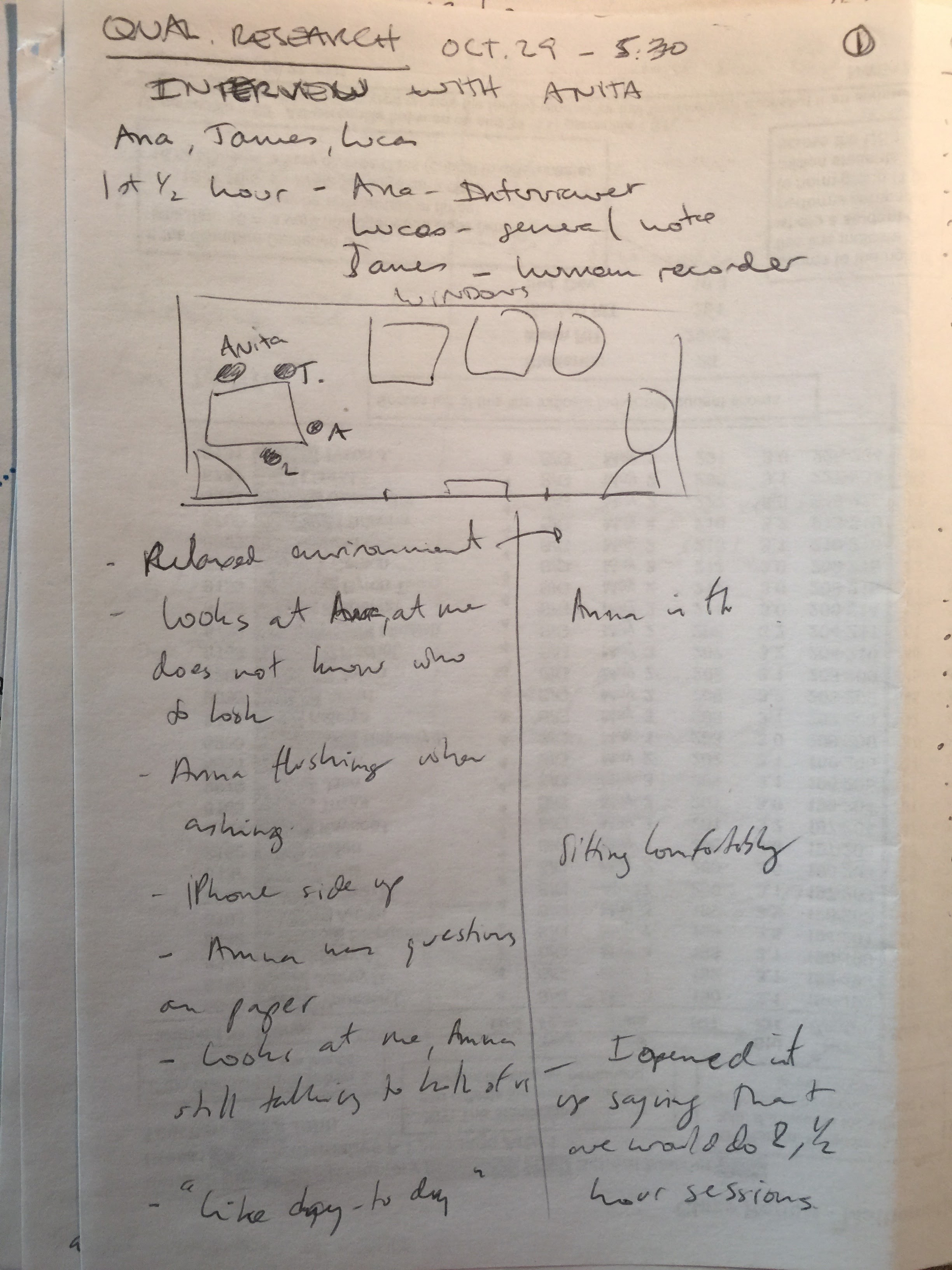

Interview #1: Oct. 29, 2015 5:15pm – 6:15pm Lathrop 101, Stanford

Interview #2: Nov. 5, 2015 6:00pm – 7:00pm Public Library, Mountain View

Researchers: Ana Cuellar, James Leo, Lucas Longo

Strategy

We decided to interview Anita because of her knowledge of the overall Tech Adoption process. We observed only the orientation phase, one of several in the process: pitch night, selection, orientation, pilot, and feedback. The first interview aimed at getting the big picture: what the organization does, what the process looks like, and what her role in it is. The second interview was directed more at understanding whether this facilitation process was effective.

First-round Questions:

Background (introductory icebreakers)

- Thank you for letting us observe the event – how did it go for you?

Grand tour questions

- In your own words, what does SVEF do?

- Tell us about your specific responsibilities as iHub Manager at SVEF.

- Walk us through a typical day as iHub Manager at SVEF.

Program Specifics

- What’s the process that startups go through with iHub prior to pitch night?

- Prior to orientation?

- After orientation?

- To what extent is iHub involved after orientation?

- What other events/resources do you provide with similar goals/priorities?

Second-round Questions

Thank you!

Mini recap (overall organizational goals and specific program goals)

- What was the most exciting part of the few days since we last talked?

- If you had to summarize iHub’s value proposition in one sentence, what would it be?

- Are startups and educators trying to organize this on their own already?

- If not, why? If yes, how do you facilitate more productive collaboration?

- What do you think is the ideal relationship between startups and educators?

- How would you say iHub is facilitating this ideal relationship today?

- In our last interview, you talked about the opportunities for educators to give feedback to engineers. Are there any opportunities for educators and entrepreneurs to give feedback to iHub?

- During our first interview, we started talking about SVEF’s metrics for success. Could you share those with us again?

- Why are these metrics the ones being used?

- If you could invest unlimited resources in measuring iHub’s success, what metrics for success would you prioritize?

- Are any educators left out of this process when they would like to be involved?

- Are there any geographic areas you don’t serve but wish you could?

Transcript

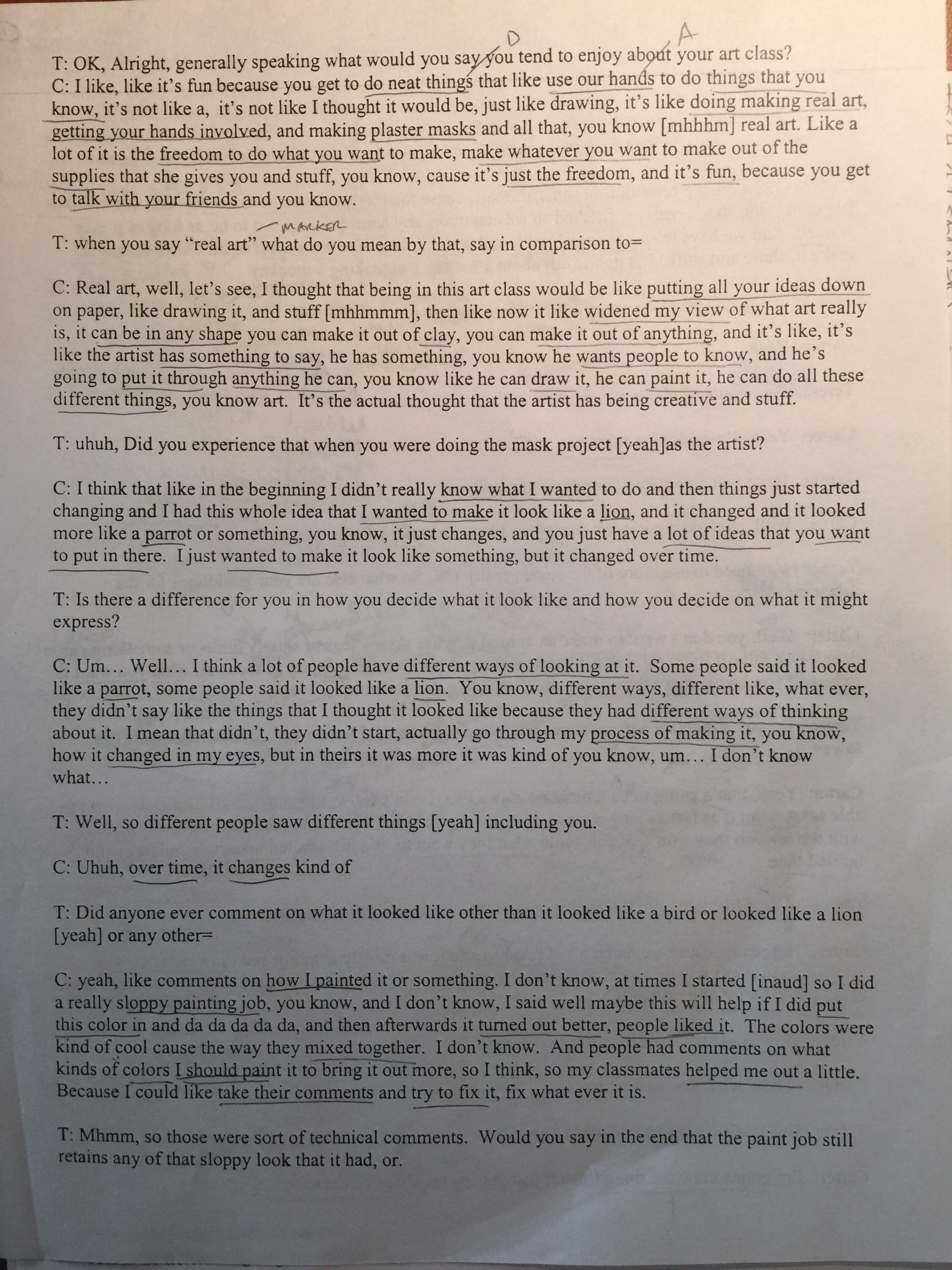

We recorded both interviews with an iPhone and, as a backup, a laptop. We took turns as interviewer, human recorder, and general note taker. What follows is a 15-minute excerpt (26:40 to 41:55) from the first interview, during which I was the interviewer. This is the second half of the interview, after Ana and I switched roles.

Guide

| Notation | Meaning |
| --- | --- |
| [words] | other person speaking |
| (words) | phenomena description |
| … | pause in speaking |

Interviewer: You’re good?

Participant: Umhum

Interviewer: Alright so… hum… you guys good… hum… so… I think, hum… we’re gonna dive into a little bit more about the model you mentioned

Participant: Ok

Interviewer: So if you could tell me in your own words what’s the process that the startups go through with iHub prior to Pitch night?

Participant: So we’re recruiting startups that are early stage so, what I would say we define that between Seed and Series A, hum… but I think it’s probably more broadly interpreted than that and so… We kind of reach out to contacts we have in the Bay Area and maybe a bit nationally and ask them to help us pass on the message that we are kind of looking for Ed Tech companies that are, that could be used in some classrooms specifically.

Interviewer: Ok

Participant: From there companies apply online through a, like, a Google Forms. It’s pretty simple. It’s a very short application process, but I do think we’ll probably add to that next year. hum. And then following a certain time period I convene the invites of different people to be part of a short list committee. And so our short list committee consists of venture capitalists, it consists of accelerator partners and then also people from the education community so that typically is maybe a like an EdTech coach of the school or an IT Director at a school. Hum. Potentially some educators as well. So we kind of bring together this, this committee that, from all of our applications we f it down to 12. Then we ask those 12 to pitch other pitch game and we kind of ask them “Hey, focus on things you used in STEM classrooms” and we, we invite judges that are business judges. So typically CEOs of big companies in the area and then also education leaders. So we had one. There’s also, hum… we’ve also had people who (whispers) trying to think of who else… (normal tone) we’ve had educators, we’ve also had IT Directors as well ‘cause we kind of think, you know, they’re different slices of the education world so we have both of those be there and then they pitch and then the judges ultimately select the pool of companies that we work with for the round.

Interviewer: So you mentioned there’s 12 applicants… 12 selected [uhum] and then how many go to hum, the actual orientation?

Participant: We pick between 6 to 8 companies [6 to 8] This last round we picked 6 hum, I think we have 11 pitches so that’s probably what we have.

Interviewer: Uhum… Is there any, hum… reason for this number?

Participant: For us it’s capacity of teachers [capacity of teachers] so we support, in our last round we supported 25 teachers. And this round we have ‘bout 13 teams of teachers. And so we kind of didn’t wanna companies to support more than 2 or 3 although I think… we… we… we didn’t wanna it to be super challenging for companies to support and also since they are early stage products, we found that some companies as they’re taking off, like, they get really busy and they’re like, completely immersive so… I think it’s to balance both the support aspect but as well as kind of the teachers that we can support also.

Interviewer: Uhum… so let’s go a little bit back, uh, what happens between the pitch night and orientation in terms of your interactions?

Participant: So we send out to our teachers and they’ve kind of, I would say vaguely, have defined the problem of need, and we’d like to kind of like focus them on the future. Make ‘em define it a lot more clearly. Hum… but have… we send out… I send out a form that kind of says, you know, “You’ve seen these companies at the pitch round. Here’s some more information about them if you’d like”. And I ask them to preference these different companies. So like, 1, 2, 3, 4 I mean we have them preference them whether or not, they’re going to work with all of them. And so, we… then they preference them and I kind of match them typically if I can I just give them their first choice of company that they’d like to work with cause I think that (mumbles), builds a lot of investment in our process, hum… and then by orientation they know who they are working with and then we kind of tell them that all of… We’ve already told them all the program requirements before but we kind of go over them at orientation and then go over… let them meet their companies for the first time.

Interviewer: Great. And how about after orientation? What happens?

Participant: So after the orientation we kind of let them go and set up their products for about a week or two depending on the time crunch we have from the end of the year and then… for the next couple of weeks they use the products in the classroom. There might be some observations but I would say these observations are mostly from a project management perspective more than like, an evaluative one. And then they submit feedback. And so we have some templates that we give them that we ask them to submit feedback from. There’s probably have a guiding question for each one and each week we’ll update that guiding question. Also we use a platform called Learn Trials which kind of gets qualitative feedback generally from these teachers about the product and includes comments but also has a rubric that they kind of use. And we’ve asked for pre and post assessments in the past that our teacher created ahm, but this probably hasn’t been… we have not been doing that. I think we need to find a better way to incorporate, so…

Interviewer: So, so… tell me a little bit more about this tool for the Qualitative Assessment. You said the name was?

Participant: Learn Trials – and so they have a rubric that assesses an ed tech company across different strands whether that’s usage, whether that’s how easy was it for it to set up. And they kind of just rate them almost like grading it you know, like give it an A, give it a B. So like kind of like over time. And we ask them to submit it in different, like different… on a routine, so every 2 weeks or something. Where you’re able to kind of see how the product performs over time.

Interviewer: And am I correct to assume that after orientation the process goes towards, until the end of the semester?

Participant: Yes – so it’s only until the end of this semester. So typically December, I want to say like 18th

Interviewer: And then what happens?

Participant: And then at the end of this orientation we, SVEF, maybe with the help of some of our partners like LearnTrials will aggregate some of this data and will share that out with the community. Additionally for this round something we’d like to do is maybe then from our 6 companies that we work with, work with a few of them and help them… help support implementation in the school versus just a couple classrooms at a school. So we’re still figuring this spring what that exactly looks like in terms of the implementation of the, these products but that’s something that we’d like to do.

Interviewer: And when you say community you mean both teachers, schools and the EdTech companies? You share it with everyone?

Participant: Yeah

Interviewer: Hum… so what other events or resources you provide that have like similar goals or priorities? Or is this the only…

Participant: Like within our organization? [yes] Well, in terms of teachers support, like, our Elevate Program I know… I talked about how it helps students but really a big point, I think a big selling point for districts is that it helps teachers, we give a lot of teacher professional development during that time. And so I think our program is also to help teachers who are early adopters of technology, help them kind of meet other teachers at different school for early adopters, and build a cohort that understands that and kind of can refer to each other. Humm… so we also do some, some I would say professional development is not as extensive as all of it is but we kind of want to help teachers understand how to use it, EdTech in their classroom. Potentially, referencing… Our goal is to reference the SAMR model. So..

Interviewer: Uhum… And is this whole process the first cycle you guys are going through, or you have been doing this for a while?

Participant: So we started our first round in the Spring of 2014 – so this is technically round 4 but we’ve iter… like… it changes… little pieces of it change each round. So in the past when we’ve done it, when I run it, it was just I would recruit individual teachers from schools and so then I would form them onto a team so maybe a school, a teacher from school A, a teacher from school B, and a teacher from school C. And in this round I re…, we did recruitment where I recruited teacher teams. So now it’s like 3 teachers from school A, 3 teachers from school B, and then they are all using the same product at their school site so I think that helps with the piece of collaboration that was harder to come by earlier.

Interviewer: And how was it harder?

Participant: Yeah, so I think for our teachers we would like them to meet up kind of weekly. And when you’re not at the same school it’s a lot harder to meet on a weekly because maybe one night one school has their staff meeting and then the other night the other school has their staff meeting and then, you know, I think it was a lot of commitment to ask and I think a lot of teams found it really challenging and maybe would not always be there because of that. Hum… So… that was a big shift from that. But I think it really builds a community within their school. And I think, what we have heard from teachers and from districts, is that a lot of times for a school to adopt or you know, use a product across their school, it’s because a group of teachers have started off saying “I’ve been using this product. I really like this product. Hey, like friend over there! Please use this product with me,” and they are like, “Oh! Yeah we like it” and kind of builds momentum that way

Interviewer: Uhum… So yes, so I guess that talks to the implementation phase of the, of the software that they were trialling. Hum… could you tell of us of a, a specific hum, aaaaa, ww… what do you call this phase after orientation? the pilot? [the pilot] phase. The Pilot Phase. So. Yeah. Could you tell us one story that things went really well or things went really badly?

Participant: Sure! So, there was a product that was used in the last round where I felt like, it was really… we saw a lot of interesting things happen, hum… but they’re all like lot of qualitative metrics. So it’s called Brain Quake, and actually the CEO and cofounder, he’s the… he actually was an LDT graduate, hum… but… it’s basically this game on an iPad or… whatever, where you can play… you have little keys and you have to line the keys to get this little creature out of a jail essentially, but, what was interesting is when you turn the gears it also kind of teaches an eight number sense, so it’s like, this interesting puzzle that kids kind of enjoy solving. And so he was using this in some classrooms in the Bay Area. Also one in Gilroy and this teacher was a special ed teacher. And so she was kind of showing them this and so… What I think was really, really successful that I found was that for one of her students, they had trouble with like motor skills. And so one of the skills that they had trouble with was kind of like turning the gear on the iPad. hum. But the student actually learned to turn the gear like to the left. Cause you can turn it both ways and they were able to like, learn that skill moving like, doing a different motor skill than they had before because they really wanted to play the game. And so I thought that was like a really wonderful example of how technology can be really inspirational or like really helpful versus I think their other, you know. Well, lots of examples in literature where technology just like, you know, it’s just a replacement for something.
Hum… and so… I think also a lot of the other teachers who worked with that product really, their students really enjoyed it, ‘cause it is really engaging and they were making like, connections between the fact that, you know, I’m doing math and they could see h… they could understand that, you know, if I redid this into an algebraic expression… like they were coming up with that terminology and then they were like “we could just rewrite this into an algebraic expression”. And I think that was a like a really wonderful example of a product that went really well.

Interviewer: Did that product end up being hum, adopted or implemented in the school [Yeah, so…] effectively?

Participant: That just happened this spring and I don’t think it has been yet. Hum… they’re still also like an early, you know like an early stage company so they’re, I think they’re still growing and figuring out exactly what it looks like. But I think that we are trying to support companies in that way. And we’re still figuring that out. So…

Interviewer: And was there ever hum… a big problem in a pilot?

Participant: Yeah, let me think… typically I would say the problems that we run into in a pilot is where, companies are like working with their teachers and it’s going well but then sometimes companies get really… I guess it depends, now that I think about it. In the fall of last year there was one where the company like, the developers got really busy cause they’re just, start-up just took off. And so they became pretty unresponsive with our teachers. The teachers like, emailing me, and I’m like trying to get in contact with it, and so typically when there’s not communication between these parties, it would… the pilot would not be as successful as it could because they weren’t communicating. Things weren’t changing. Hum… In the spring, one of the things… The biggest challenge we found was actually testing. So testing was happening for the first time for Common Core and so what would happen was these teachers that email me, being like “I can’t get a hold of the Chromebook carts”. Like, they just couldn’t get access to the technology they needed to run their pilot. And so… one teacher… her district told her this before she like committed to the pilot. And she just like pulled out. Like she’s like “I just can’t do this” like “I don’t have access to these, to like, the technology that I need”. Hum… But some other teachers, they were like, one of them told me she had to like had to go to the principal and like beg to use the Chromebooks on a like… on a day that they weren’t being used, but, I think because it was the first year of testing, a lot of schools and a lot of districts were very, hum, protective of their technology cause they just wanted to make sure it went smoothly. And that totally makes sense.
And so… for… because the… the testing when it… kind of… varied like when this would happen for the different schools but, some schools were more extreme in like saying, you know, we’re just gonna use it for all this quarter… like we… like, you know, we’re gonna lock it up and then others were like “Well… we’re not testing now so feel free to use it” So… That was a big challenge in our pilot this spring.

Summary

Overview of SVEF

- Find and corral public resources so all students can succeed in education

- They act in 3 main areas:

  - Programs

    - Elevate

      - Flagship program

      - Provides summer support to succeed in Algebra

      - 1200 students last year

      - Run by director and three other people

    - Advocacy

      - Get students to complete A-G requirements

      - Gives SVEF contact to school districts

      - Run by VP with support of consultants (4-5 people)

    - Innovation (Anita’s group)

      - Support growth of education innovations

      - Grant from Gates Foundation to measure EdTech tools in classroom so that they work best for students, teachers, districts

      - iHub’s focus is now on how we measure what is effective in EdTech

Anita’s role

- How do we measure EdTech products in classrooms through connecting EdTech companies with teachers?

- Day-to-day:

- Administration

- Relationship management

- Recruitment

- Event planning

- Presentations

SVEF’s Role

- Align stakeholder groups

- Decrease tension

- Build capacity of teachers to advocate for products

- Support district understanding of EdTech

- Find what works well for teachers

Metrics

- Advocacy: how many districts adopt A-G

- Elevate: how many students served

- Innovation: how many schools are using iHub model

- Process of recruiting, onboarding, matching, piloting, reporting

- Feedback from board of directors

iHub’s Role

- Recruit early stage EdTech startups with in-classroom products

- Create a short list through a hand-picked committee

- Organize pitch night – startups present projects to school teachers

- Collect selections teachers made

- Organize orientation session between selected startups and schools to start the pilot

- Monitor pilot for approximately a quarter

- Review startup’s performance using Learn Trials software for rubrics

iHub’s New Goals

- Help startups with school level implementation – help them grow

- Provide a framework of what works to schools/districts

- Push towards providing research

- Specifically, the exploratory research/design/implementation side

- Early stage of adoption

- Using Design-Based Implementation Research (DBIR) where everyone plays a role in designing and giving feedback

- Come in where the market is not very well defined and provide guidance

iHub’s History

- 2014 first round

- Had individual teachers from each school instead of groups of teachers from each school

Success Story

- App Brain Quake – helped special ed learner

Fail Stories

- Startup took off and became unresponsive

- Problems with access to technology in other schools

iHub’s Feedback Loop

- Meetings

- Surveys after events

- One-on-one conversations with teachers

Concerns

- Possibility of early-stage tech trials having negative repercussions on learners

- Among teachers that use tech in classroom, instruction is different; they can make learning happen with different “pieces” (tools?)

- Teachers already doing this in classroom, so is it better to have them do it with no oversight/facilitation?

iHub in 10 years

- Ideal-case scenario would be to go out of business: they want to identify the problem and solve it

- Not sure if possible with the way that school districts and counties work due to bureaucracy and multiple stakeholders

Similar organizations

- Counties (e.g. San Mateo)

- Tend to support infrastructure/hardware, coordinate on purchasing

- Others do research with schools using the products of mature startups to inform schools whether they are investing in products that are advancing outcomes

Reflection

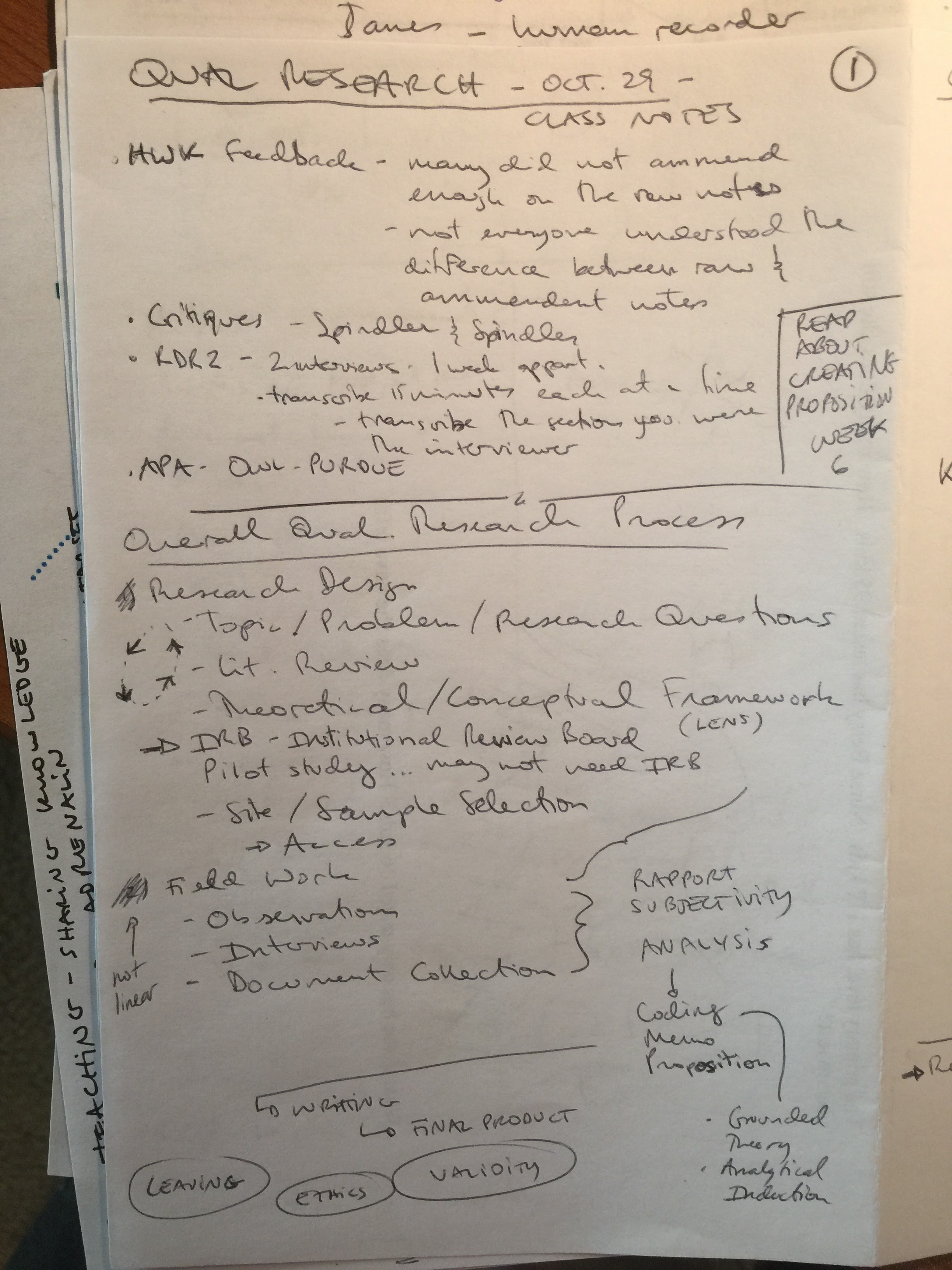

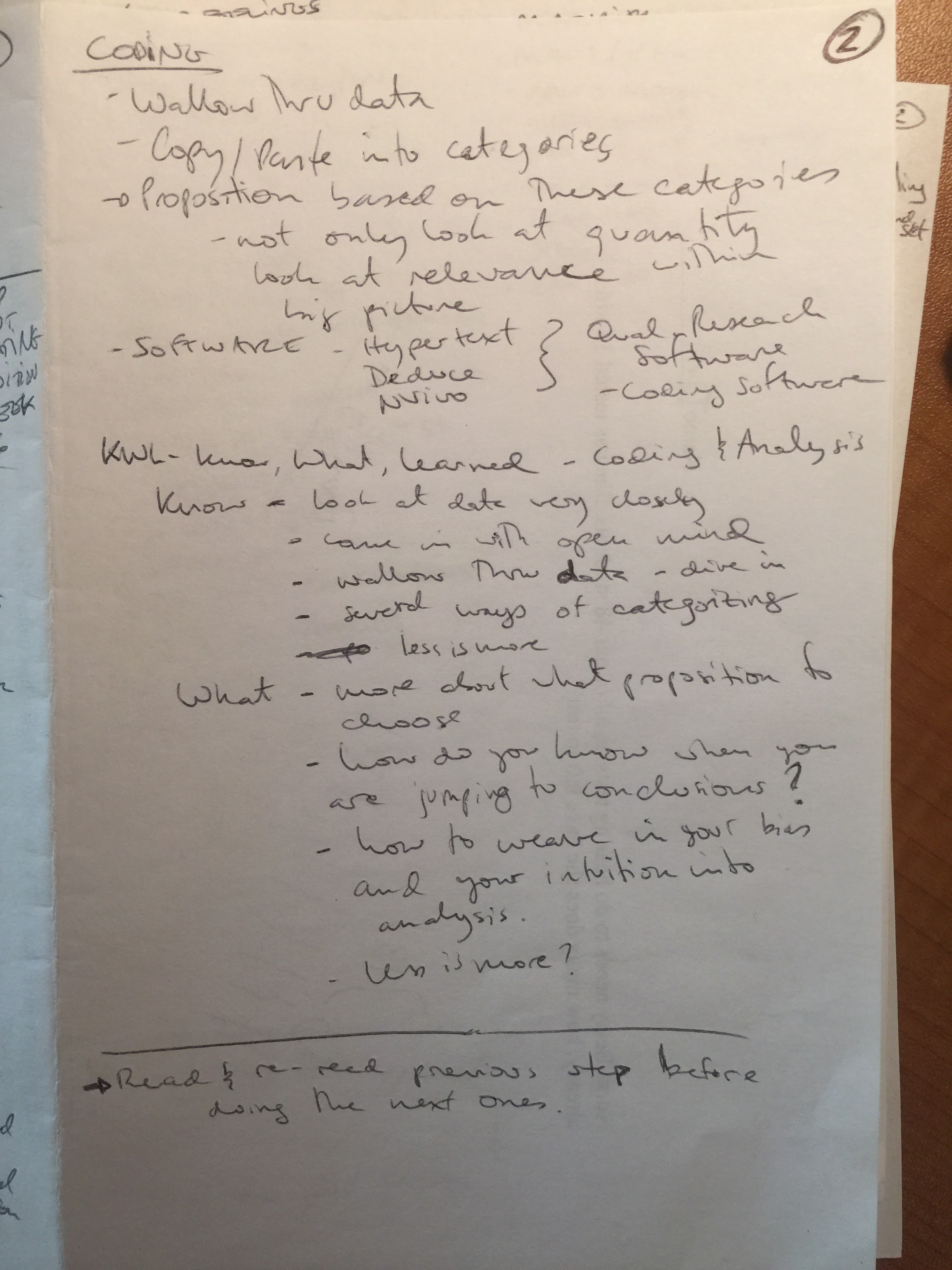

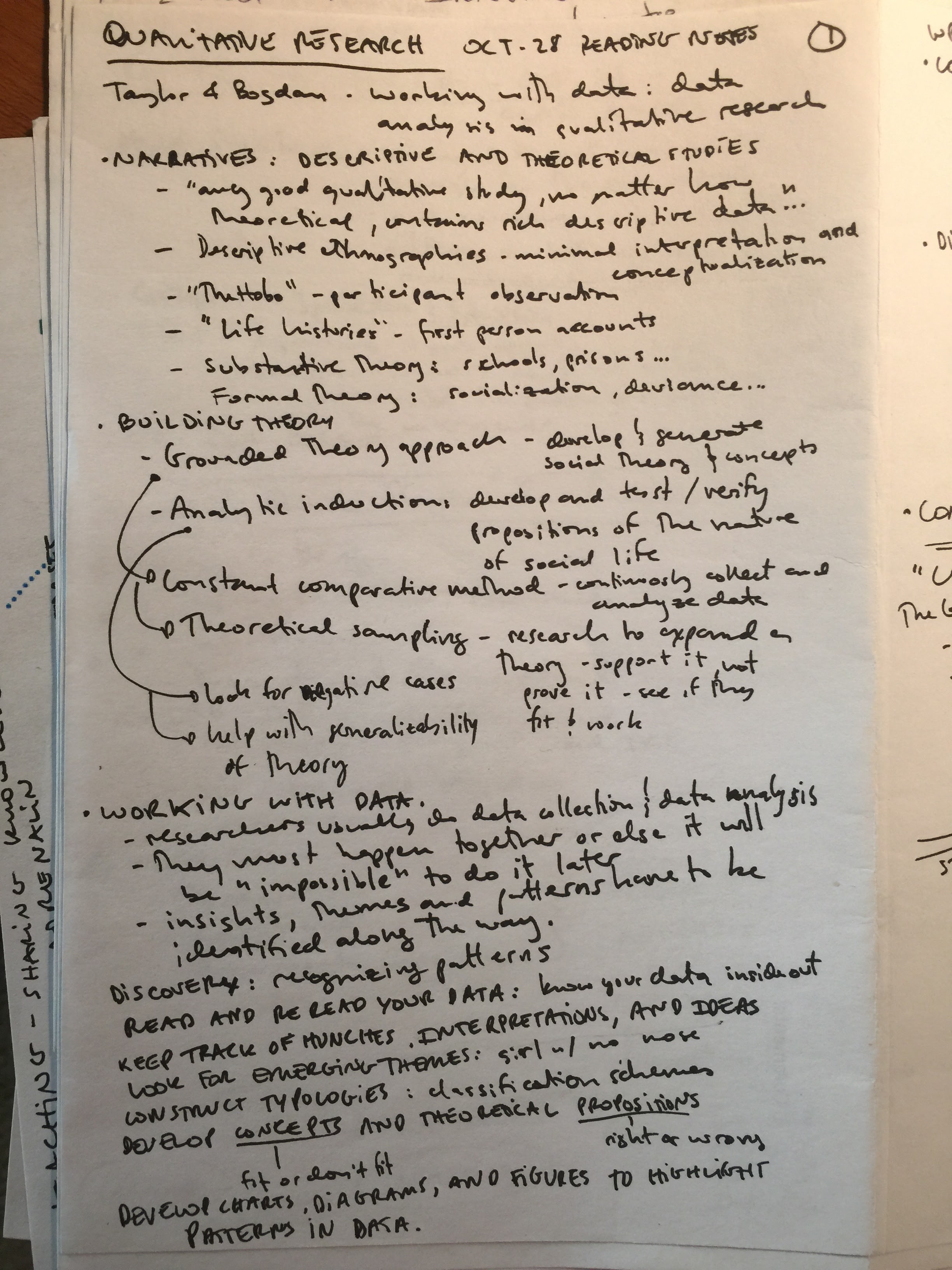

My general experience as a learner in the interview process was a positive one. Going through the motions of creating the questions, interviewing, transcribing, and coding gave me a feel for what the work of a qualitative researcher might look like. I was impressed by the amount of data collected from such a limited set of interviews, even though I felt that no theory emerged ‘in situ’, understanding that we did not follow the criterion of long and repetitive observations (Spindler, G., and Spindler, L., 1987).

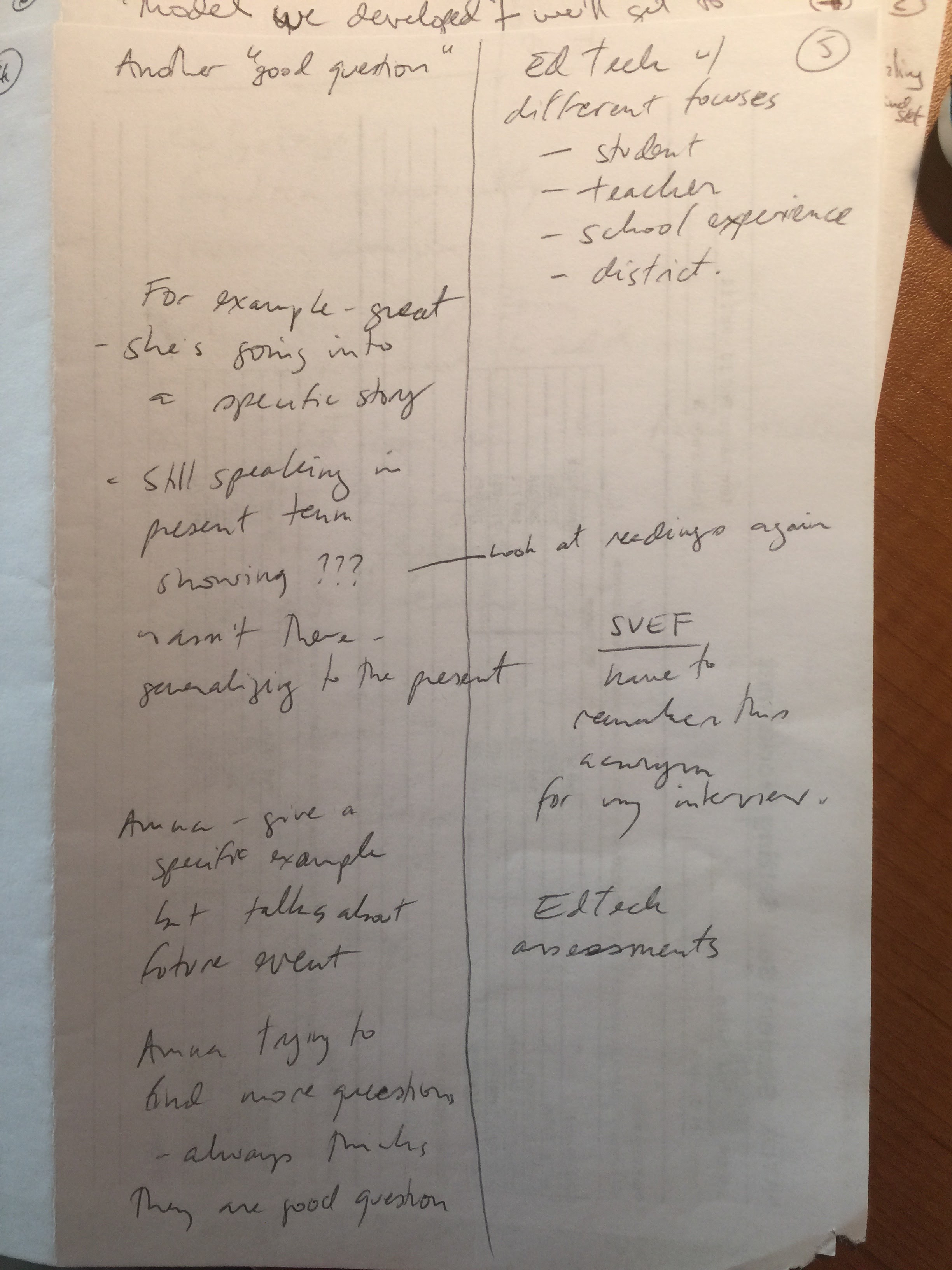

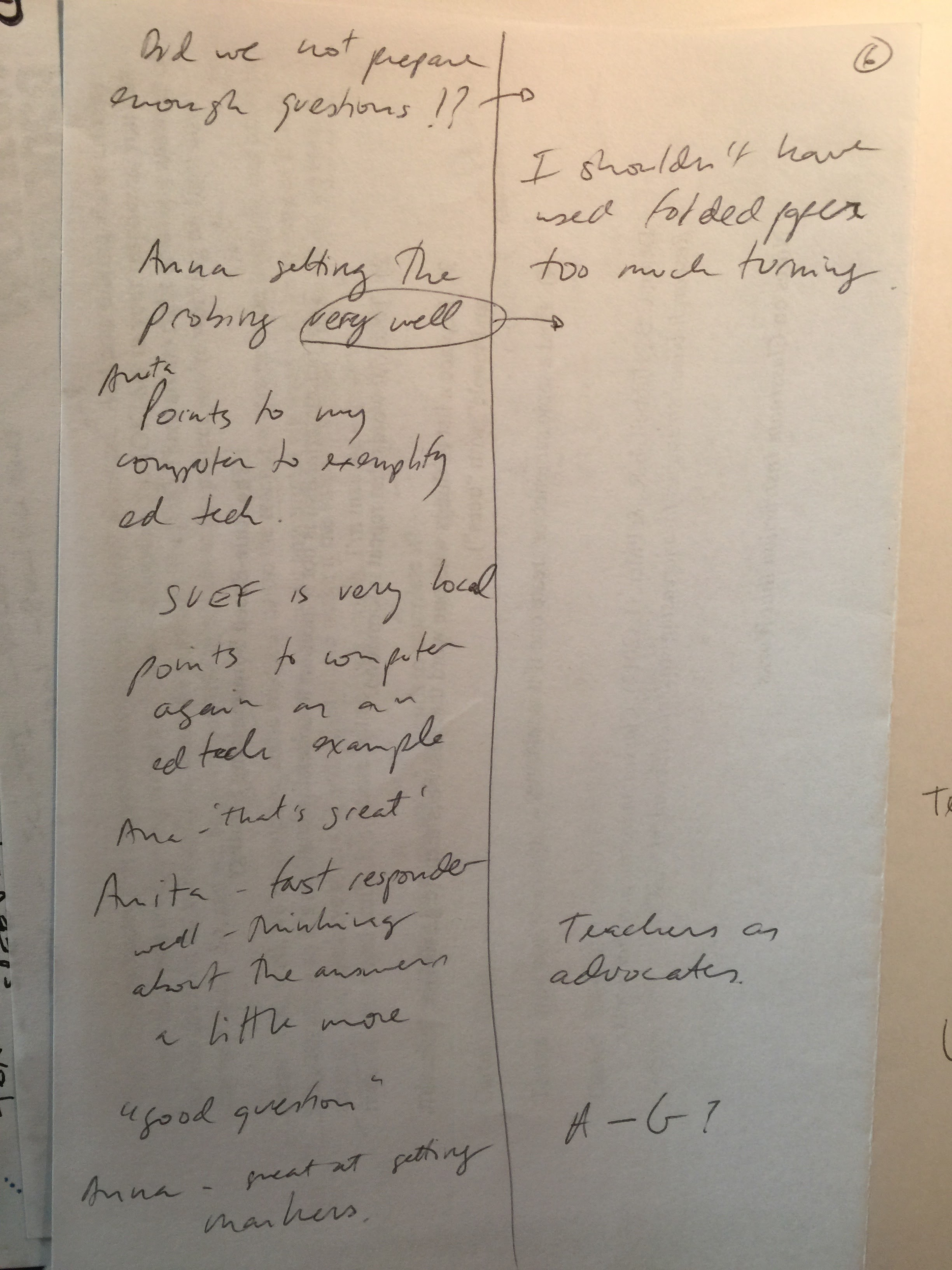

I feel the biggest takeaway from the entire process was that carefully designed questions are essential for an interesting interview. Our questions were carefully crafted and led us to capture the information we originally hoped for. I also felt we did not come into the first interview with enough questions – a decision we made during the preparation phase since we knew little about the context we were exploring. We wanted to leave room for probing, which was effective, but again I felt the probes led to little extra or rich information about the research question. The second interview was better in terms of the number of questions we came in with, yet I still felt we were unable to get down to the underlying story – if there was one at all.

Reflecting back upon our research question, I see that we were judging whether the organization was effective or not. We were investigating whether the iHub program was actually helping schools and/or startups. It felt much more like a quantitative problem than a qualitative one: a problem where you would measure effectiveness in terms of the number of pilots facilitated and how many companies passed the pilot and actually deployed their product in that school, amongst other indicators.

As a ‘researcher’ I found the subject matter we ended up with less than intriguing. Our initial desire was to observe an EdTech product being introduced into a classroom by a teacher. I wanted to see the product in action, in the classroom, being used by the teachers and students. As we approached the deadline with failed attempts to schedule such a case, we ended up looking at a different phase of the process we wished to observe: a process an organization created to help startup companies run pilots within classrooms. It is a well-planned process that, for me, generated little interest. Adapting to this reality, we were unable to find a deep question about the big picture.

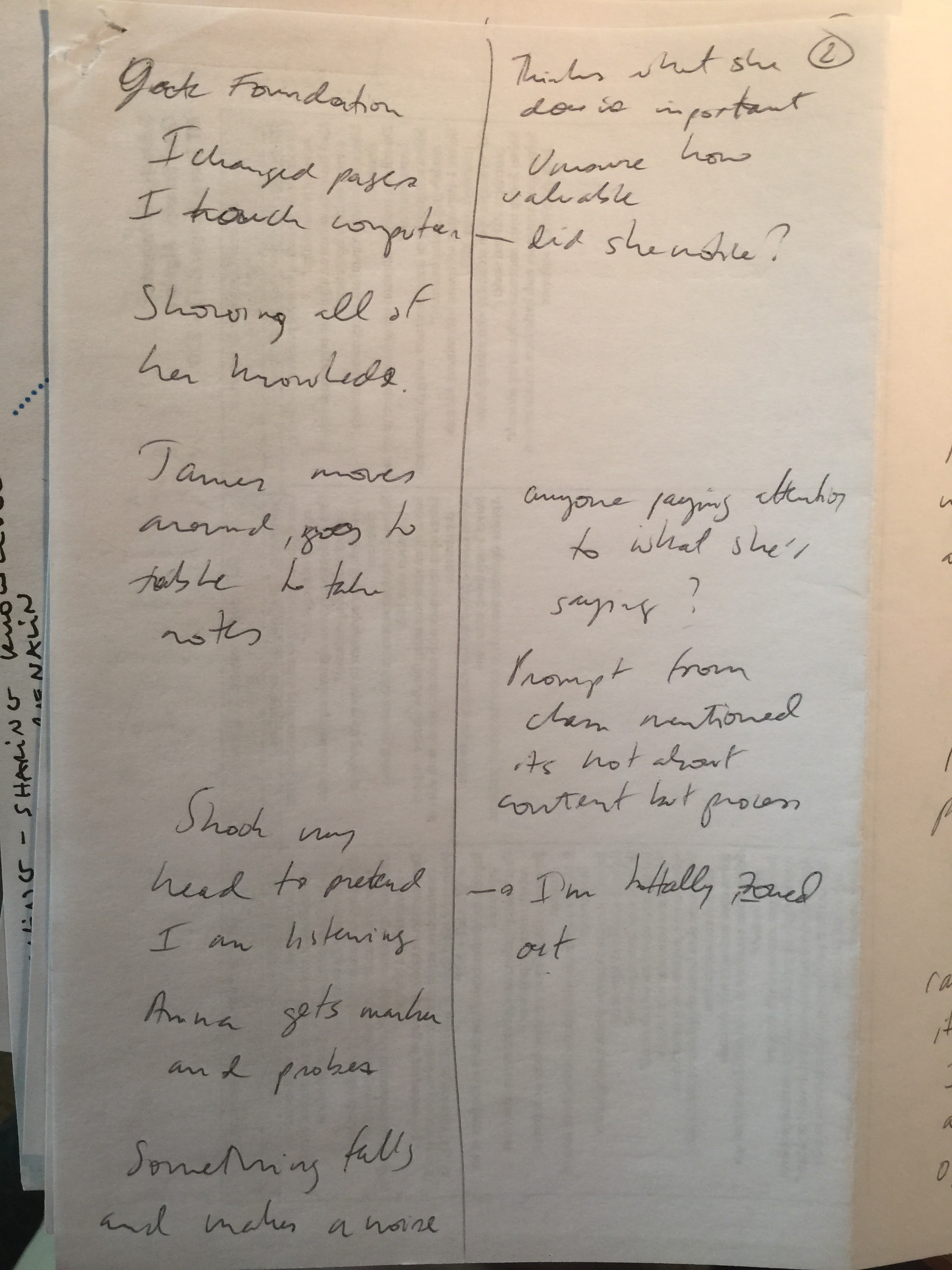

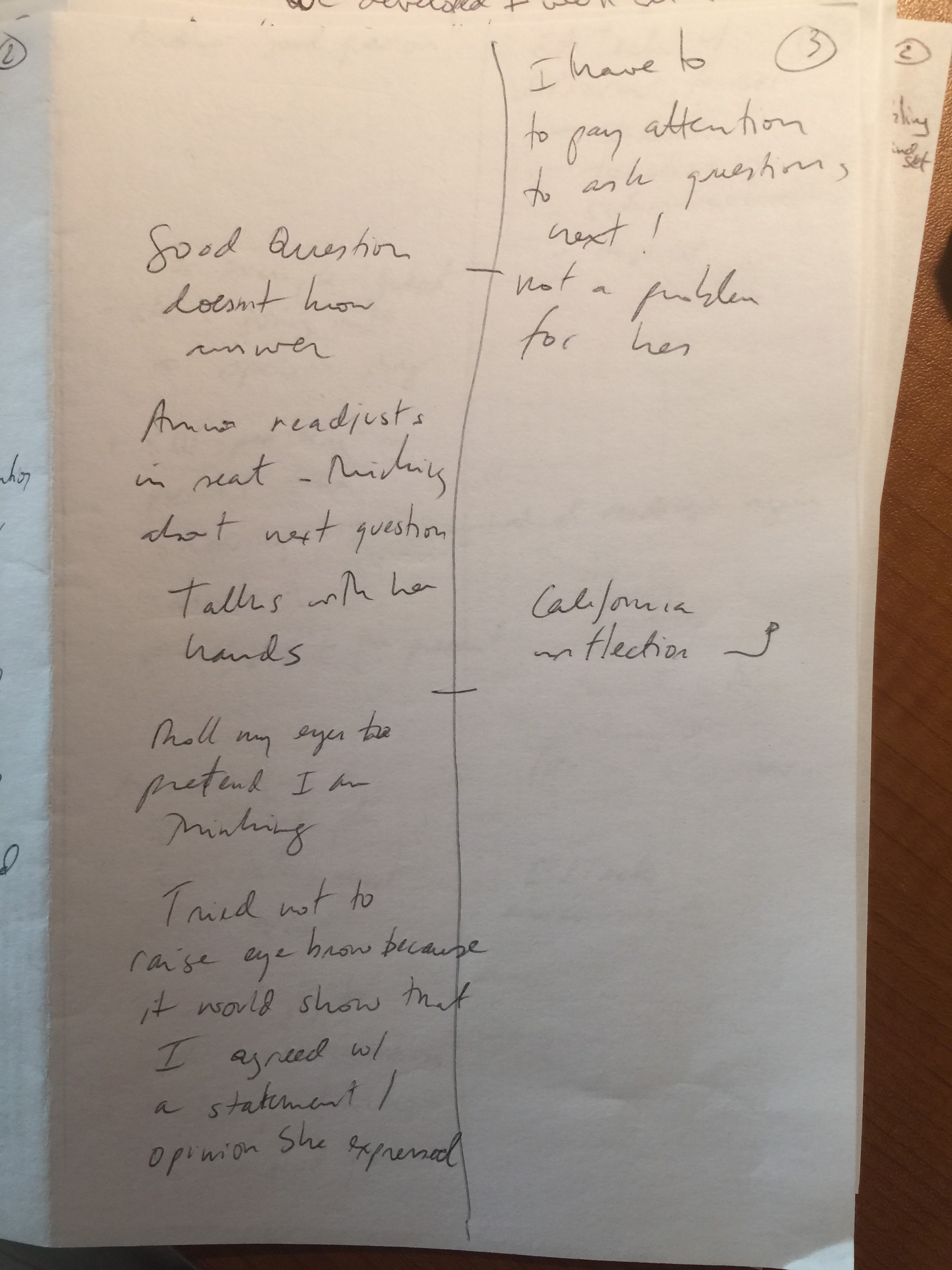

Going back to the entire interview process, while I was the observer, I was detached from what was being said. Even though I had to pay attention in order to come up with interesting questions for my upcoming role as the interviewer, I was instead observing her movements, expressions, gesticulations, and general distracting events that occurred in the space. This lack of attention showed up during the session I led, when I asked about other activities her organization does. Her answer was short and included the terrible “as I mentioned before” line. I caught on to that and quickly diverted the line of questioning.

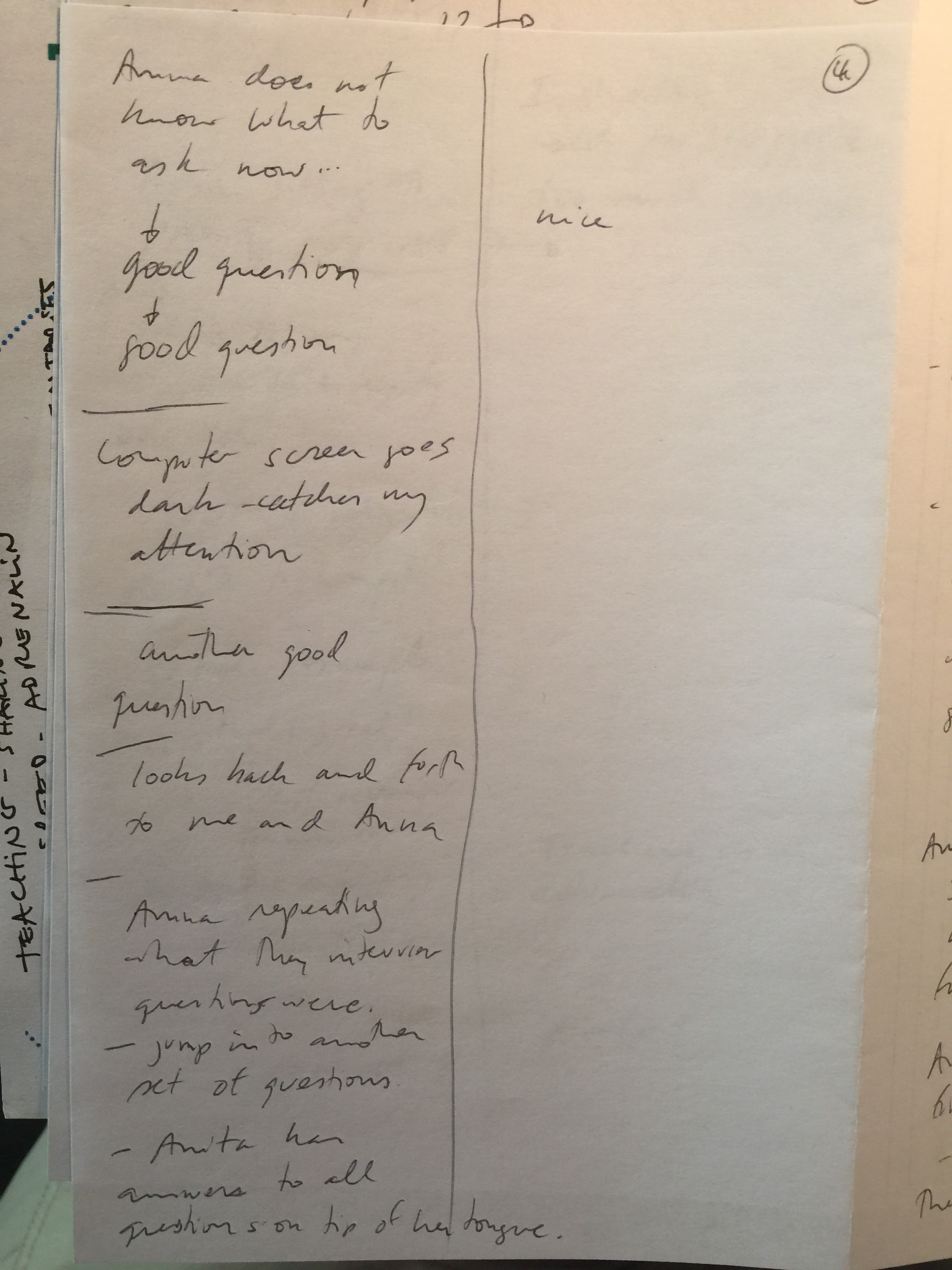

Ana did an excellent job interviewing. She was able to make words fly (Glesne, C., & Peshkin, A., 1992) from Anita, who seemed to have read our questions in advance. Anita would answer the following two or three questions without being prompted. She also said “good question” to about 90% of Ana’s questions. It was the start of the interview and maybe she was trying to be polite. None of my questions were followed by that phrase (joking).

As the interviewer I was comfortable with the process, even though I now know I have to watch out for excessive ‘hums’ when asking the questions. Part of the cause is that we had run out of questions. Fortunately there was enough I did not know about the process that I was able to generate general questions about the operations of the organization and probe lightly into certain areas. I was constantly chasing markers and points for clarification to help me devise the next question. Occasionally I took down notes but preferred to listen more closely than try to capture everything – I could always go back to the recordings or ask in the next interview. All in all I felt that my interview questions, all unscripted, were hopefully free of a Subjective I (Peshkin, A., 1991).

For me, the ‘human recorder’ role was pointless. I feel that it would have been more valid to pay attention to general observations on what she was saying and write down salient quotes rather than trying to capture every other phrase the participant said. Maybe I understood the role incorrectly, but it did not seem to help.

During the transcription process I observed how awful oral language looks on paper and how much is lost in this ‘translation’. I noticed that certain words were incomprehensible when listening to the audio at a slower speed. I had to play it at normal speed to understand them. I made the connection that words on paper are even ‘slower’ than speech. This granularity provides interesting information, yet to approximate the ‘true’ representation of the native’s point of view, data also has to play at ‘normal’ speed. Hence the necessity of multiple methods of data collection to strive towards ‘valid’ research. You must observe at multiple speeds, levels of granularity and lenses.

Finally I must say that the class session of Week 4, where we conducted mock interviews, was the most productive class of the quarter thus far. The structure of the exercise and the readings helped to create a schema I found extremely helpful. They were essential to feeling confident in the interview process – from creating the questions to interviewing, transcribing, and coding. There was a clear sense of purpose associated with each step.

References

Spindler, G. & Spindler, L. (1987). Teaching and Learning How to Do the Ethnography of Education. In G. Spindler & L. Spindler (Eds.) Interpretive Ethnography of Education at Home and Abroad. Hillsdale, NJ: Lawrence Erlbaum Associates. pp. 17-22.

Glesne, C., & Peshkin, A. (1992). “Making Words Fly,” Becoming Qualitative Researchers: An Introduction. White Plains, NY: Longman. pp. 63-92.

Peshkin, A. (1991). “Appendix: In Search of Subjectivity — One’s Own,” The Color of Strangers, The Color of Friends. Chicago: University of Chicago. pp. 285-295.