Prompt

Read the responses your colleagues wrote about the reading and react to them.

Reaction

(see responses below)

The reading responders did a clear job of relating Gaudin and Chaliès’s (2015) framework to van Es and Sherin’s (2009) “video club” and to Gröschner, Kiemer and colleagues’ Dialogic Video-Cycle (DVC). While some focused on the methodology of the studies, others raised interesting questions about the research papers and the information they offered. Beyond their largely descriptive nature, the responses shared some common themes.

All responders, whether writing about the video club or the DVC, seem to agree that both programs adhere to Gaudin and Chaliès’s framework and succeeded in achieving their goals. In all cases, watching video in PD seems to have had a positive effect, especially on teachers’ motivation and, consequently, on students’ interest in the discipline. Teachers’ metacognitive abilities were also positively affected in both cases. Teachers’ reactions to the PD were also positive, giving them a sense of competence and self-efficacy. In both cases, teachers watched videos of their own practice and were scaffolded for “selective attention” and “learning to notice”.

Another common thread was that all the responders felt the papers could have offered more information. The decision process for selecting and editing the videos that best fit the PD experience was not fully delineated. For one responder, the video selection process was clear, but only in terms of its mechanics and procedures; the papers say little about the content choices involved in editing the videos. The responders also wanted more information about the facilitator’s role and the methods applied during the workshops themselves. The papers focused on the video aspect of the PD at the expense of detail about the interactions between the facilitator and the teachers.

Finally, some responders expressed a desire for more information about how these video-based PDs compare to other, non-video-based PDs the teachers had already experienced. In general, we can see that teachers are satisfied with the results of this kind of PD, but the papers say nothing about what specifically they perceived as different and better about this methodology. One glimpse of such a comparison comes from the control group in the DVC study: teachers in the more traditional PD, in which no videos were viewed, said they would have liked more direct feedback on their teaching. Teachers in the DVC group, who had watched and reflected on videos of their own practice, did not report this need.

I personally found this exercise of paramount importance for gaining new lenses on the papers we read. It is fascinating how each person notices different things, deems different things relevant, and draws different information from the same source material. Clearly, prior knowledge, experiences, and context shape the lenses through which each of us sees the world. Being exposed to these different lenses enhances and expands our own understanding. In addition, reacting to these responses engages us even further with the topic and helps us see patterns we might not have noticed before. In the same way, I believe that video-based PD is an exemplary method for achieving the desired learning objectives with teachers. Videos present a concrete source of information and basis for reflection that is powerful, objective, direct, and close to one’s own practice. Obviously, well-guided facilitation of the reflection process is required, as in any learning experience, yet I believe that once teachers learn how to analyze videos, self-guided learning may happen voluntarily.

Response 1/5

Gaudin and Chaliès (2015) conceptualize the process of video viewing in professional development (PD) in the following four broad categories:

- Teachers’ activity as they view a classroom video (e.g., viewing video with selective attention; viewing video with knowledge-based reasoning).

- Objectives of video viewing in the PD context (e.g. build knowledge on “how to interpret and reflect”; build knowledge on “what to do”; hybrid approach; and objectives based on learning goals).

- Types of videos viewed (e.g. unknown teacher activity, peer activity, own practice, selecting and organizing videos in line with learning goals and contexts).

- Effects of video viewing on teacher education and professional development (e.g. teacher motivation, cognition, classroom practice, recommendations for effective video viewing).

In the context of Gaudin and Chaliès’ (2015) work, van Es and Sherin’s (2009) “video club” is a case of viewing video with selective attention, with a primary focus on “learning to notice” student ideas in elementary math classrooms. This one-year video club PD program considered both the participants’ own practice and the practice of their peers: the PD/research team captured video of participants’ classroom teaching and designed experiences to help participants meet this learning goal. The research team had set out to capture how this type of video club would influence teachers’ thinking and practice, and it documented changes in both over the course of the project. They were able to show how teachers “made space for students’ thinking, … more frequently probed students’ thinking, and … took on the stance of the learning in the context of teaching” (p. 169).

As I read the van Es and Sherin (2009) work, I wanted to know more about how the facilitators designed the content and processes of the PD in light of the elementary math knowledge, processes, and dispositions expected in each of these classrooms. There was little mention of how the videos were edited or of the types of questions used to guide teachers toward the learning goals, which are themselves on the vague side. Noticing student ideas and providing opportunities for students to express them is certainly a step toward understanding how students are approaching the mathematics, but it is unclear whether, through video club participation, teachers were developing knowledge of how to move students along the learning continuum. I think about Schoenfeld and Floden’s “Teaching for Robust Understanding in Mathematics” dimensions and am searching for greater clarity on how van Es and Sherin (2009) think teachers consider the mathematics, the cognitive demand, access to mathematical content, students’ agency, authority, and identity, and the use of assessment practices in shaping their practice and how students learn math. I’m also curious how this particular PD experience coheres or conflicts with other PD experiences that teachers have taken part in, and how that knowledge shapes their beliefs and actions over the course of the project.

Response 2/5

In the following paragraphs I offer an analysis of Gröschner, Kiemer and colleagues’ Dialogic Video-Cycle (DVC) through the lens of Gaudin et al.’s four-principle conceptualization of how video viewing is used in teacher PD. In each area, we can see close alignment between the DVC model and what Gaudin et al. point to in their research analysis as best PD practices regarding video use.

The nature of teachers’ activity as they view a classroom video

In their video viewing conceptualization, Gaudin et al. (2015) point to selective attention as a key component of productive teacher video viewing activity. The video viewing structure in Gröschner, Kiemer and colleagues’ DVC model strongly supported selective attention by designating a specific focus for each analysis session. For example, in the first video analysis and reflection session (workshop 2 of each DVC cycle), the facilitator selected clips and posed questions that guided the participants to focus on the ways in which the teacher in the video activated student engagement. In the second analysis and reflection session (workshop 3 of each DVC cycle), facilitators used video clips from the same lessons, but this time guided the participants’ attention to the ways in which the teacher scaffolded student ideas. This guiding of attention is very much in keeping with Gaudin et al.’s description of selective attention during video viewing.

Gaudin et al. (2015) explain that another important aspect of video viewing during PD is knowledge-based reasoning. The extent to which the teachers in the DVC study exercised knowledge-based reasoning during their video viewing is less clear than the selective attention component, because the authors describe the actual analysis conversations only briefly. We can, however, infer from the few example questions, and from the authors’ mention of teachers posing solutions and alternatives, that teachers engaged in description, interpretation, and prediction while watching and discussing the videos. These are in keeping with Gaudin et al.’s description of “first level” reasoning. The structure of the DVCs also suggests that teachers had the opportunity to engage in “second level” reasoning (comparing visualized events with previous events). During the first cycle, they could connect and compare what they saw in the video with their own past practice. During the second DVC, the teachers would have had a perhaps more explicit opportunity to compare what they observed in the second videos with what they saw in the first videos. Whether the facilitators deliberately capitalized on these opportunities for comparison, however, remains unknown.

Objectives of video viewing in teacher education and professional development

Gaudin et al. (2015) point to three major objectives for using videos during teacher PD. One is to model how to implement a practice (for example, had a video been used in workshop 1 of the DVC process, it would quite likely have served this purpose). A second objective is to teach participants how to interpret and reflect on practice. The third is a hybrid of the two. Because they were used more in a problem-solving capacity, as examples rather than exemplars, the videos used in the DVC reflect the objective of learning how to interpret and reflect. If, however, we consider the overarching goals of the DVC along with its two-cycle structure, the objective of watching the videos becomes more of a hybrid. The teachers were asked to interpret and reflect on what occurred in the videos in the service of refining their practice for the second cycle (and beyond). Though no videos were specifically chosen as exemplars, it is conceivable that exemplary practice surfaced in the variety of clips observed. This suggests that the same videos could have been used both to build capacity for best practice (the “normative” objective) and to support reflection and interpretation (the “developmentalist” objective) throughout the DVC process.

The nature of classroom videos viewed in teacher education and professional development – and –

The effects of video viewing on teacher education and professional development

Gaudin et al. (2015) describe three types of videos that can be made available for viewing: videos that feature unknown teachers, peers, or one’s own activity. While they point to research that explores the advantages and disadvantages of each type, they emphasize that watching peer and self videos may be the most productive because these videos encourage teachers to “ ‘know and recognize’ themselves” (Leblanc, 2012, as cited in Gaudin et al., 2015, p. 51) and “‘move toward’ new and more satisfactory ways of teaching” (Gaudin et al., 2015, p. 51). In the DVCs, the teachers watched videos of themselves and their peers conducting specific lessons and were guided to identify and reflect upon the effectiveness of the observed strategies for classroom discourse. The results of this approach mirror the affordances that Gaudin et al. describe, particularly in the area of teacher motivation. In Gröschner et al.’s final round table discussion, for example, teachers who had enrolled in the more traditional PD, in which videos were not viewed, expressed a desire for more direct feedback on their own teaching. In contrast, teachers who had participated in the DVC were satisfied with this element of their experience. In their final analysis, Gröschner et al. found that teachers in the DVC group had stronger feelings of competence and satisfaction than those in the control group, which aligns with what Gaudin et al. describe in their analysis of similar research on this topic.

Response 3/5

Gaudin and Chaliès discuss four aspects of video viewing as a strategy for teacher professional development: “the nature of teachers’ activity as they view classroom videos,” “the objectives of video viewing in teacher education and professional development,” “the type of video viewed in teacher education and professional development,” and “the effects of video viewing on teacher education and professional development.” Here we consider the study by Kiemer, Gröschner, et al. of a PD intervention built around video viewing through these four lenses.

With respect to the nature of teachers’ activity, Gaudin and Chaliès look for active, rather than passive, engagement with the video. In particular, they prioritize evidence of selective attention and knowledge-based reasoning. Kiemer and Gröschner do not provide much information about the interactions that took place during their workshops, focusing instead on the evolution of teachers’ answers on the pre-, mid-, and post-questionnaires that surrounded the workshops, as well as on the effects of the PD after its conclusion. They do, however, talk about wanting to engage the teachers in the same ways that they hope the teachers will go on to engage their students, so they have one activity designed to “activate students verbally and to clarify discourse rights” and another to “scaffold students’ ideas.” It is unclear what the content of the discussions during those activities was, but from the later feedback sections, it seems that teachers felt they were being given “tips and suggestions about things you can change quickly,” which sounds less like a constructivist/cognitive-dissonance approach (which might focus first on selective attention) and more like a knowledge-based reasoning focus (looking at what the teacher does in the video to reason through, and get feedback on, what he or she could do to be more effective).

The objectives of video viewing in the Kiemer and Gröschner study are clearer. They want to help teachers build productive classroom discourse, through open-ended questions and feedback, in order to promote student interest, and thus motivation and learning outcomes. This goal seems to fall into Gaudin and Chaliès’s “normative” bucket: it helps teachers reflect on and develop their practice not with the intent of promoting ongoing self-directed reflection, but with a focus on leading teachers to come away with the intent and strategies to lead student discussions more “correctly.” On the other hand, the authors do look at teachers’ perceived autonomy, suggesting an interest in teachers’ self-guided learning, yet their desired outcomes are all stated in terms of changes in teacher practice and in student outcomes. Additionally, the videos are all certainly “examples, not exemplars,” so they are not shown as “what to do” videos, but they are nonetheless used as a jumping-off point for discussions of “what to do.”

Next, the nature of the classroom videos is again quite clear. Kiemer and Gröschner use videos of the teacher participants themselves, so, presumably, the teachers see videos of themselves as a main focus but also videos of their peers who are participating in the same workshops. The workshops provide the community of support recommended for viewing videos of one’s own teaching, as well as the atmosphere of productive discourse that is scaffolded by the facilitator. The facilitator also pre-selects the clips to be watched in the workshop, reflecting the need described by Gaudin and Chaliès for more preparation and scaffolding than when watching other teachers. As all of the participants are mid-career teachers rather than student teachers, using videos of the teachers themselves also fits into Gaudin and Chaliès’s “continuum of teacher professionalization,” which suggests that they are ready for such introspection even if earlier-career teachers might not be.

Finally, Gaudin and Chaliès see common effects of video viewing as enhancing teacher motivation and teachers’ selective attention and they make particular note of the fact that “little empirical evidence has been presented on how video use benefits actual classroom practice.” Yet, Kiemer and Gröschner’s second article specifically explores the effect of the PD intervention on teacher practices and student interest and motivation, which they also acknowledge as being unique among research papers in this field. For the most part, they do find positive outcomes relative to their objectives. Teachers used feedback more effectively to promote student discourse in their classes and students were found to have more interest, as well as sense of autonomy and competence. Gaudin and Chaliès’s discussion of indirect evidence about teacher practices and student outcomes (as well as their direct mention of Kiemer and Gröschner’s study in this section) suggests that these findings are in line with Gaudin and Chaliès’s ideals for the effects of video viewing in a successful PD intervention.

Response 4/5

Gaudin and Chaliès (2015) analyzed and categorized 255 studies of the use of video in professional development along the following four dimensions: 1) the nature of the activity teachers engage in when viewing video during professional development, 2) the goals of having teachers view such video, 3) the types of video used, and 4) the effects of viewing video in professional development. Using this four-part conceptualization, one can analyze and summarize any program of professional development, including the one known as the “Dialogic Video Cycle” or DVC (Gröschner et al., 2014; Kiemer et al., 2015).

The Dialogic Video Cycle is a professional development program that uses video to support teachers in shifting the nature of the discourse in their classrooms. The DVC consists of two cycles of professional development, each of which comprises three workshops. In the first workshop, teachers collaborate in modifying a lesson plan that they then implement in their classrooms. Implementation of this modified lesson plan is filmed in each teacher’s classroom. Clips from these video records are then selected by the DVC facilitator and shown to teachers in the second and third workshops of the DVC. In workshop #2, teachers focus on the types of questions posed by the teachers in the videos, paying particular attention to whether these questions are open (e.g., what do you think happens if we heat it up?) or closed (e.g., do we have any right angles here?). In workshop #3, by contrast, teachers are asked to focus on the sorts of feedback the teachers in the videos provide to students, as well as to share ideas for how to take up students’ correct and incorrect answers. The second cycle of the DVC consists of three similar workshops that revolve around the teaching of a different lesson plan.

While teachers in the DVC do not select the video clips viewed in workshops #2 and #3, they play an active role in this particular program, consistent with the core features of effective professional development (Desimone, 2009). Throughout both workshops, teachers are asked a series of questions by the professional development facilitator and are generally encouraged to reflect on their experience delivering the lesson that was filmed. Additionally, teachers are encouraged to ask clarification questions of the teacher in the video being viewed, who can then respond by providing necessary explanations or describing contextual factors in greater depth.

The objective of engaging teachers in the DVC professional development program was to support them in moving toward a more dialogic model of discourse, in which teachers and students co-construct meaning together. This objective was pursued because dialogic classrooms are believed to be better at enhancing students’ interest in the relevant subject matter than classrooms in which the teacher adopts a more didactic, unidirectional pattern of discourse (Kiemer et al., 2015). In other words, the primary objective of this PD program, encouraging teachers to change their discursive practices, was pursued because success in meeting it was expected to lead to success in meeting the secondary objective, enhancing students’ interest in their learning.

The video viewed by teachers in the DVC consists of recordings of the teachers themselves teaching a lesson they had previously modified in collaboration with one another. Specifically, teachers view video clips selected “on the basis of the criteria of productive classroom discourse” (Kiemer et al., 2015, p. 96). Stated differently, clips are chosen because they will presumably engender rich conversation among teachers about both the questioning behaviour of the teachers in the video (workshop #2) and the nature of the feedback those teachers provide in response to student contributions (workshop #3).

According to Kiemer et al. (2015), as a result of the DVC professional development, teachers’ practice did, as hypothesized, change in notable ways. While teachers did not come to ask more open-ended questions as a result of having taken part in the DVC, they did show significant improvement in the type of feedback they provided to students. At the conclusion of the DVC, teachers who took part in this program provided less feedback that simply told students whether an answer was right or wrong (i.e., simple feedback) and increasingly gave feedback that highlighted what was right or wrong about an answer, as well as how the answer could be improved (i.e., constructive feedback). Additionally, and as expected, students in the classrooms of teachers who participated in the DVC PD showed increased interest in the subjects their teachers came to teach in a more dialogic manner (Kiemer et al., 2015).

Response 5/5

The implementation of what van Es and Sherin (2009) call “video clubs” has elements that can be critiqued through Gaudin and Chaliès’s (2015) four main conceptualizations of the use of video viewing in professional development. The video clubs use all four principles to varying degrees, but van Es and Sherin note that the focus was on analyzing student thinking rather than on implementing new methods or changing teachers’ beliefs (p. 159). This focus has both affordances and limitations when analyzed against the four principles framed by Gaudin and Chaliès.

First, the teachers’ activity while viewing the videos had a specific focus on describing what they identified as student thinking, and it gave the teachers a structure for interpreting that thinking using evidence. Analysis of this principle showed it to offer some of the greatest learning opportunities for teachers. In fact, Gaudin and Chaliès highlighted video clubs as a model of the elements that contribute to increasing a teacher’s capacity to reason (p. 46).

Second, the video clubs had specific professional development objectives around student thinking. However, the paper did not report whether the researchers also had the objective of “best practices” in mind. The clips were selected for their “potential to foster productive discussions of student mathematical thinking” (van Es & Sherin, 2009, p. 160), but it was not clear whether there was attention to the practices that may have contributed to higher levels of discourse around a problem. Although van Es and Sherin report an increase in teachers’ attention to student thinking, it is not clear whether their learning goal of focusing on student thinking could have been better served by having teachers critically discuss the practices associated with student thinking. In this respect, it is not clear whether a hybrid objective could have better served their main focus.

Third, Gaudin and Chaliès note the limitations of having teachers analyze peers’ professional practice (p. 51). In the video clubs, the analysis does show a shift toward focusing on students in discussions, but it is difficult to infer whether watching peers might have dampened the depth of these discussions. It was also not clear how teachers were scaffolded into watching each other, or whether there were practices that could have been critiqued to further sharpen teachers’ perceptions of student thinking. Gaudin and Chaliès feature studies concluding that the first videos should be selected and organized around viewing an unknown teacher (p. 52). Notably, the video clubs go directly into viewing peer videos, which could have affected the shift in the conversations and the depth of teacher analysis reported in the study.

Fourth, van Es and Sherin do show an association between video clubs and practices such as the following: attention to student thinking while teaching, knowledge of curriculum, changes in teachers’ instructional practices, and opportunities for student thinking. Although not all the aspects of the effects of video viewing that Gaudin and Chaliès discuss are explicitly addressed in video clubs, there does seem to be an increase in the teachers’ motivation and cognitive abilities. For example, some teachers report learning more about the curriculum and others “positioning themselves as learners in the classroom” (p. 171).

Overall, although I may be examining aspects, drawn from the four conceptualizations, that video clubs were not designed to address, the video clubs have a design and many outcomes that are sound when analyzed through Gaudin and Chaliès’s framework. I found that video clubs provide a good base for future professional development programs using video viewing. With the four principles in mind, fine-tuning video clubs could hold great promise for teacher learning.

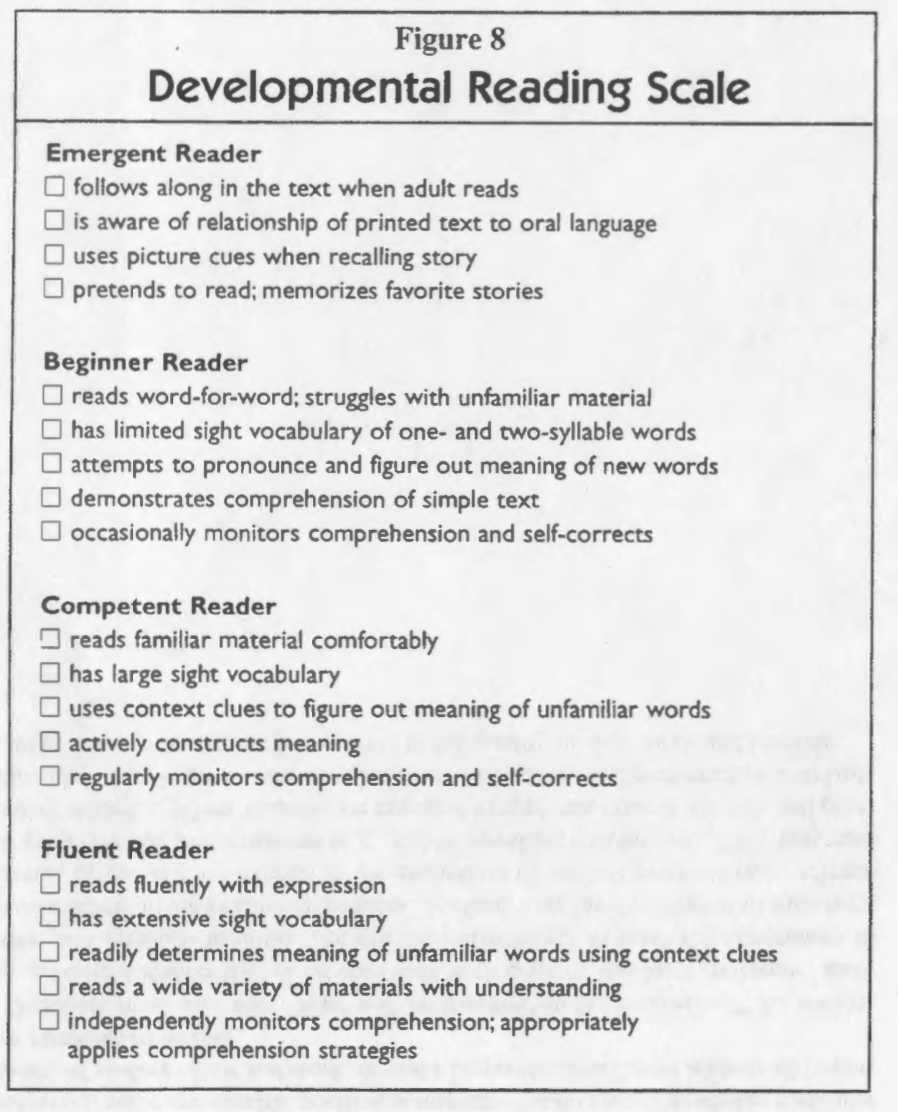

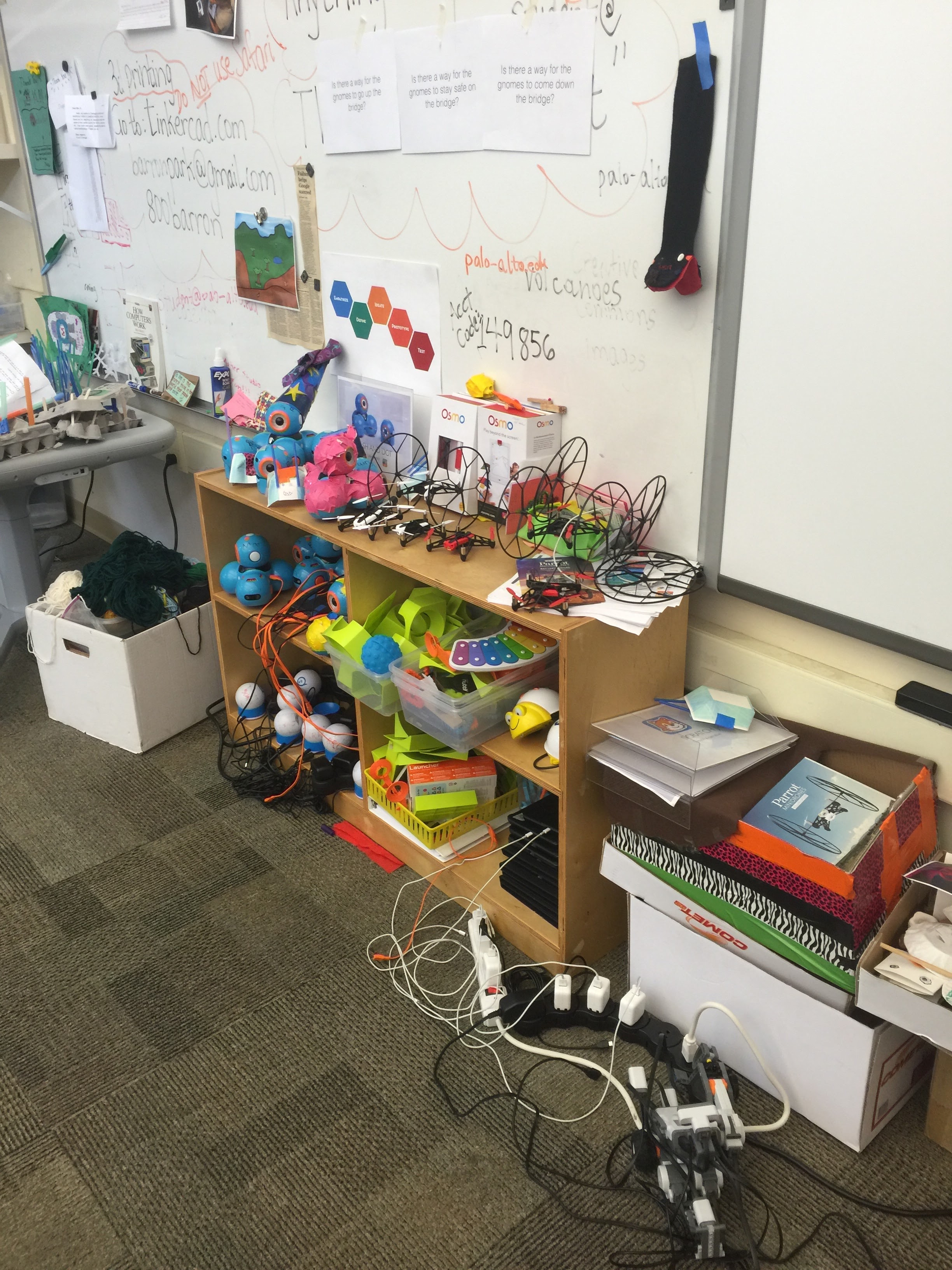

General view of the maker space

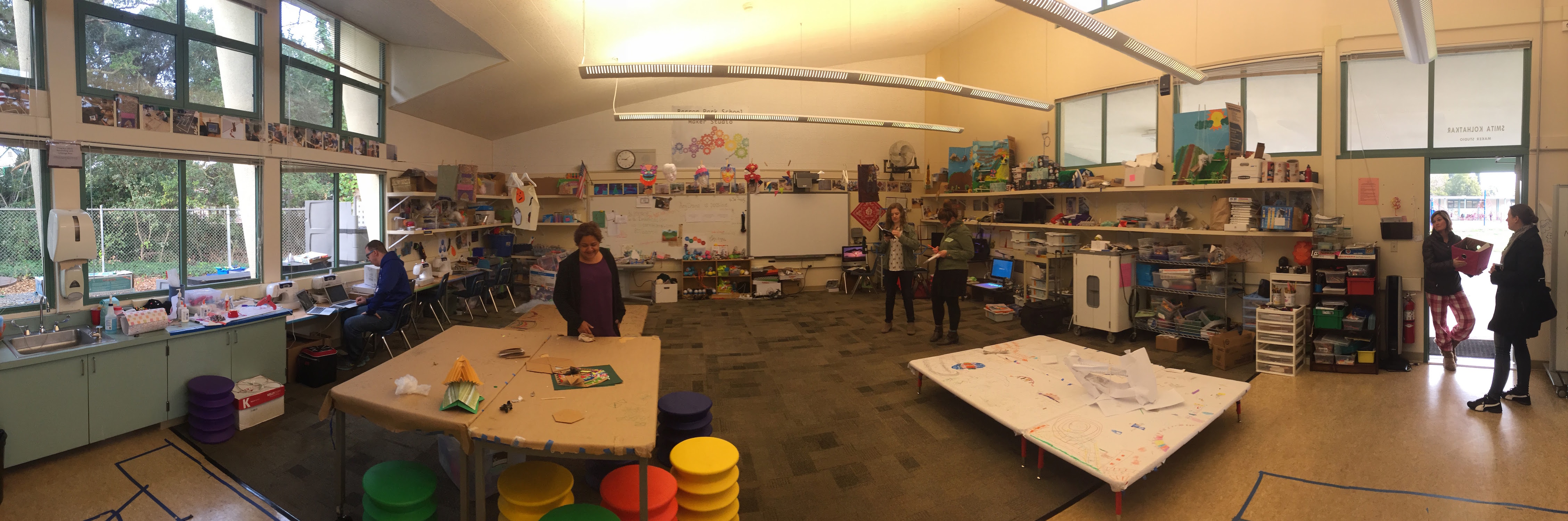

General view of the maker space 3D printer, object scanner, Lego Mindstorms

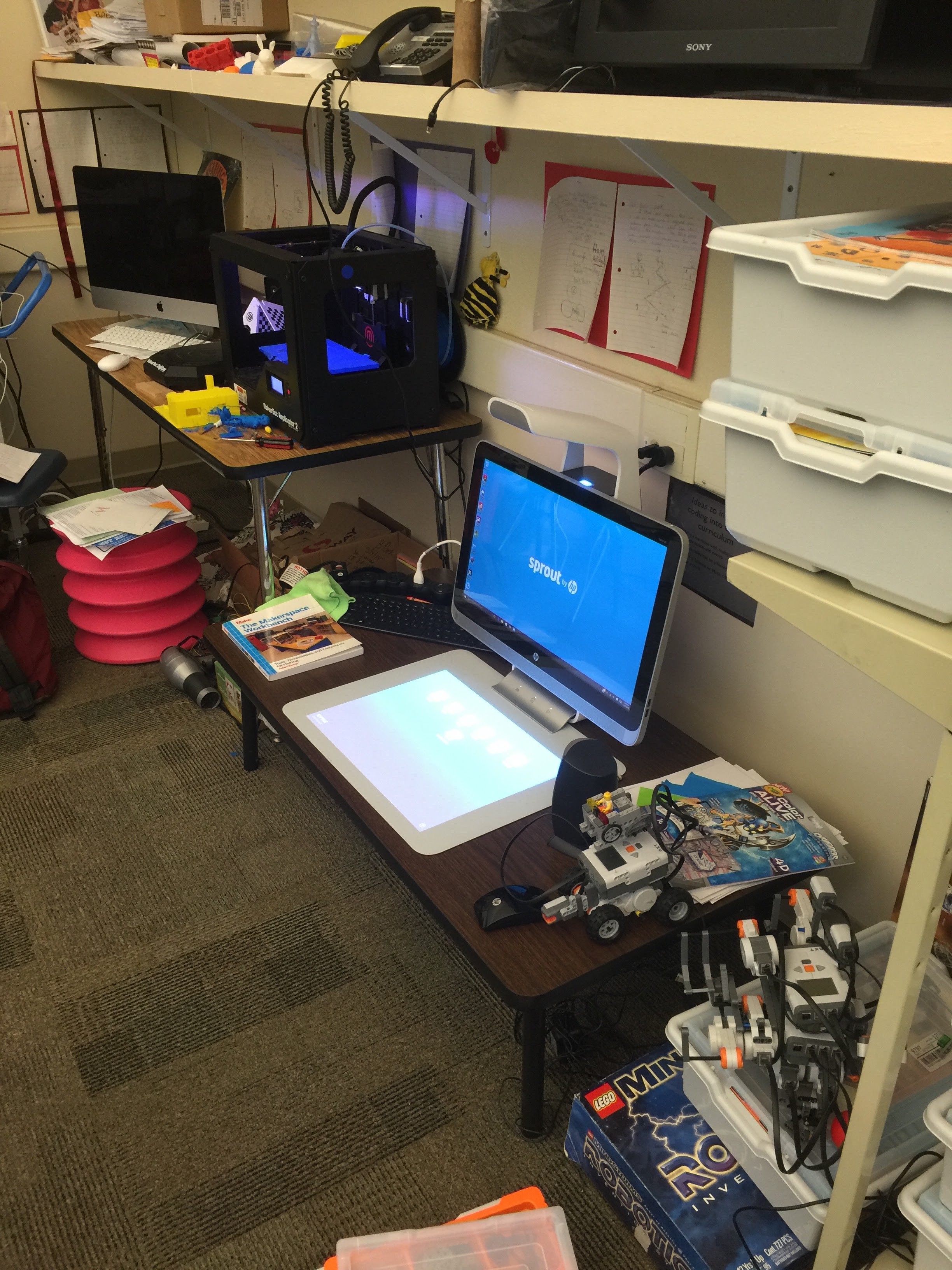

3D printer, object scanner, Lego Mindstorms Smartboard, document camera, 3D printer

Smartboard, document camera, 3D printer  Robots, Lego Mindstorm, and mini-drones

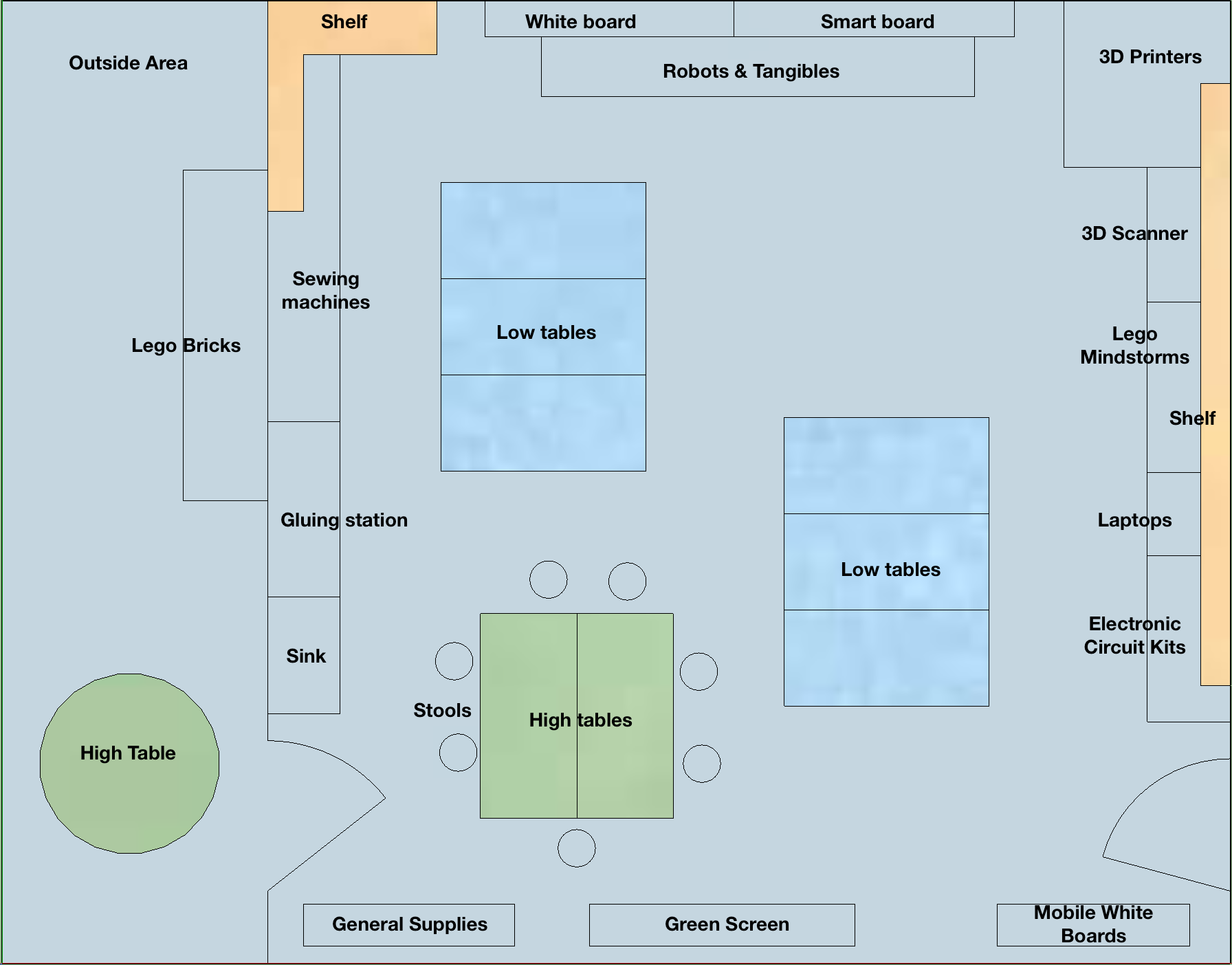

Robots, Lego Mindstorm, and mini-drones Makerspace floorplan

Makerspace floorplan

Figure 2: Playing with a destructible turtle house

Figure 2: Playing with a destructible turtle house Figure 3: Talking sensors with Engin

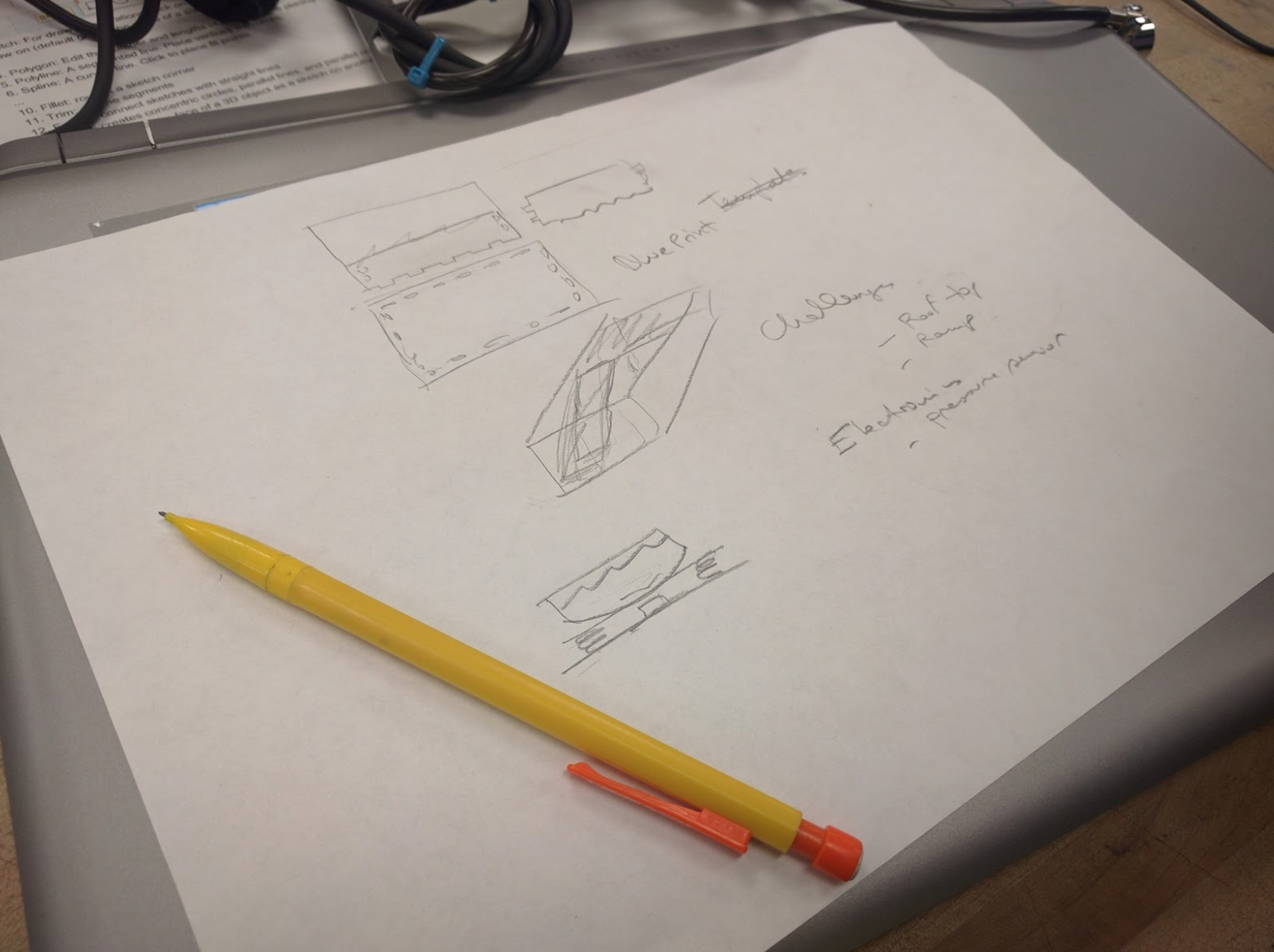

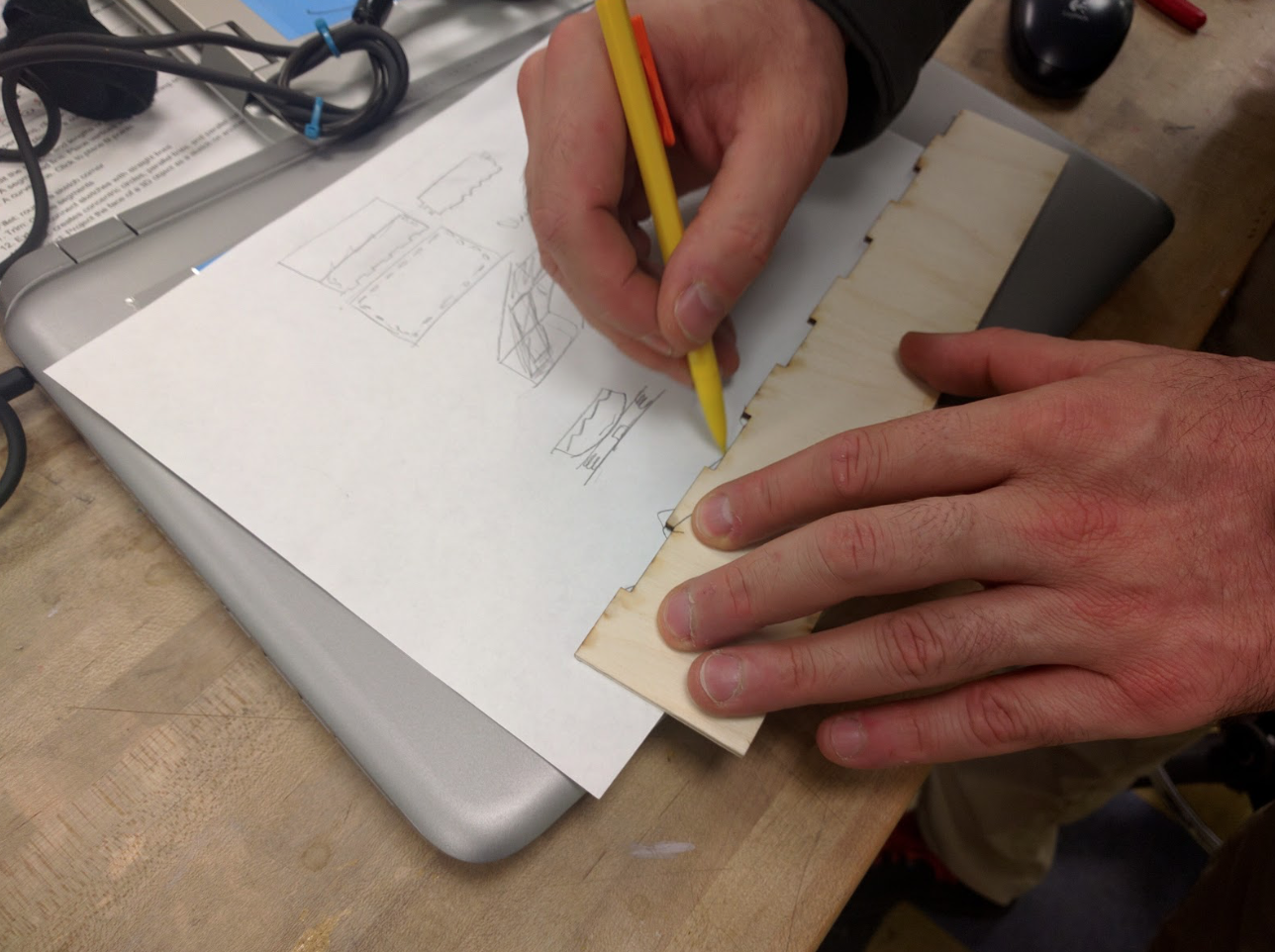

Figure 3: Talking sensors with Engin Figure 4: Moving from a blueprint to a template kit

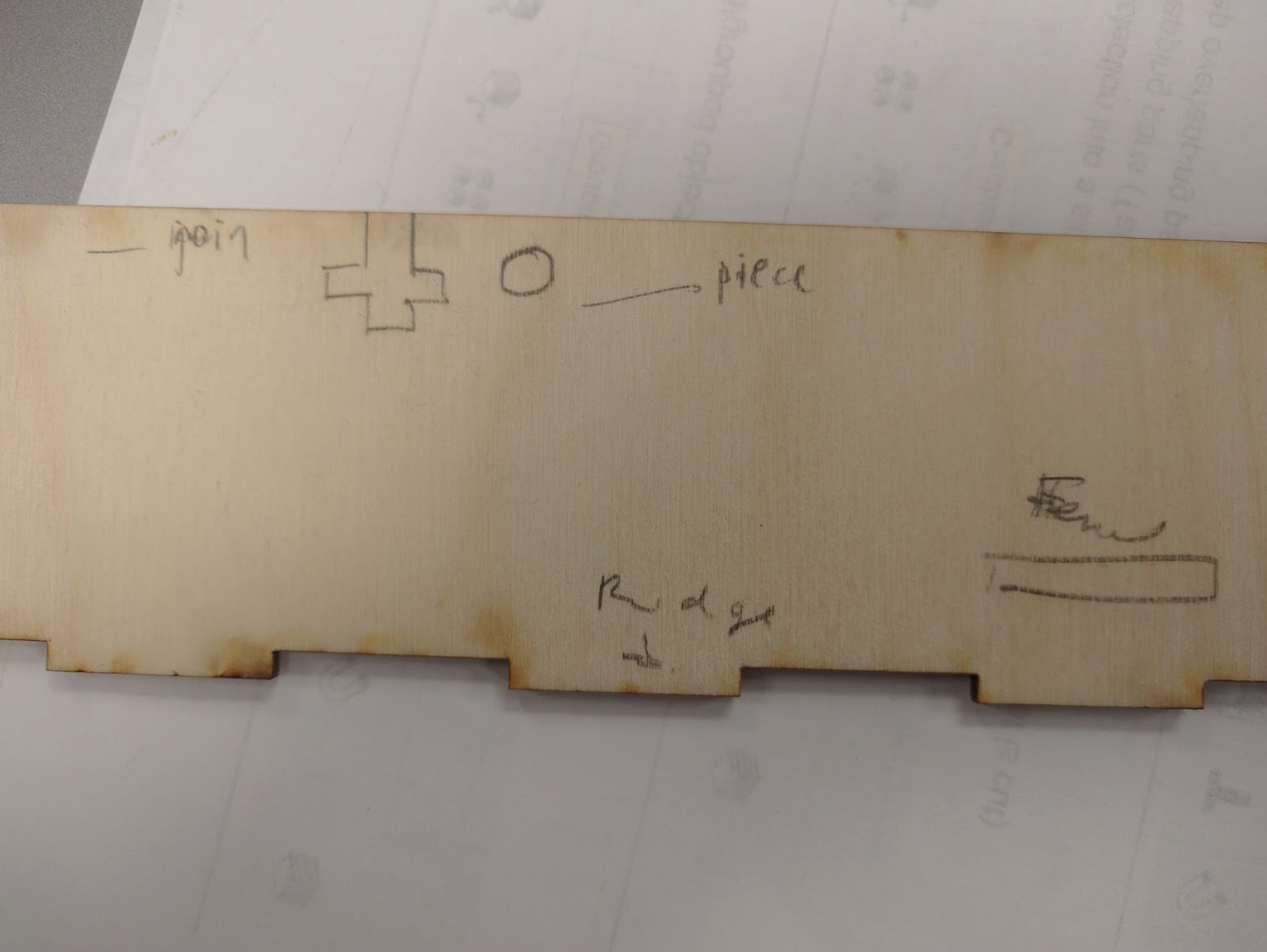

Figure 4: Moving from a blueprint to a template kit Figure 5: Planning the joins and labels for the template kit

Figure 5: Planning the joins and labels for the template kit Figure 6: Starting to draw the prototype in Coreldraw

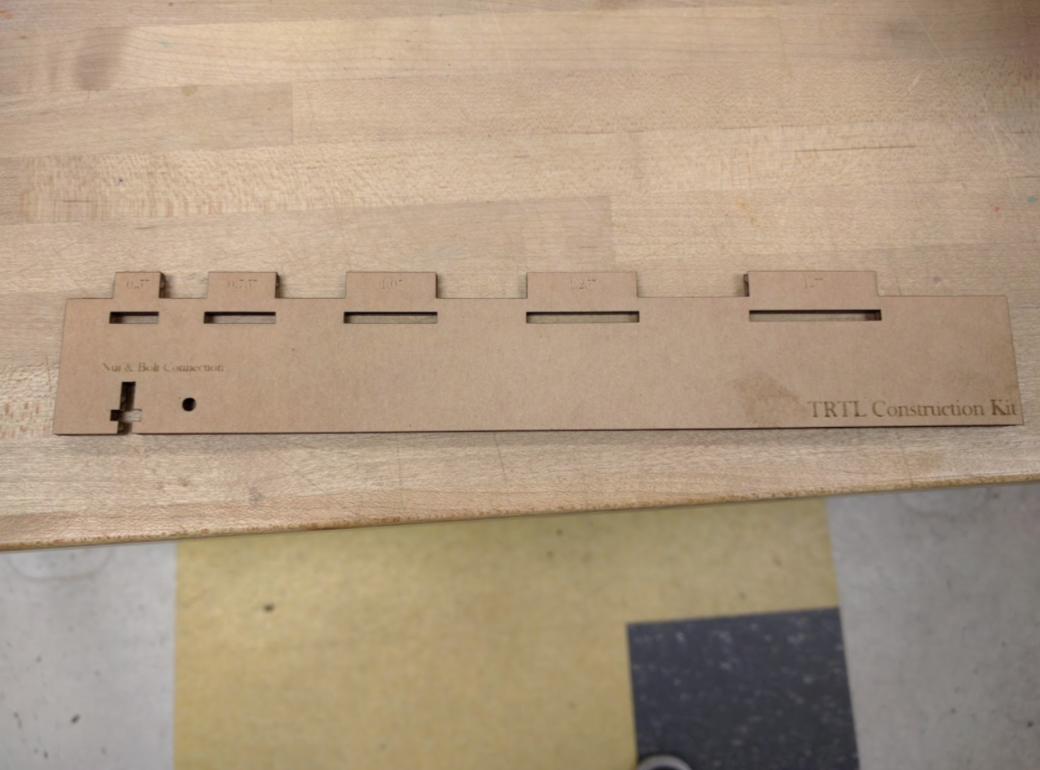

Figure 6: Starting to draw the prototype in Coreldraw Figure 7: A cardboard prototype

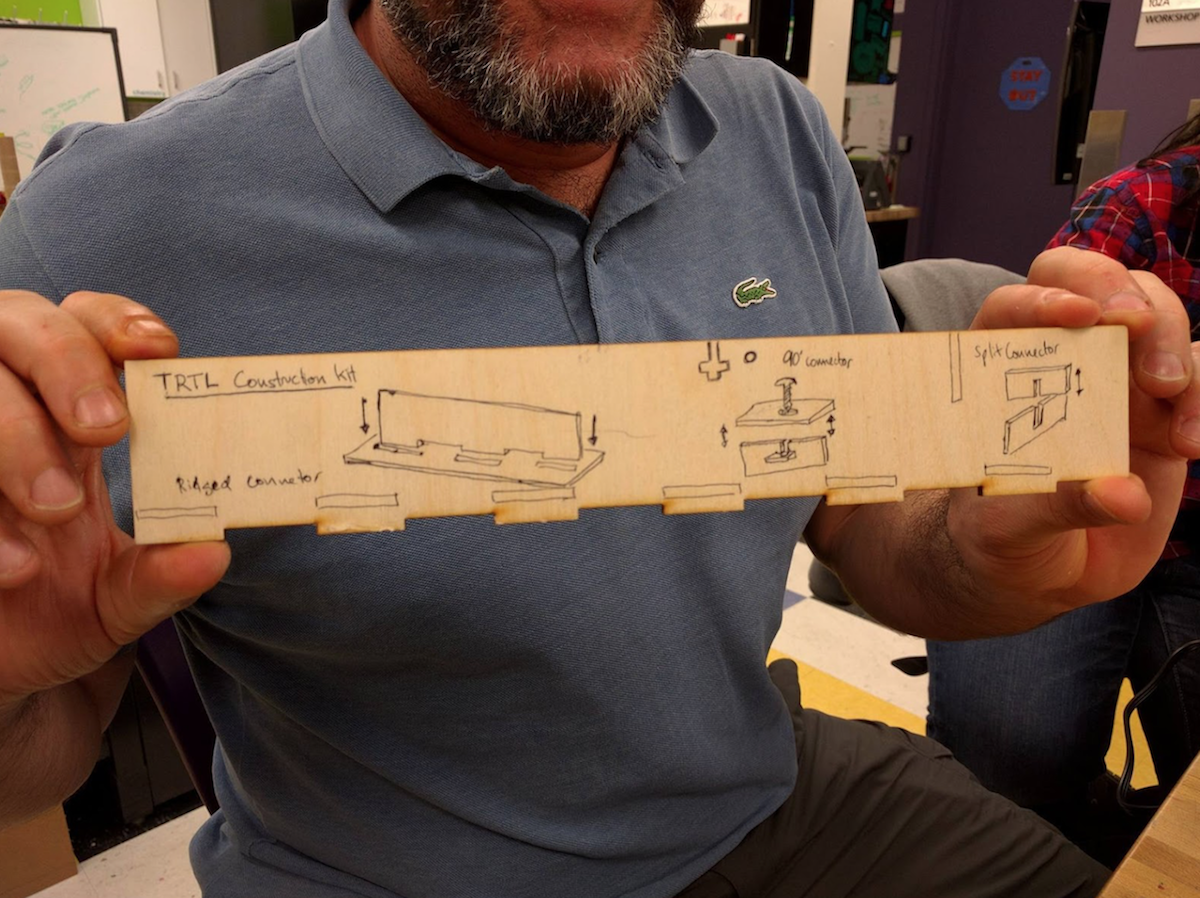

Figure 7: A cardboard prototype wFigure 8: Iterating on the instructional diagrams for the next prototype

wFigure 8: Iterating on the instructional diagrams for the next prototype