Curriculum Critique – tynker.com – Programming 100

Curriculum Construction – Winter 2016 – Lucas Longo

Tynker is an online platform that aims to teach programming to children from 7 to 14 years of age, or grades 1 through 8, through interactive tutorials in which they create the logic for video games. Virtual characters guide the student through each challenge, showing step by step what has to be done. The challenges increase in complexity and gradually introduce new concepts and commands available in the environment. The tool is extremely attractive in terms of design and flexible enough to serve the various age groups it is intended for. An interface for teachers is also available to create lessons, register students, and assign lessons from the course catalog. Finally, teachers can track students’ progress and their responses to the quizzes presented during the challenges.

I analyzed in more detail their first programming course, which introduces the basic mechanics of controlling a game: moving a character on the screen, checking whether a character is touching another object and reacting accordingly, and the concept of repetition, or loops. The student drags and connects instruction ‘bubbles’ to build a chain of commands that the character performs once the ‘play’ button is pressed. If the commands are correctly positioned, the goal is reached and the student progresses to the next challenge. At the end of each lesson, a short multiple-choice quiz checks that the student has understood some basic concepts and terminology.

Nowhere on the website is there an explicit statement of the curriculum ideology on which they based their design. The nature of the interactions and affordances the tool provides shows very little presentation of underlying concepts and instead favors immediate engagement: acting upon, using, and testing one’s own instructions. One might argue that several ideologies are present in the curriculum, which here includes the technological tools, the nature of the challenges, and the ways of engaging with the concepts. “If you are going to teach a kid to swim, put them in a swimming pool”: Dewey’s constructivist concepts and the project-based learning methodology are evident. The challenges also follow a clear learning progression, offering the students a carefully scaffolded continuity of experiences that build upon each other and provide the basic concepts the student will need in subsequent ones.

“From this point of view, the principle of continuity of experience means that every experience both takes up something from those which have gone before and modifies in some way the quality of those which come after.” (Dewey, 1938, p.35)

Cognitive Pluralists would argue that the subject matter itself, programming, taps into one of our innate abilities. “As a conception of knowledge, Cognitive Pluralism argues that one of the human being’s distinctive features is the capacity to create and manipulate symbols.” (Eisner, 1994, p.79) It is also a very practical activity where you learn by doing.

“Its meaning has shifted from a noun to a verb; intelligence for more than a few cognitive psychologists is not merely something you have, but something you do.” (Eisner, 1994, p.81).

Noddings’s (1992) care framework, another powerful concept the curriculum exhibits through its video-game-based interface and activities, helps explain why students might engage with the content.

“We need a scheme that speaks to the existential heart of life – one that draws attention to our passions, attitudes, connections, concerns, and experienced responsibilities.” (Noddings, 1992, p.47).

I believe it is a fair generalization nowadays that children are fascinated by video games and thus might find it relevant and interesting to design their own. In the process, they are exposed to the concepts of programming, video game creation, and even design, once they start customizing their characters and game environments.

The implicit assumption of this curriculum is that programming is an important skill to learn for the future. Explicitly, the curriculum maps each lesson to the Common Core Mathematics, Common Core ELA, and CSTA Computer Science standards that students develop in it, and clear charts show these mappings by grade. Through the lessons and activities, students are able to learn and explore several Math and English Language Arts concepts. Beyond the Programming course I explored, the company offers several other courses, including English, Science, and Social Studies projects. Each course contains several lessons, exercises, and activities the students can complete.

The lessons are designed so that teachers do not need a deep knowledge of programming to use them. As their website (tynker.com, 2016) puts it, “Built for Educators. No Experience Required.”, since their “Comprehensive Curriculum” has “Ready-to-use lesson plans and STEM project templates for grades K-8.” Using this curriculum requires one computer or tablet per child and an internet connection. Even though this might not be a reality in all schools, I believe it is only a matter of time before the “1 laptop per child” dream comes true. In any case, the site also provides challenges for students to complete on their own computer or tablet in case the school does not provide adequate access. In other words, parents could use this site and curriculum to encourage their children to engage with programming.

All the lessons are clearly geared toward, and support, the intended goals and learning activities. For example, to introduce the concept of angles, the student has to program a spaceship to trace the lines of a star by giving it commands to go forward, turn by a certain angle, and repeat the process until the star is complete. I felt that something was missing: an explanation of how to work out how many degrees each turn should be, given the number of points on the star. The aim of the activity was to introduce loops, and it was intended for second graders, so leaving out this explanation may have been a deliberate design choice. I would have to pay for access to the more advanced lessons to find out how they introduce such derivations, which the course catalog suggests are covered in later lessons.
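To illustrate the missing derivation, here is a minimal Python sketch. It assumes the star is traced as one continuous closed path (a regular star polygon) using only “move forward”, “turn”, and “repeat”; the function names and the `skip` parameter are hypothetical illustrations, not Tynker’s actual blocks. The reasoning: to return to its starting point facing the same way, the spaceship must turn through a whole number of full circles (360 × skip degrees in total), split evenly over one turn per point, so a five-pointed star needs 360 × 2 / 5 = 144 degrees per turn.

```python
import math


def star_turn_angle(points: int, skip: int = 2) -> float:
    """Degrees to turn at each tip of a star traced as one closed path.

    Hypothetical helper: the path visits every `skip`-th vertex of a regular
    `points`-gon (a star polygon), turning through 360 * skip degrees in total.
    """
    # A single continuous path exists only when points and skip share no factor
    # and skip is less than half of points; skip == 1 yields an ordinary polygon.
    if skip < 1 or 2 * skip >= points or math.gcd(points, skip) != 1:
        raise ValueError("no single-path star for these values")
    return 360 * skip / points


def trace_star(points: int, skip: int = 2, side: float = 100.0):
    """Simulate 'repeat: move forward, turn'; return the turn angle and vertices."""
    turn = star_turn_angle(points, skip)
    x, y, heading = 0.0, 0.0, 0.0
    vertices = [(x, y)]
    for _ in range(points):  # the "repeat" block: one iteration per point
        # "move forward" by `side` in the current heading
        x += side * math.cos(math.radians(heading))
        y += side * math.sin(math.radians(heading))
        vertices.append((x, y))
        heading += turn  # "turn" at the tip by the computed angle
    return turn, vertices


if __name__ == "__main__":
    turn, vertices = trace_star(5)
    print(turn)  # 144.0
    end_x, end_y = vertices[-1]
    # The path closes: the last vertex is back at the start (0, 0).
    print(math.isclose(end_x, 0, abs_tol=1e-9) and math.isclose(end_y, 0, abs_tol=1e-9))  # True
```

The same reasoning gives about 154.3 degrees (360 × 3 / 7) or about 102.9 degrees (360 × 2 / 7) for a seven-pointed star, while a six-pointed star cannot be drawn as a single continuous path this way, so the sketch raises an error for it.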

The assessment tool clearly presents how students are progressing through the activities. A chart shows the learning outcomes per student, with icons indicating their speed and accuracy in completing each task. I did not have access to the actual assessment tool, which made me curious about how detailed its results are. I would like to know whether the teacher can see exactly which questions each student got wrong. My guess is that it does, given how carefully and thoroughly the rest of the tool has been thought through.

One unintended consequence I foresee in implementing this tool in the classroom is that younger students might get distracted by the character customization capabilities the tool offers. I have seen this happen firsthand when teaching with Scratch, a similar tool on which Tynker bases its block-programming style. Students end up spending a significant amount of time playing with the color of the hair, clothes, and other customizations instead of attending to the task at hand. The tool, though, cleverly limits what the student can do in each exercise and sets a time limit on character customization. Only in more advanced lessons can the students engage more freely with all the features the tool offers.

If we apply Wiggins & McTighe’s (2005) WHERETO evaluation of learning activities, one could say that the tool addresses all of its elements:

W = help the students know Where the unit is going and What is expected.

The lessons have clear goals and measures of success.

H = Hook all students and Hold the interests

I was actually entertained by the challenges presented and attracted by the design of the scenarios and characters.

E = Equip students, help them Experience the key ideas and Explore the issues

The interactive nature of the challenges does provide a rich tool to address these criteria.

R = Provide opportunities to Rethink and Revise the understandings and work

The challenges themselves provide opportunities to redo and revise their programs in order to achieve their goals.

E = Allow students to Evaluate their work and its implications

The evaluation of the work comes directly from attaining the goals, so the feedback is immediate. The quizzes also give the students an opportunity to evaluate what they have learned and reflect upon it, even if on their own.

T = Be Tailored (personalized) to the different needs, interests, and abilities of learners

The different lessons attend to different levels and abilities the students might have and the tool is flexible enough to allow students to go as far as they wish with their programming explorations.

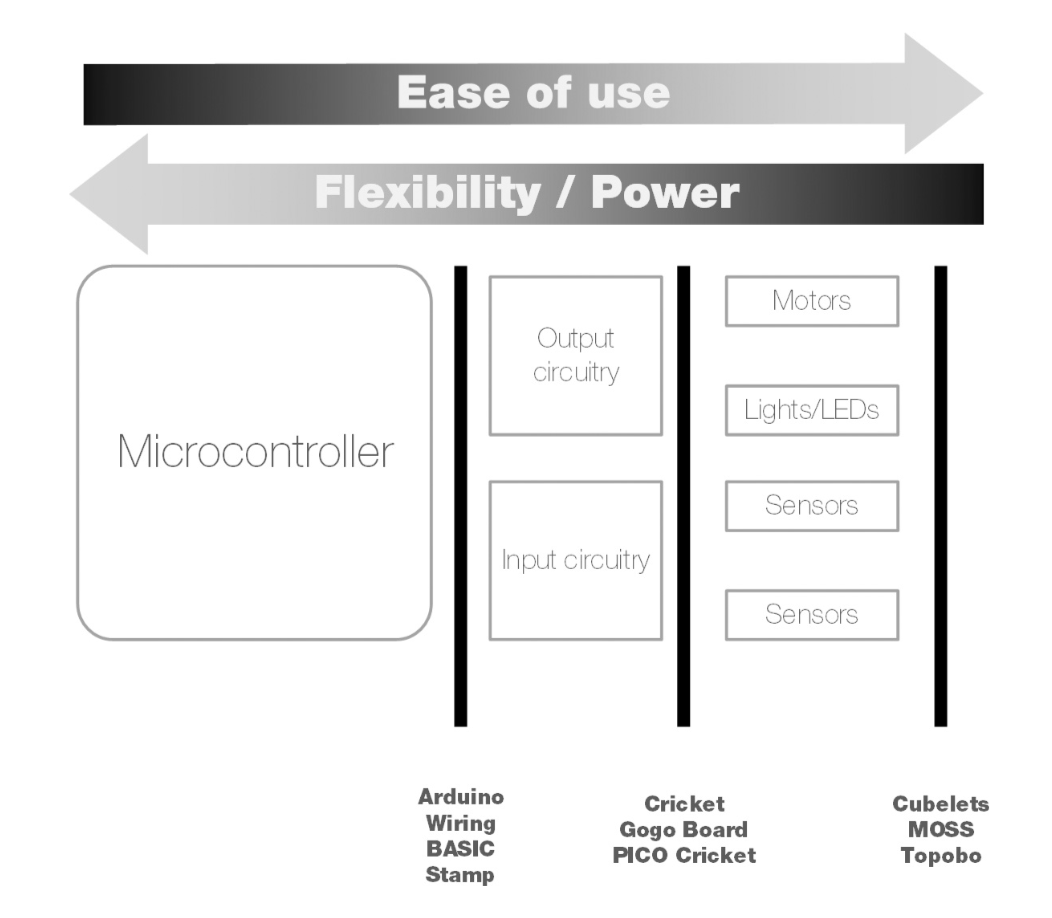

In conclusion, I was very impressed with the quantity, quality, and breadth of the tool. The introductory lessons are easy enough and scaffolded enough for the intended ages. The ceiling is high as well, in the sense that students can move from block-based programming to actual programming in Java and to connecting to robots, which enhances the tangibility of the learning experience.

“The artistry in pedagogy is partly one of placement – finding the place within the child’s experience that will enable her to stretch intellectually while avoiding tasks so difficult that failure is assured.” (Eisner, 1994, p.70)

References

Dewey, J. (1938/1997). Experience and Education. New York: Simon & Schuster.

Eisner, E. (1994). The Educational Imagination: On the Design and Evaluation of School Programs (3rd ed.). New York: Macmillan. pp. 47-86.

Noddings, N. (1992). The Challenge to Care in Schools. New York: Teachers College Press.

Wiggins, G., & McTighe, J. (2005). Understanding by Design (Expanded 2nd ed.). Alexandria, VA: Association for Supervision and Curriculum Development.