Assignment

Round 2! What is your new and improved Point of View?

Response

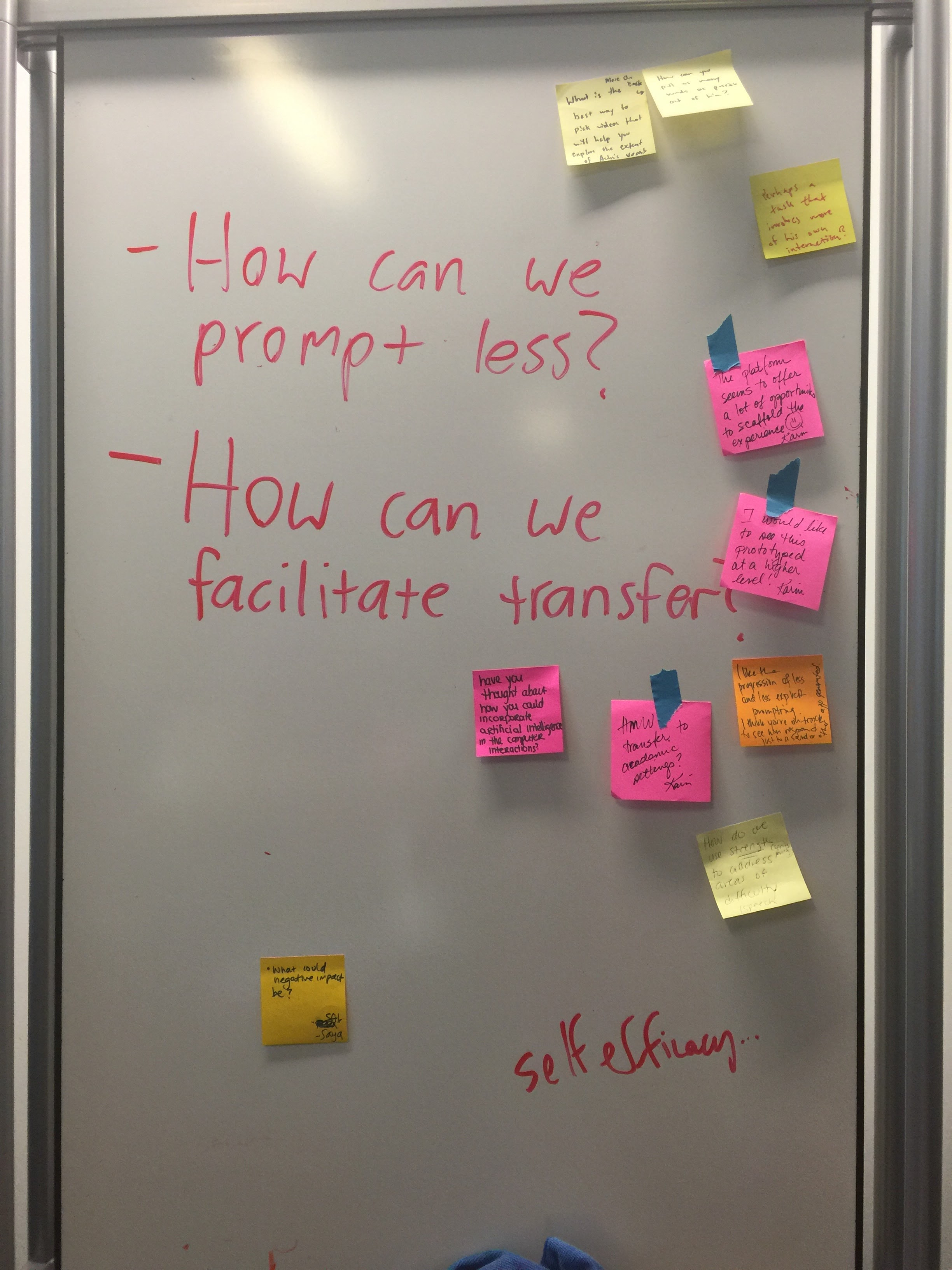

Our point of view has stayed the same: after these weeks of work, we want to continue working with Achu on the same learning goals.

HMW support Achu in generating more words, and even sentences?

We have three new prototypes that we are excited to test with him this week:

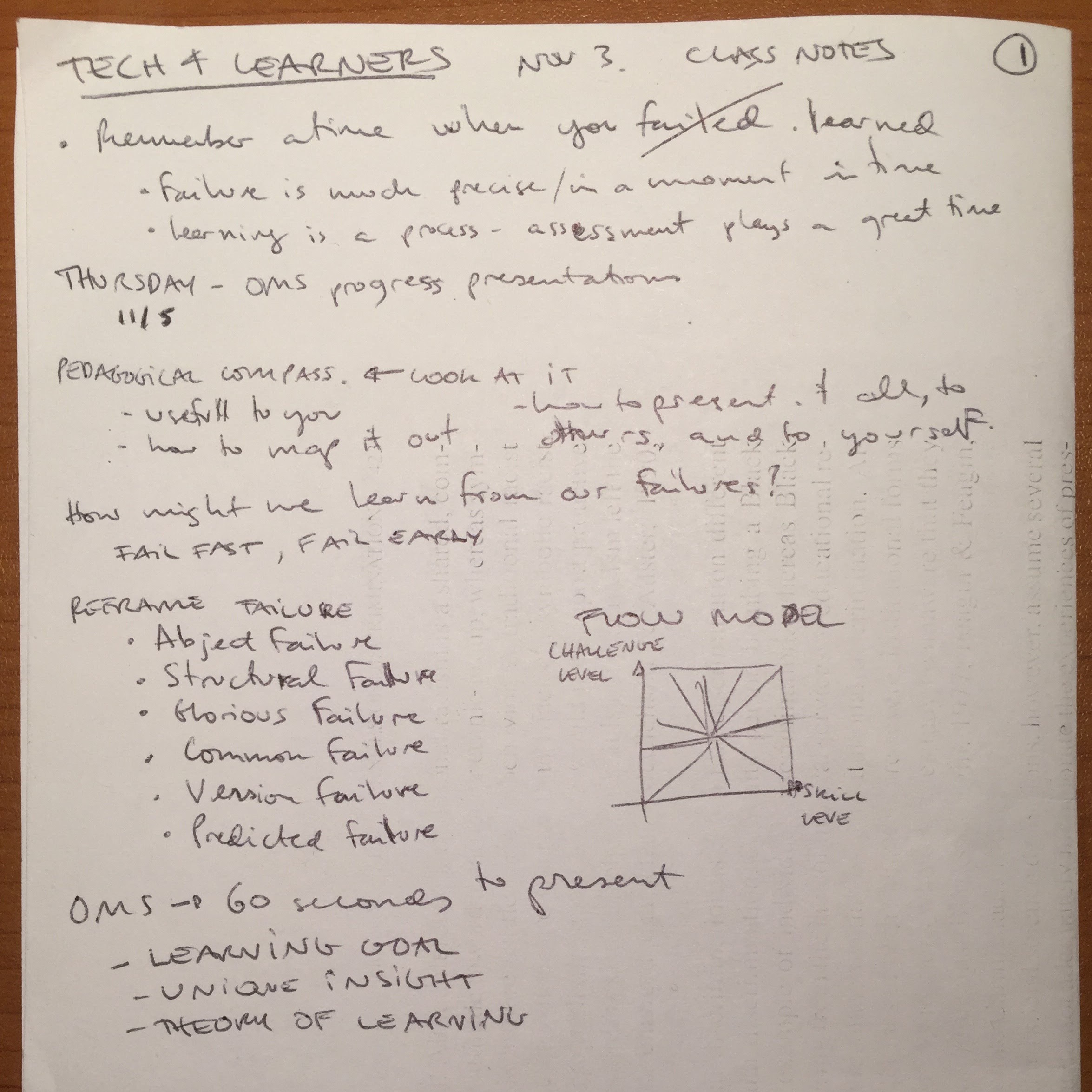

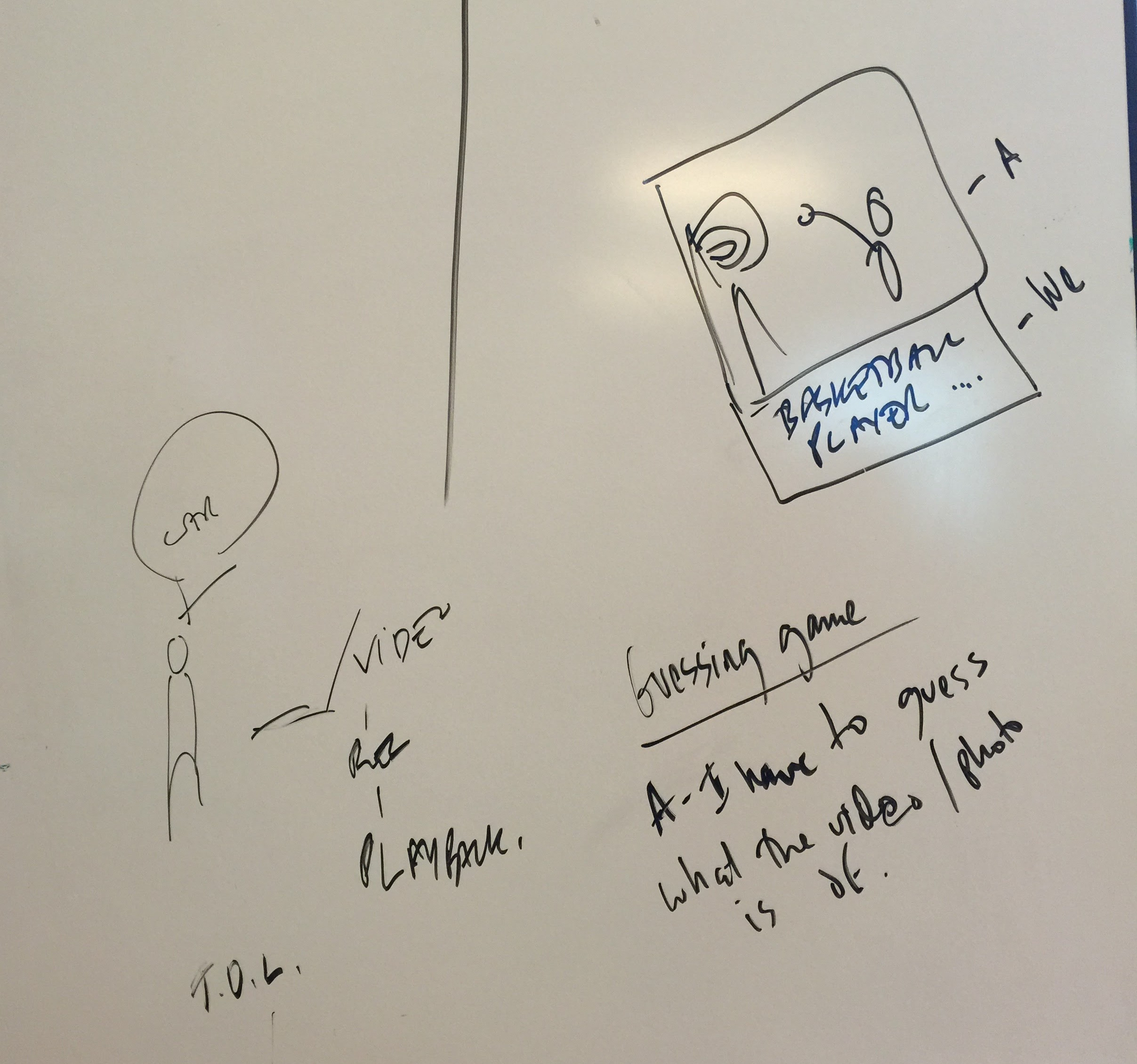

- First, we want to build on our previous video narration idea. We also want to incorporate the protégé effect: while Achu might not always find it natural to speak for himself, he might find it compelling to speak to someone else or to help someone else.

For this idea, we are creating a character who will introduce themselves to Achu and explain that they absolutely need Achu’s help to describe what is on the screen below because they cannot see. First, they will ask Achu to describe a picture, and then a video.

This is meant to be a scaffolded exercise, and we keep the idea from previous prototypes of recording Achu and playing the recording back to him.

- Based on Marina’s feedback, we want to build on Achu’s strengths, one of which is his ability to put puzzle pieces together really well. We are designing a game that requires Achu to put together sentences, where each word is on a puzzle piece that only fits with the others in certain ways.

- We want to test out an existing cat app with Achu. The app is popular among children: you speak to it, it records your voice, and the cat plays your words back to you. Beyond the entertainment value, we want to see whether this might work as a warm-up exercise for Achu to generate more words. We also want to test our hypothesis that hearing his own words played back will give Achu a better sense of their value.