And here we go!! 10 weeks of educational intensity 🙂

Author Archives: lucaslongo

LDT Seminar – Final – Fall Quarter Reflection

Assignment

- Evaluate your own contributions to seminar based on this rubric.

- Explore your own learning inside and outside of class in a brief reflection paper (1-2 pages).

| | Below Expectations | Meets Expectations | Exceeds Expectations |
|---|---|---|---|
| Attendance* | Misses two or more seminars. Comes late or leaves early. Does not inform instructor of absence in advance. | Attends all of seminar, or misses one, with very good excuse (e-mailed to instructor ahead of time). Always on time. | Organizes extra learning opportunities for other learners. |
| Assignments** | Assignments are late, incomplete, or poorly executed. | Assignments are turned in on time. All outside work is turned in on time (or ahead of time). Assignments address the assignment components, but appear rushed or have errors. | In-class and out-of-class assignments are completed thoughtfully and thoroughly. In out-of-class work, attention is paid to content, spelling, grammar, and flow. |
| Participation | Rarely speaks, or rarely listens. Carries on side conversations or other off-topic activities (for example on the computer). | Mostly listens, but speaks sometimes. Or mostly speaks, but listens sometimes. | Speaks and listens actively in class. Builds on the ideas of others. Challenges own thinking and that of others. Seeks to make connections between concepts in class and to outside experiences. |

Response

Self-evaluation:

Attendance

Meets Expectations – Attends all of seminar, or misses one, with very good excuse (e-mailed to instructor ahead of time). Always on time.

I did not rate myself “Exceeds Expectations” (“Organizes extra learning opportunities for other learners”) because I did not make the time or the effort to help my peers. Had I been aware of this criterion at the beginning of the quarter, I might have tried to do so. Looking back, I had the opportunity to teach Reuben some iOS development, which he had expressed interest in, but I simply did not have the time. I expect that next quarter I may not have the time either, but I will make an effort to be more aware of these teaching and knowledge-sharing opportunities. On the other hand, I’ve maintained a blog (www.lucaslongo.com) where I recorded all of my reading and class notes as well as all the assignments I created during the quarter. Although the blog’s main purpose was to document my work and serve as a repository for future reference, I believe my peers could also benefit from these notes.

Assignments

Exceeds Expectations – In-class and out-of-class assignments are completed thoughtfully and thoroughly. In out-of-class work, attention is paid to content, spelling, grammar, and flow.

With the exception of three of the four final papers for Tech 4 Learners, all my assignments were turned in on time and thoughtfully created. I must say that I have never studied so attentively or engaged so deeply with the content in an educational setting.

Participation

Exceeds Expectations – Speaks and listens actively in class. Builds on the ideas of others. Challenges own thinking and that of others. Seeks to make connections between concepts in class and to outside experiences.

My participation in class is one of the ways I learn. Voicing my ideas and thoughts in class helps me validate my understanding of the content. I see it as a technique for engaging with the content and pushing myself to challenge what I already know. I honestly cannot remember a class in which I did not make a contribution.

Explore your own learning inside and outside of class in a brief reflection paper (1-2 pages):

These past 10 weeks of classes have been the most intense learning experience I’ve ever had. The quarter system provides a sense of urgency and speed in absorbing the material that a semester system lacks. There is no opportunity to catch up: if you let the ball drop, it seems impossible to recover. The fact that I was taking 18 units spread across 7 classes also contributed to this feeling of a massive knowledge dump into my brain. Yet I feel that the teaching quality and the pedagogical level of the course delivery were key in making this all possible. I rose to the challenge and am definitely more knowledgeable after this quarter.

One of the main reasons I chose to come to Stanford for the LDT Master’s program was to understand how education really works: how we learn, how to teach, and what one must consider when diving into the complex task of education. This quarter showed me that I came to the right place. It also showed me that education is much more complex than I had realized. My respect for K-12 teachers grew exponentially as the quarter went by, along with my amazement and incredulity that the profession is not valued as the most challenging of them all. How can such a vital role in our society be undervalued in most cases? Why are teachers, who have the most profound effect on our children’s future, among the lowest-paid professionals? Education is the one thing that no one can take away from us.

Reflecting on each course I took, I can say that each minute spent in the classroom, each line of text read, each group discussion, and each assignment completed added to what I wanted to learn and to the way I see the world. Let’s go through each course to illustrate the main takeaways:

Topics in Brazilian Education

Even though I was born and raised in Brazil, I never went through the Brazilian educational system. When I was 3 years old, I started attending the American school in São Paulo. When I was 12, I moved to Italy, where I attended the British school in Milan. Back in São Paulo a year later, I continued at the British school there. Undergraduate studies: Rensselaer in Troy, NY. Graduate school: NYU. Now, Stanford.

This left a gap in my knowledge of the Brazilian educational system and its history. This class was an eye-opener regarding what has happened in Brazil’s recent history and what still needs to be done. Even though it was the least organized of all my courses this quarter, it showed me that public education in Brazil is an afterthought for the government. Huge investments were made in higher education, while K-12 was marginalized. The feeling is that kids go to school to be fed and so that their parents can go to work and receive financial aid from the government.

I now understand why the Lemann Fellowship exists. Its stated mission is to improve Brazilian public education by providing funds to those who get into the top schools in the world. It previously seemed like an altruistic gesture, but it is really more of a pressing necessity for Brazil.

Introduction to Teaching

This course presented me with the formal techniques and considerations teachers must attend to in their profession. I was amazed at how complex teaching really is, especially at the K-12 level, where teachers must not only have pedagogical content knowledge (PCK) but also differentiate between students’ cognitive levels and cultural backgrounds, manage classroom behavior and dynamics, and perform formative assessment continuously, all at the same time. Teachers are my new heroes. Lampert’s “Teaching Problems and the Problems of Teaching” shows how complex teaching simple division can be. It comes down to the choice of which numbers to present in an exercise. It requires planning, ongoing evaluation, and constant thinking on your feet to ensure the learning objectives are met.

The wealth of terminology learned in this class was also extremely helpful. I knew nothing about teaching before this class. I must confess I had either never heard of, or did not know the full meaning of, the terms we covered: didactic/direct instruction, facilitation, coaching, ZPD, transfer, metacognition, prior knowledge, scaffolding, APA Style, Bloom’s Taxonomy, modeling, guided practice, PCK, differentiation, formative assessment, summative assessment, the black box, teaching to the test, learning progression, rubric, formal and informal learning environments, funds of knowledge, and teacher professional development. Wow… I can’t believe how much I’ve learned from this one class. Truly amazing.

Tech 4 Learners

The main takeaway from this class was the danger of the technocentric view of education, which I must admit I suffered from. I came to LDT with the notion that I would be able to put all of my school’s content online and would only need the teacher when the course content had to be updated. This course showed me that a human teacher and human peers interacting in real life are essential for effective learning to take place. I now clearly see that MOOCs by themselves are not the way to go: there must be a component of human interaction, of peer communication, and of timely commitment towards a final learning objective.

This class also gave me the opportunity to work once again with children with special needs. While at ITP, I took a course called “Inclusive Game Design,” in which we created a game for a child with cerebral palsy. It was one of the most rewarding experiences I’ve had in designing a tool. Seeing the child interact with the game in the way we intended was simply breathtaking. The experience repeated itself in this class, where our rapid prototypes evolved and adapted toward our goal of helping our learner.

Terminology and concepts acquired from this class: backward design, technocentrism, growth mindset and the perils of praise, four-phase model of interest development, joint media engagement, the protégé effect, and tangible user interfaces.

Understanding Learning Environments

This course provided me with the foundations of learning theory and cognitive development, along with the main theorists of our time. The most interesting concept for me was Lave & Wenger’s Legitimate Peripheral Participation and the notion that learning is what happens in the interaction of masters, apprentices, their actions, and the environment or context in which they are situated. It was interesting to see how much education is based on psychology, philosophy, and cognitive development, something I now see as obvious but would have asked someone to elaborate on in the past. Reading even a little of Skinner, Piaget, Montessori, Vygotsky, Dewey, Freire, and several others gave me the confidence to talk about education in a more meaningful manner.

Introduction to Qualitative Research Methods

This was yet another course that presented me with completely new knowledge. As an engineer who has worked with software for most of my life, I never had research as part of my work, let alone qualitative research. My initial reaction to this course was “wow, I can get a job that entails observing the real world in extreme detail and then writing about it in the most interesting manner possible!?” I was thrilled to learn that this kind of research even existed. It gave me a framework for looking at the world, understanding bias, creating interview questions, and capturing, analyzing, and presenting data. It made me think about writing effectively based on evidence, creating propositions, elaborating theories, and extracting meaning.

Key concepts: I as a camera, turtles all the way down, grounded theory, probing, coding, propositions and validity.

LDT Seminar

This course made me reflect primarily on the reasons why I came to LDT. What is the problem I want to look at? Is it a real problem? Does it matter? How do I define the problem? It taught me the importance of reading research, how to do research, and how to follow reference sections for further reading. It made me talk to experts and learners to understand what has already been done and what still needs to be done.

It also made me appreciate my diverse and profoundly interesting cohort, and how much everyone brings to the table. It left me wanting to know them better and more deeply. It showed me that we can’t always do it ourselves and that collaborating can generate something invariably greater than the sum of its parts. It made me think about my role in society and in the immediate community I am living in.

Human-Computer Interaction Seminar

This course had the least impact on me this quarter, simply because of its lecture format, with no group discussion or interaction beyond quick Q&A sessions at the end of each talk. The quality of the lecturers and the content presented was amazing, though. The most memorable talks were:

- Wendy Ju: Transforming Design: Interaction with Robots and Cars

- Janet Vertesi: Seeing Like a Rover: Visualization, embodiment, and teamwork on the Mars Exploration Rover mission

- Sean Follmer: Designing Material Interfaces: Redefining Interaction through Programmable Materials and Tactile Displays

All in all, this was an intense quarter that presented me with a wealth of knowledge I had never experienced before. I am extremely pleased with my decision to come to LDT and am eager for the next three quarters. I always say that I could stay in school forever. Somehow I feel that it is up to me to find a way to do so: perhaps not by getting a third master’s degree or diving into a PhD (for now), but by getting involved in a company, research group, or organization where my thirst for learning is continuously fed.

Tech 4 Learners – Final – Learning Tool Evaluation

Assignment

Choose any digital learning tool currently on the market. Explore it, poke at it, twist it and see if you can break it (in a pedagogical sense, not a technical one). When you have a good sense of what it does, write a description of the tool, including the intended learners, content, and approach to learning. What are its strengths and weaknesses? How should it be evaluated? How could it be improved or extended? 2-3 pages

Response

Synopsis

Udemy is an online course marketplace whose mission is to “help anyone learn anything,” according to its website, which also states that every course is “available on-demand, so students can learn at their own pace, on their own time, and on any device.” The platform caters to learners and businesses, offering over 35,000 courses ranging from photography to mobile development. At its core, Udemy offers an online course publication tool that allows instructors to create their courses and sell them both on Udemy’s marketplace and on the instructor’s own website. The instructor sets the selling price and shares the revenue with Udemy at different rates, depending on where the sale originated: the instructor keeps 97% of the revenue if the sale originated from their own website and 50% if it originated from Udemy’s website.
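To make the revenue split concrete, here is a minimal Python sketch using the two percentages quoted above (97% for sales originating on the instructor’s own site, 50% for sales originating on Udemy’s marketplace). The dictionary keys, the function name `instructor_payout_cents`, and the $20.00 price are hypothetical, and Udemy’s actual terms may differ or have changed since this was written. Amounts are kept in integer cents to avoid floating-point rounding.

```python
# Instructor revenue shares as stated in this post (hypothetical keys;
# Udemy's real terms may differ or have changed).
INSTRUCTOR_SHARE_PERCENT = {
    "instructor_site": 97,  # sale originated on the instructor's own website
    "udemy_site": 50,       # sale originated on Udemy's marketplace
}


def instructor_payout_cents(price_cents: int, origin: str) -> int:
    """Return the instructor's cut of one sale, in whole cents (rounded down)."""
    percent = INSTRUCTOR_SHARE_PERCENT[origin]
    return price_cents * percent // 100


if __name__ == "__main__":
    price = 2000  # a hypothetical $20.00 course, in cents
    for origin in INSTRUCTOR_SHARE_PERCENT:
        print(origin, instructor_payout_cents(price, origin))
```

For a $20.00 course, the same sale is worth $19.40 to the instructor when it comes through their own site and $10.00 when it comes through Udemy’s marketplace.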

Learning

Besides the obvious focus on students, Udemy places significant focus on instructors, offering several resources to help them create courses. To start with, Udemy offers a free course, “How to Create Your Udemy Course,” which is delivered through the platform itself. A support website also offers several articles, such as “Getting Started: How do I create my Udemy Course?”. A closed Facebook group is also available for instructors to share experiences, get help, and learn from each other. These resources focus on planning, producing, publishing, and promoting the instructor’s courses.

The designers seem to believe that instructors, as learners of the tool, need to understand how teaching online differs from teaching in a classroom. The support material focuses on guiding instructors on best practices, media quality, and the pedagogical styles that work best in this environment. In addition to these resources offered before a course is created, Udemy enforces a review process once the course is ready. This process entails a detailed inspection of the quality of the media, the organization of the course content, and the frequency of the different media used. For example, a course consisting only of text, only of slides, or only of videos will be rejected. A mix of media, quizzes, and presentation styles is therefore valued by Udemy as essential for the learners (students) to succeed.
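The one review rule spelled out above (a course made only of text, only of slides, or only of videos is rejected) can be sketched as a simple check. This is a hypothetical simplification in Python: `review_media_mix` and the media labels are illustrative names, not part of any Udemy API, and the real review also weighs media quality and content organization, which are not modeled here.

```python
from collections import Counter


def review_media_mix(lecture_types: list[str]) -> tuple[Counter, bool]:
    """Count each media type in a course and flag single-medium courses.

    Hypothetical simplification: encodes only the rule quoted in the post,
    treating any course that uses a single media type as rejected.
    """
    counts = Counter(lecture_types)  # frequency of each media type
    passes = len(counts) >= 2        # at least two distinct media types
    return counts, passes


if __name__ == "__main__":
    print(review_media_mix(["video", "video", "quiz", "slides"]))
    print(review_media_mix(["video", "video", "video"]))
```

Returning the counts alongside the verdict mirrors the post’s point that reviewers look at how often each medium is used, not only whether more than one appears.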

Content

Judging by the content presented, the designers see course planning and digital literacy as the main barriers to developing an understanding of the subject matter. Starting by guiding instructors on learning objectives and general course planning, the designers offer basic pedagogical knowledge. Moving on to the production of the course, they offer detailed instructions and specifications on audio and video size and quality, as well as filming and editing tips. Publishing instructions are also offered, guiding instructors on pricing strategies, free course previews, and other ways to make the course more attractive to students. Finally, Udemy provides suggestions on how to drive sales of the course.

Following the content on creating the course, Udemy continues with guides on how to use the tools offered on its digital platform. A strict “Course Quality Checklist” is presented, as well as the “Udemy Studio Code of Conduct,” which details what is allowed, what is encouraged, and what is frowned upon. Interestingly enough, the last section of the “Udemy Teach” area of the site covers “Coding Exercises,” explaining how to create exercises and how to validate and check students’ code, with a few example exercises for JavaScript, HTML, and CSS. This suggests that online courses tend to be heavily geared toward programming. My personal guess as to why: programming instructors and students have higher digital literacy and are more comfortable with technology. It is probably as hard to find a tech-savvy yoga instructor who publishes an online course as it is to find a yoga student looking for a strictly online course on Udemy. A quick search shows 155 yoga courses versus 557 ‘programming’ courses and 683 ‘development’ courses. Times are changing.

Technology

The features the designers are leveraging in this implementation revolve around cloud storage and Ajax. Cloud storage means that all the content, including videos, is uploaded to Udemy’s platform and stored in its environment at no extra charge to the instructor. This gives Udemy complete control over the content and delivery quality of the courses. Ajax is a ‘modern’ technique for building web pages that load content dynamically, so that, for example, elements can be dragged into place and new sections added without reloading the page. This provides a fluid and intuitive interface that makes the job of creating course content actually pleasurable.

On the student’s end, the interface is also intuitive, clean, and easy to use. Each section of the course is presented without distractions and provides clear actionable items to control the playback.

Assessment

The success of this tool is publicized on their web page with numbers such as 9 million students, 35 thousand courses, 19 thousand instructors, 35 million course enrollments, 8 million minutes of video content, and 80 languages. Although these are all big numbers by any standard, I would also be interested in looking at the following numbers:

- Growth rate of the number of instructors joining the platform

- Time between account creation and course publication

- Average number of courses published per instructor

- Average revenue per course

- Course completion rates by students

I would also be very interested in interviewing instructors who have published courses on other platforms to understand what Udemy’s course publication tool is doing right or wrong. From personal trial and error, I’ve found Udemy’s interface the easiest to use and the one that provides the most scaffolding for the instructor. Their review process is also extremely helpful, with attention to the smallest details, showing that actual humans are reviewing the course content. This ensures course quality for students, gives the company a high level of credibility, and shows its care for the learner.

Evaluation

Scale: 0 – Absent, 1 – Minimal, 2 – Strong, 3 – Exemplary

The tool is making effective use of unique features of this technology.

2 – Strong: Udemy uses the latest HTML techniques to provide a good user experience. I would have judged it exemplary if there were a drawing tool embedded in the platform, something like a whiteboard that would record my strokes and voice-over from within the tool.

The features of the tool demonstrate an understanding of the target learner.

3 – Exemplary: Udemy’s course publication tool is set up to ask the instructor for information in a structured and familiar manner, using terminology commonly used by teachers, such as course goals and course summary, along with other features one would expect in a pedagogical tool.

The design of the tool suggests an understanding of the challenges unique to learning the target content.

3 – Exemplary: Udemy’s wide variety of content, tools, and possible interactions among instructors shows great care toward the main driver in education: the instructor. They understand that teachers, educators, and subject matter experts may not have all the TPACK (technological pedagogical content knowledge) necessary to become online instructors. To supplement this, they try to provide content in various formats, with several examples and support for them.

Tech 4 Learners – Final – Pedagogical Compass

Assignment:

It is important that you:

- provide some explanation to the user of the compass as to what it means,

- reflect on the relationships between the different concepts,

- provide references (citations) to scholarship, so that users of the compass can pursue further enlightenment,

- articulate which way is “North” to you, and why?

Response:

Tech 4 Learners – Final – Advice to a Future Learning Tool Designer

“Activities, tasks, functions, and understandings do not exist in isolation; they are part of broader systems of relations in which they have meaning. These systems of relations arise out of and are reproduced and developed within social communities, which are in part systems of relations among persons. The person is defined by as well as defines these relations. Learning thus implies becoming a different person with respect to the possibilities enabled by these systems of relations. To ignore this aspect of learning is to overlook the fact that learning involves the construction of identities.” (Lave and Wenger, 1991, Ch. 2)

Regardless of your current profession and experience, you have been impacted by education and technology. As our society progresses, we must think about how we might deliver the best educational content, implement the most effective teaching methodologies (pedagogy), and utilize tools that engage both learners and educators in meaningful learning experiences. Education is one of the most complex issues in our society and has been since the beginning of civilization. Without education, how does a community, a company, a country, or the human race progress? This paper, along with the Pedagogical Compass (https://prezi.com/zgdhgwrlealw/), will present an overall view of who the stakeholders are, how education happens for educators, how learning happens, and what we might select as relevant content for the future.

Even if you are not directly involved in education, you have certainly faced the need to teach someone, explain how something works, train a new employee, or present your research, your work, or your thoughts. With this in mind, we propose to look at educational tools through a set of lenses that might provide an encompassing view when designing effective learning tools. The Pedagogical Compass looks at what we teach (North), how we teach (South), how we learn (East), and who we learn from (West). Through these four cardinal points, we hope to stimulate your thinking, drawing on current research, learning theories, and experiments done in the field.

When user experience designers evaluate a tool, they generally consider its graphical layout, the affordances it provides, its usability or ease of use, and finally the service and/or outcome it offers. Game designers go a step further by looking at how the user repeatedly engages with the tool, at reward systems, and at how the gamer learns and progresses through the gameplay. One effective framework is the “Core Loop,” which looks at every step of engagement one has within a game. It involves a cycle that starts with 1) assessing the current scenario, 2) choosing the correct action, 3) aiming the action appropriately, 4) launching the action, and 5) being rewarded (or not) by its consequences. Once rewarded, you go back to step 1, where you assess your next move. By identifying the elements in each of the loop’s nodes, we are able to better visualize the process and hopefully improve it. What happens between these nodes should also be considered, since it determines how quickly the loop cycles.
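The five-step cycle above can be sketched as a small state machine in Python, where the step after REWARD wraps back to ASSESS. The names `CoreLoopStep`, `next_step`, and `trace` are hypothetical, chosen only for illustration; the sketch captures the ordering of the steps, not what happens inside or between them.

```python
from enum import Enum


class CoreLoopStep(Enum):
    """The five steps of the game-design Core Loop, in order."""
    ASSESS = 1  # assess the current scenario
    CHOOSE = 2  # choose the action
    AIM = 3     # aim the action appropriately
    LAUNCH = 4  # launch the action
    REWARD = 5  # be rewarded (or not) by the consequences


def next_step(step: CoreLoopStep) -> CoreLoopStep:
    """Return the step that follows `step`; REWARD wraps back to ASSESS."""
    return CoreLoopStep(step.value % len(CoreLoopStep) + 1)


def trace(start: CoreLoopStep, n: int) -> list[str]:
    """List the names of `n` consecutive steps, beginning at `start`."""
    names = []
    step = start
    for _ in range(n):
        names.append(step.name)
        step = next_step(step)
    return names


if __name__ == "__main__":
    # Seven steps: one full cycle plus the start of the next one.
    print(trace(CoreLoopStep.ASSESS, 7))
```

Mapping the same steps onto a learner (assess what is known, choose a study approach, aim at the right content, engage with it, and be rewarded by understanding) only changes the comments, not the structure of the loop.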

This approach can be particularly useful in designing a learning tool. The learner, when engaging with new content or knowledge that must be acquired, will first assess what is known, what resources are available, and what needs to be achieved. The second step is to choose an approach to absorbing the content, such as reading, taking notes, or discussing the subject matter with colleagues. Once the action is chosen, the learner must aim at the appropriate content to engage with, launch the action, and finally be rewarded by learning, understanding, and/or comprehending the content. We then return to the first step: we assess once again what we know and what we should do, apply it, take action, and are rewarded by the results. Yet designing a learning tool is not limited to the learner’s core loop. Learning happens to someone, within a social and cultural context set up by a teacher, guide, or environment, and through the interactions of these elements.

Going back to our Pedagogical Compass, let’s first look at what we teach. Is it useful to teach quantum physics to a learner whose talents lie primarily in the artistic realm? Will certain content help someone get a job or function better in society? It seems more than plausible to “focus what and how we teach to match what people need to know” (NETP, 2010). Therefore, when designing a learning tool, we must first consider the ultimate goal: the learning objectives and outcomes. This approach, known as backward design, is described by Walters & Newman (2008):

“This backward approach encourages teachers and curriculum planners to first think like an assessor before designing specific units and lessons, and thus to consider up front how they will determine whether students have attained the desired understandings.” (Walters & Newman, 2008)

“One starts with the end—the desired results (goals or standards)— and then derives the curriculum from the evidence of learning (performances) called for by the standard and the teaching needed to equip students to perform.” (Walters & Newman, 2008)

By defining in advance what evidence will show that the intended learning has taken place, we might do a better job of designing and refining each step and activity along the learning/teaching experience.

Now that we have our learning plan in place, how might we effectively transmit it to our learners? How do we teach more effectively? Can we simply use technology to do so? Can we eliminate the teacher from the process? This technocentric approach, in which one believes that technological tools alone will transfer knowledge to students, is widely criticized. Yet technology makes us rethink education and the role of the teacher in a more profound way:

“Combating technocentrism involves more than thinking about technology. It leads to fundamental re-examination of assumptions about the area of application of technology with which one is concerned: if we are interested in eliminating technocentrism from thinking about computers in education, we may find ourselves having to re-examine assumptions about education that were made long before the advent of computers. (One could even argue that the principal contribution to education made thus far by the computer presence has been to force us to think through issues that themselves have nothing to do with computers.) ” (Papert, 1987)

Therefore, we must look not only at the tool but also at how we use it and how we interact with learners as they engage with the content. Learning is a continuous process, an acquired skill that leverages further and future learning: learning how to learn, and learning that learning is possible. One is not born with a certain, immutable level of intelligence. Believing in this fixed notion of intelligence is potentially harmful and discourages learners from putting effort into the task. If learners believe that progress is not possible, it becomes a self-fulfilling prophecy. Simply praising or criticizing one’s ‘intelligence level’, instead of nurturing the process of learning, may prevent learners from developing a ‘growth mindset’ and from pursuing a self-guided interest in developing their knowledge:

“I think educators commonly hold two beliefs that do just that. Many believe that (1) praising students’ intelligence builds their confidence and motivation to learn, and (2) students’ inherent intelligence is the major cause of their achievement in school. Our research has shown that the first belief is false and that the second can be harmful—even for the most competent students. ” (Dweck, 2007)

“Understanding that interest can develop and that it is not likely to develop in isolation is essential. Further articulating the contribution of interest to student learning and its relation to other motivational variables has potentially powerful implications for both classroom practice and conceptual and methodological approaches to the study of interest. ” (Hidi & Renninger, 2006)

Now that we have touched on what we should learn and how we might teach it, we can look at how we actually learn. Learning is a natural and innate process. We learn how to speak, how to walk, how to interact with our environment, and how to behave in society. We learn not only in formal environments such as schools and training centers, but also through our interactions with others. If we look at children playing video games, research shows that they are naturally learning how to play the game, how to collaborate, and how to interact with each other with the goal of enriching their experience:

“For these reasons, we do not appeal to the games-are-highly-motivating explanation, but we do see a reason that young people play games and get them tangled up with the rest of their lives, and this reason is cultural. The phrase that best helps us explain it comes from one of our participants, Mikey, who in talking about games said, “It’s what we do.” The “we” he was referring to was kids these days, the young people of his generation.” (Stevens, Satwicz, McCarthy, 2008)

Another powerful concept is that we learn by teaching. What better way to understand a concept than to explain it to someone else? Not only must we use our metacognition to identify the key elements, but we must also articulate them clearly so that others can grasp the knowledge at hand. On top of that, humans seem naturally inclined to care more about helping others than about helping themselves. Teaching others taps into an increased sense of responsibility and engagement with the content, a phenomenon called the protégé effect. The research looked at how children taught a Teachable Agent (TA) and how this affected their own learning of the content.

“We then introduce TAs, which combine properties of agents and avatars. This sets the stage for two studies that demonstrate what we term the protégé effect: students make greater effort to learn for their TAs than they do for themselves.” (Chase, Chin, Oppezzo, Schwartz, 2009)

“Given our hypothesis that the protégé effect is due to social motivations, we would expect students in the programming condition to be less inclined to acknowledge ” (Chase, Chin, Oppezzo, Schwartz, 2009)

Finally, but no less importantly, we should look at who we learn from, beyond the teacher in its most traditional definition. Research confirms what common knowledge suggests: we learn from our peers, from our environment, and from the media we consume and the interactions we engage in while doing so. This is Joint Media Engagement, the new coviewing (Takeuchi & Stevens, 2011):

“The variety of ways that we saw young people arrange themselves to play games surprised us, especially since most of these ways were interpersonally and emergently organized by the young people themselves.” (Stevens, Satwicz, McCarthy, 2008)

“Parents, teachers, and other adults may wish to share educational resources with their children, but teaching with media and new technologies doesn’t always come naturally, not even for experienced instructors. Provide guidance for the more capable partner in ways that don’t require a lot of prior prep or extra time, actions that can help ensure that the intended benefits of the resource are realized.” (Takeuchi & Stevens, 2011)

With this in mind, the role and actions of the teacher are greatly expanded and complicated, since the teacher must consider not only what is happening inside the classroom but also what is happening outside it. Engaging students, and triggering and maintaining their interest in the content, is a great challenge that can be modeled by the Four-Phase Model of Interest Development (Hidi & Renninger, 2006). The model looks closely at how interest progresses from an initial, casual level of engagement to a deeper involvement with the subject matter. Within it, the teacher’s role is to foster positive feelings towards the content, generate curiosity to encourage further research, provide opportunities for learning by offering content and pointers towards meaningful resources, and guide research to enable learning progression. With this support, a learner’s interest moves from Triggered Situational Interest to Maintained Situational Interest to Emerging Individual Interest and finally to Well Developed Individual Interest.

Designing learning tools might be the most complex challenge our society faces, and not only from a pedagogical standpoint. We must consider the scalability of teaching, content relevance, socio-cultural implications, cognitive developmental stages, interaction with peers, policy, assessments, teacher professional development, costs, and implementation, to list a few. We invite you to become part of this ever-evolving field: take on the challenge of creating a better future for humanity, and develop, implement, and research how we might help spread knowledge across the world in an effective, considerate, and meaningful way. We need designers, teachers, engineers, developers, psychologists, philosophers, doctors, lawyers, leaders, and anyone with the desire and drive to share knowledge and improve the tools we have to do so.

Tech 4 Learners – Final – Notes

Reading Notes

National Education Technology Plan

- Focus on technology but need to use it for PD

- Focus Areas:

- Learning

- Assessment

- Teaching

- Infrastructure

- Productivity

Understanding by Design

- Backwards design or backwards planning

- Clear learning objectives

- How could we incorporate game design practices into education?

Computer Criticism vs. Technocentric Thinking

- Ed Tech is not the silver bullet – must come with pedagogy and PD

In-Game, In-Room, In-World

- Kids learn plenty from each other

- Kidification of education

The Perils and Promises of Praise

- Growth mindset

- Constructive praise – praise effort and process, not ability itself (e.g., “you’re so smart!”)

Four-Phase Model of Interest Development

- Model

- Triggered Situational Interest

- Maintained Situational Interest

- Emerging Individual Interest

- Well Developed Individual Interest

- Teacher’s interest is probably best predictor of effective teaching

- Teacher’s role is to provide:

- Positive feelings

- Generate curiosity

- Provide opportunities

- Guide on research

The New Coviewing: Joint Media Engagement

- Design Guide

- Mutual engagement

- Dialogic inquiry

- Co-creation

- Boundary crossing

- Intention to develop

- Focus on content, not control

- Challenges

- Parents too busy

- Parents unaware of needs

- Don’t enjoy the same content

- Desired interactions not always triggered

- Little continuity into other family activities

- Distractions are always present

- Design principles

- Kid driven

- Multiple planes of engagement

- Differentiation of roles

- Scaffolds to scaffold

- Transmedia storytelling

- Co-creation

- Fit

- “What goes on between people around media can be as important as what is designed into the media”

Teachable Agents and the Protégé Effect

- Care more about pleasing others than oneself, so having someone you need to help enhances learning through teaching this person

Tangible Bits: Beyond Pixels

- Tangible User Interfaces

Horizon Reports

- re-teaching our teachers how and what to teach

Paper Planning

Pedagogical Compass

North – what we teach

- Content relevance

- “focus what and how we teach to match what people need to know ” (NETP, 2010)

- “It leverages the power of technology to provide personalized learning and to enable continuous and lifelong learning. ” (NETP, 2010)

- “Build tools and experiences that revolve around a child’s existing interests, not just prescribed topics. To do so, producers need to design mechanisms that make children’s interests visible and can assist adults in responding to them.” (Takeuchi & Stevens, 2011)

- “Joint media engagement can be a useful support for developing literacy, including basic reading ability, cultural literacy, scientific literacy, media literacy, and other 21st century skills.” (Takeuchi & Stevens, 2011)

- Assessment

- “technology-based assessments can provide data to drive decisions on the basis of what is best for each and every student and that, in aggregate, will lead to continuous improvement across our entire education system. ” (NETP, 2010)

- “This backward approach encourages teachers and curriculum planners to first think like an assessor before designing specific units and lessons, and thus to consider up front how they will determine whether students have attained the desired understandings.” (Walters & Newman, 2008)

- Teacher’s interest

- Teacher’s interest is probably best predictor of effective teaching – Lucas

South – how we teach

- Teacher Professional Development

- “Professional educators are a critical component of transforming our education systems, and therefore strengthening and elevating the teaching profession is as important as effective teaching and accountability. ” (NETP, 2010)

- Curriculum construction

- Backwards design or backwards planning – Clear learning objectives

- “One starts with the end—the desired results (goals or standards)— and then derives the curriculum from the evidence of learning (performances) called for by the standard and the teaching needed to equip students to perform. ” (Walters & Newman, 2008)

- Using technology wisely

- “Assigning roles to participants so that tasks and content match up to individual maturity is another way of ensuring that everyone is suitably challenged and/or entertained.” (Takeuchi & Stevens, 2011)

- “Parents, teachers, and other adults may wish to share educational resources with their children, but teaching with media and new technologies doesn’t always come naturally, not even for experienced instructors. Provide guidance for the more capable partner in ways that don’t require a lot of prior prep or extra time, actions that can help ensure that the intended benefits of the resource are realized.” (Takeuchi & Stevens, 2011)

- “Mark Weiser’s seminal paper on Ubiquitous Computing [54] started with the following paragraph:

“The most profound technologies are those that disappear. They weave themselves into the fabric of everyday life until they are indistinguishable from it.”

I do believe that TUI is one of the promising paths to his vision of invisible interface.” (Ishii, 2008)

- “Combating technocentrism involves more than thinking about technology. It leads to fundamental re-examination of assumptions about the area of application of technology with which one is concerned: if we are interested in eliminating technocentrism from thinking about computers in education, we may find ourselves having to re-examine assumptions about education that were made long before the advent of computers. (One could even argue that the principal contribution to education made thus far by the computer presence has been to force us to think through issues that themselves have nothing to do with computers.)” (Papert, 1987)

- Student feedback

- Praise effort and not ability:

- “I think educators commonly hold two beliefs that do just that. Many believe that (1) praising students’ intelligence builds their confidence and motivation to learn, and (2) students’ inherent intelligence is the major cause of their achievement in school. Our research has shown that the first belief is false and that the second can be harmful—even for the most competent students. ” (Dweck, 2007)

- “Maybe we have produced a generation of students who are more dependent, fragile, and entitled than previous generations. If so, it’s time for us to adopt a growth mind-set and learn from our mistakes. It’s time to deliver interventions that will truly boost students’ motivation, resilience, and learning. ” (Dweck, 2007)

- Managing motivation and student interest

- “In fact, teachers often think that students either have or do not have interest, and might not recognize that they could make a significant contribution to the development of students’ academic interest (Lipstein & Renninger, 2006)” (Hidi & Renninger, 2006)

- “In general, findings from studies of interest suggest that educators can (a) help students sustain attention for tasks even when tasks are challenging—this could mean either providing support so that students can experience a triggered situational interest or feedback that allows them to sustain attention so that they can generate their own curiosity questions; (b) provide opportunities for students to ask curiosity questions; and (c) select or create resources that promote problem solving and strategy generation. ” (Hidi & Renninger, 2006)

- “Understanding that interest can develop and that it is not likely to develop in isolation is essential. Further articulating the contribution of interest to student learning and its relation to other motivational variables has potentially powerful implications for both classroom practice and conceptual and methodological approaches to the study of interest.” (Hidi & Renninger, 2006)

- Trends (NMC Horizon Reports)

- Blended Learning

- Open Educational Resources

- Digital Literacy

- Integrating Technology in Teacher Education

- Rethinking the Roles of Teachers

East – how we learn

- Access to education

- “The underlying principle is that infrastructure includes people, processes, learning resources, policies, and sustainable models for continuous improvement in addition to broadband connectivity, servers, software, management systems, and administration tools.” (NETP, 2010)

- Growth mindset

- “Other students believe that their intellectual ability is something they can develop through effort and education. They don’t necessarily believe that anyone can become an Einstein or a Mozart, but they do understand that even Einstein and Mozart had to put in years of effort to become who they were.” (Dweck, 2007)

- Learn from culture

- “For these reasons, we do not appeal to the games-are-highly-motivating explanation, but we do see a reason that young people play games and get them tangled up with the rest of their lives, and this reason is cultural. The phrase that best helps us explain it comes from one of our participants, Mikey, who in talking about games said, “It’s what we do.” The “we” he was referring to was kids these days, the young people of his generation.” (Stevens, Satwicz, McCarthy, 2008)

- Four-phase model of interest development

- Triggered Situational Interest

- Maintained Situational Interest

- Emerging Individual Interest

- Well Developed Individual Interest

- Learn by teaching – protégé effect

- “We then introduce TAs, which combine properties of agents and avatars. This sets the stage for two studies that demonstrate what we term the protégé effect: students make greater effort to learn for their TAs than they do for themselves. ” (Chase, Chin, Oppezzo, Schwartz, 2009)

- “Given our hypothesis that the protégé effect is due to social motivations, we would expect students in the programming condition to be less inclined to acknowledge ” (Chase, Chin, Oppezzo, Schwartz, 2009)

West – who we learn from

- Technocentric views

- Learn from peers

- “The variety of ways that we saw young people arrange themselves to play games surprised us, especially since most of these ways were interpersonally and emergently organized by the young people themselves. ” (Stevens, Satwicz, McCarthy, 2008)

- “In fact, shared attentional focus on media in real time is a powerful interactional resource not found in most contemporary asynchronous social media, and researchers across a range of disciplines highlight the importance of joint attention for learning and meaning-making (e.g., Barron, 2000, 2003; Brooks & Meltzoff, 2008; Bruner, 1983, 1995; Goodwin, 2000; Meltzoff & Brooks, 2007; Stevens & Hall, 1998; Tomasello, 1999, 2003).” (Takeuchi & Stevens, 2011)

- “Stevens, Satwicz, and McCarthy’s (2008) naturalistic studies of siblings and friends playing video games together at home examined the spontaneous instances of teaching and learning that players set up among themselves during gaming sessions, as well as how their in-room interactions connect with what’s going on inside the game and in their lives outside the home (e.g., school).” (Takeuchi & Stevens, 2011)

- Learn from teachers whose roles are to provide: (Hidi & Renninger, 2006)

- Positive feelings

- Generate curiosity

- Provide opportunities

- Guide on research

- Parents (coviewing)

- “To get families to use a new platform with any regularity, it should easily slot into existing routines, parent work schedules, and classroom practices. There are, after all, only so many hours in the day to accommodate new practices.” (Takeuchi & Stevens, 2011)

- “What children learn and do with media depends a lot on the content of the media, but they depend perhaps as much on the context in which they are used or viewed, and with whom they are used or viewed.” (Takeuchi & Stevens, 2011)

- Society

Pedagogical Compass Planning

Act 1 – Why should you read this paper?

Want to become a learning tool designer? Care about learners? Care about teachers? Care about reducing the digital literacy gap?

Who are you? Teacher? Policy maker? School leader? Designer? Engineer? Developer?

The compass:

– North – what we teach

– South – how we teach

– East – how we learn

– West – who we learn from

Act 2 – Evidence

How is LX design similar to and different from:

UX Designer – consider:

– User

– Usability

– Task at hand

Game Designer

– Learning the game – onboarding instructions

– Engagement – motivation, interest, reward systems, core loop

– Game mechanics

Learning Experience Designer

– Learning objectives

– Differentiation

– Cognitive developmental stages

– Cultural context

– Joint media engagement and co-viewing

– Learning from peers – protégé effect, learn by teaching

Act 3 – Conclusion

LX is probably the most complex type of design there is. Designers have to consider:

– The learner

– The teacher

– The environment

– The peers

– The cultural context

– Assessment

– Learning objectives

– Policy

– Costs

– Implementation

– Scalability

Read the Terms and Conditions

Qualitative Research – Final Reflection

Assignment

Individual Process Paper Requirements

This paper is a final reflection on the process of doing qualitative research. In this paper you should:

- Describe your growth as a qualitative researcher over the past 10 weeks using concrete details and examples to demonstrate areas of growth as well as areas you are still mastering

- Reveal how you are pushing yourself toward new understandings, especially concerning the complexity of the research process

- Connect your experience to class readings and class discussions. Show us some key topic areas you are grappling with… Be sure to use proper APA format

You may want to revisit past RDRs and show how your thinking has progressed. You may want to reflect on topics such as contextual interpretation, subjectivity, ethics, the analysis process, validity, and rigor.

Process papers should be between 4 and 8 double-spaced pages, not to exceed 8 pages.

Group mini-products will be evaluated separately from individual process papers. We will average the group grade on the mini-product with the individual grade on the process paper.

Response

Abstract

This paper reviews, in the form of a qualitative research paper, the learning process I have gone through this quarter in this class. I propose to describe my journey from someone who had barely ever thought about research, let alone qualitative research, to someone who is now able to appreciate the power of this method for analyzing the world around us. Acknowledging my own bias and drawing on my metacognition, I will describe the salient concepts I acquired through the readings, class activities, and assignments.

The research question I want to answer is: “How does Lucas understand the qualitative research process?”

Introduction

As an engineering undergraduate, I saw research as belonging to the realm of doctoral students and the confines of library microfilm. In my professional life, I was always involved in project management and the implementation of software systems. The work was always hands-on and practical, with little need to conduct or consume research.

Coming into the LDT program, I had to choose between qualitative and quantitative research methods. My reasoning was that I already had some statistical background from Industrial Engineering and virtually no contact with qualitative research. I have not regretted this decision and feel that the course has provided me with valuable skills for observing the world and for consuming and producing qualitative research. It has given me a whole new set of lenses to critique my own design and thought process.

Hopefully this paper will illustrate the main takeaways from the course and provide evidence of my learning process and methods. By no means do I intend to claim that this qualifies as true qualitative research, as the process of data collection and analysis was not initiated as such. It was an afterthought, which induces a top-down approach to finding meaning: I came in knowing what I wanted to find in the data, and I found it. My personal bias is also exacerbated by the fact that I am a full participant-observer (Taylor & Bogdan, 1998). I tried to be as objective as possible and hopefully attended to at least some of the “Criteria for a Good Ethnography” (Spindler & Spindler, 1987, pp. 18-21):

- Observations are contextualized: I attempted to describe my individual process in this paper, but left out a description of the class itself, since the intended audience of this paper was part of that context.

- Hypotheses emerge in situ: the learning process and this paper show that I came in with no prior knowledge of the subject and came out with what feels like a solid basis for future work.

- Observation is prolonged and repetitive: is a quarter long enough? Was I really observing my own actions repeatedly? I could argue either way. On one hand, I was not consciously observing myself for the purpose of this research paper, so in that sense the observations were never made. On the other hand, my blog, assignments, and memory provide sufficient data for this analysis.

- Native view of reality is attended to: well, I don’t think I can go more native than being the native myself.

- Elicit sociocultural knowledge in a systematic way: documenting every interaction with the course in my blog could be considered a systematic approach to eliciting my sociocultural knowledge, even though there is no record of sociocultural factors that might have affected my learning.

- Data collection must occur in situ: since I am collecting data from myself, all of the data was collected by me, for me, and within myself.

- Cultural variations are a natural human condition: throughout the process, I found no sign that my cultural background affected my learning. Even though I am from Brazil, my education has been entirely within the American and British systems, allowing me to feel ‘at home’ in this context and with the readings presented.

- Make what is implicit and tacit to informants explicit: hopefully I am able to lay out implicit behaviors and communication patterns in this paper by detailing my thought process behind each claim.

- Interview questions must promote emic cultural knowledge in its most natural form: I used the questions presented in the description of this assignment as a guide during my mental self-interview. I feel they were sufficient to elicit what I have learned.

- Data collection devices: I used pencil, paper, camera, and the blog as devices to collect my data.

Surprisingly, according to the analysis above, this paper could very well qualify as a qualitative research paper. As discussed in the last class of this course, there are several examples of alternative and artistic research such as poems, performances, novels, and documentaries. ‘The field allows it all’ (notes from week 10 class, 2015). All in all, I felt that this was a valid approach to structuring and presenting the data collected, even though the data collection itself was originally intended not for the purpose of this paper but for the purpose of learning.

Methodology

Data Collection

The structure of the course involved a series of readings, mini-lectures, in-class group discussions, individual papers, and practice of qualitative research. The main topics covered were presented in a logical progression (Appendix A) that scaffolded our understanding towards the existing base knowledge about the field. A series of readings was assigned to support our in-class discussions and to present the current research and thinking about each topic. Written assignments were used to assess the class’ progression through the course. Finally, we conducted a short practice version of qualitative data collection and then proceeded to analyze the data and present a mini-product.

My methodology for absorbing the content was primarily to engage with it: attending all classes and completing all of the assigned reading and writing. While reading and during class, I wrote down on paper the important concepts that jumped out at me. I was testing the notion that by going analogue and physically writing down my thoughts I might get the benefits of embodied learning: “The embodied interaction with things creates mechanisms for reasoning, imagination, “Aha!” insight, and abstraction. Cultural things provide the mediational means to domesticate the embodied imagination.” (Hutchins, 2006, p. 8) These notes were then photographed and put in my blog (lucaslongo.com) for archival purposes.

Data Analysis

For this paper, I wallowed through the data (my notes) and interviewed myself mentally about the entire experience. I produced amended notes that summarized the general knowledge pieces I had absorbed (Appendix B). These notes were initial guides to the subject matter to be included in this paper. They also gave me the opportunity to experience grounded theory (Taylor & Bogdan, 1998), in the sense that writing this paper in the form of a qualitative research paper emerged from the data as the best way to present what I have learned from this course.

As a hyper-metacognitive participant observer in this research process, I will now present the main propositions from the readings and the practice research process.

Findings

The assignment of conducting qualitative research was a crash course in the field. Even though it was highly structured and scaffolded by the educators, the process allowed us to experience the multiple steps, processes, and analyses required. The progression of observing, preparing interview questions, interviewing, making sense of the data, and finally writing it up felt like a genuine simulation of the real thing.

In particular, we had very little time to come up with a context we wanted to observe and to define a research question that interested us. For me that was, and still seems to be, the hardest step of research: what is an interesting question to ask? Is there a problem to be found? How much research has already been done in this area? Do I know enough about the context to be able to extract meaning from it? But I guess this is the key and the seed of all research, alluding to the “1% inspiration, 99% perspiration” mantra that echoes in my head from my undergraduate studies.

The observation and interview processes did not yield many insights for me, with the exception of the interview question preparation phase. I had never structured an interview before and found that the strategies discussed in class and in the readings were extremely helpful for understanding how to better extract information from the informants. Probing and markers were the concepts that stood out most for me as techniques that I will take with me.

The process of analyzing the data and writing up the product showed me how much data was collected from a simple one-hour observation and two hour-long interviews. I was also surprised by how much meaning can be extracted from micro-analyzing what the informant said. Moreover, our final conclusion, or theory, truly emerged from the data. My group was worried that, however much we discussed, we had nothing interesting to say about our context. At the last moment, when arranging the propositions, a general cohesive thought emerged from them, allowing us to generate a conclusion that was both backed by evidence and meaningful to us. I was initially skeptical about exhaustively coding the data, but experiencing it first-hand completely overturned my convictions.

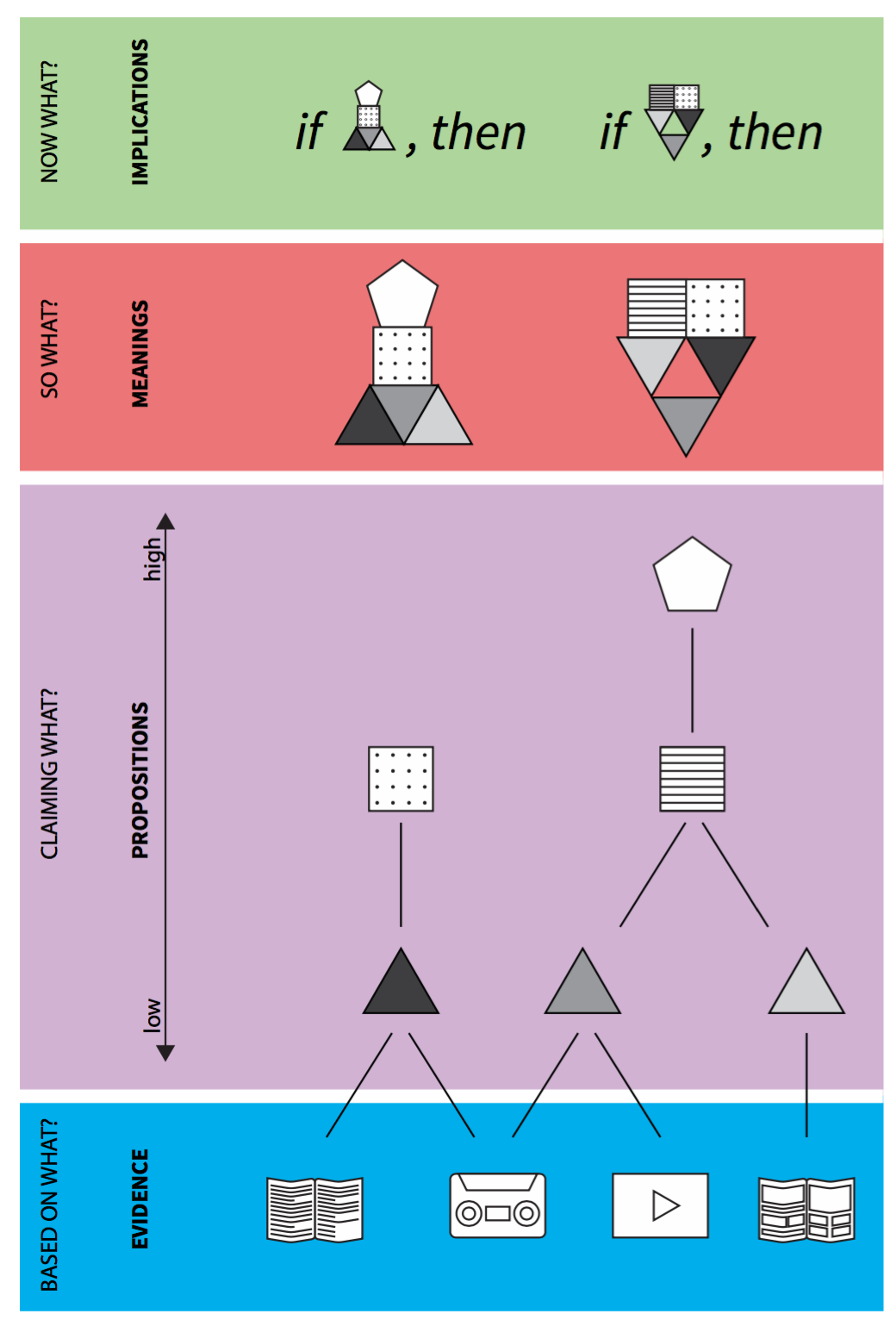

Finally, one framework that I found very helpful in the process was Petr’s diagram (Appendix C), which made the process tangible in my mind. It is a great representation of grounded theory and the qualitative research process. Obviously this diagram was backed up by our readings and fruitful class discussions, without which it would not have had such an impact on me. It especially helped me in thinking about and creating propositions, from the claims that we could back up with evidence all the way to the future implications of our findings: “Turtles all the way down”.

Conclusion

Throughout this paper, I attempted to summarize the learning process I went through and what I learned from this course. It has further consolidated my learning about qualitative research, validated some of my learning methods, and made me aware of all the pedagogical techniques designed into the course itself. Considering I would not have been able to engage in a meaningful conversation with other qualitative researchers prior to this course, I consider this experience a success in learning (and being taught) about the field. Thank you.

Looking ahead, I see room for improvement in my writing skills, especially in citing previous research. This ties into my technique of reading and note taking. I look back at the readings and find no highlights of meaningful phrases. My notes, stored as photographs on the blog, are not searchable. Because of this, I had to go back into the readings again to pull out citations, and I had to decipher my sometimes messy handwriting and make sense of it. With this in mind, I am abandoning handwritten notes in favor of going straight to digital.

I also feel that I have to work on my own master’s project research question and start to plan out my research. I feel that this class gave me significant skills, techniques, and concepts to be able to do so. My entrepreneurial traits incline me to look for a solution with a top-down approach. Now I have grounded theory to reduce my anxiety about getting to ‘The’ solution: I see that I must dive into the context I want to meddle in, observe it exhaustively, understand how the natives navigate it, analyze, and then finally be better equipped to propose, claim, and, who knows, solve a problem.

Appendix A – Course Progression

Concepts

- The Nature of Qualitative Research

- Qualitative Methods — Why and When

- Data Collection: Observation

- Data Collection: Interviewing

- Examining Subjectivity

- Analysis: Making Sense of the Data

- Considering Validity and Rigor

- Ethical Issues

Readings

See Reference section below

Assignments

- RDR #1: The Observation Process

- Qualitative Research Critique

- RDR #2: The Interview Process

- Draft of “mini-products”

- Qualitative Product Paper

- Qualitative Process Paper (this paper)

Appendix B – Amended Notes

Notes I generated in preparation for this paper:

Paper’s Structure:

Act 1

Tell story from Week 1 – Week 10

Novelty of the subject

Act 2

Readings

Observation/Interview

Data analysis

Act 3

Main takeaways

Strengths and weaknesses

Room for improvement

Wallowing through blog notes:

Main takeaways from the class:

- Qualitative research – or research itself.

- The power of writing

- Frameworks and concepts

- Turtles all the way down

- I as a camera

- Grounded theory

- Probing

- Criteria for good ethnography

- Participant observer – cool! Almost like spy work

- Finding a research problem – that’s the hardest part I think

- Interview preparation

- Interview behavior

- Coding – did not believe in it at first

- Propositions – Petr’s diagram

- Validity – just be clear how you wrote it – Geisha

- Learning acquires you – Legitimate Peripheral Participation

Pushing myself

- Improve on writing skills

- Read and read and read more research

- Identify my own research problem

- Tension between researching and creating solutions

- Stand on giants’ shoulders and do something?

- Become a giant for others to be able to do something?

- Interview process I think I’d do well

- Need to practice more in extracting meaning from data, not so instinctive for me – never has been – I take facts at face value – maybe a good quality for less-biased field data collection and data analysis.

Appendix C – Petr’s Research Diagram

References

Note: references are not in alphabetical order to preserve chronological sequence

Reading Assignments:

The Nature of Qualitative Research

Merriam, S. (2002). Introduction to Qualitative Research. In S. Merriam & Associates (Eds.) Qualitative Research in Practice. San Francisco: Jossey-Bass. pp. 3-17.

Miles, M.B., & Huberman, A.M. (1994). Qualitative Data Analysis: An Expanded Sourcebook. (Second Edition). Thousand Oaks, CA: Sage. pp. 1-12.

Spindler, G. & Spindler, L. (1987). Teaching and Learning How to Do the Ethnography of Education. In G. Spindler & L. Spindler (Eds.) Interpretive Ethnography of Education at Home and Abroad. Hillsdale, NJ: Lawrence Erlbaum Associates. pp. 17-22.

Creswell, J. (2003). “A Framework for Design,” Research Design: Qualitative, Quantitative, and Mixed Methods Approaches. (Second Edition). Thousand Oaks, CA: Sage. pp. 3-24.

Becker, H. (1996). The Epistemology of Qualitative Research. In R. Jessor, A. Colby, & R. Shweder (Eds.) Ethnography and Human Development. Chicago: University of Chicago. pp. 53-71.

Geertz, C. (1973). “Thick Description,” The Interpretation of Cultures. New York: Basic Books. pp. 3-30.

Data Collection

Taylor, S., & Bogdan, R. (1998). “Participant Observation, In the Field,” Introduction to Qualitative Research Methods. (Third Edition). New York: John Wiley & Sons. pp. 45-53, 61-71.

Glesne, C., & Peshkin, A. (1992). “Making Words Fly,” Becoming Qualitative Researchers: An Introduction. White Plains, NY: Longman. pp. 63-92.

Weiss, R. (1994). “Interviewing,” Learning from Strangers: The Art and Method of Qualitative Interview Studies. New York: Free Press. pp. 61-83, 107-115.

Subjectivity

Peshkin, A. (1991). “Appendix: In Search of Subjectivity — One’s Own,” The Color of Strangers, The Color of Friends. Chicago: University of Chicago. pp. 285-295.

Peshkin, A. (2000). The Nature of Interpretation in Qualitative Research. Educational Researcher 29(9), pp. 5-9.

Analysis

Taylor, S., & Bogdan, R. (1998). “Working With Data,” Introduction to Qualitative Research Methods. (Third Edition). New York: John Wiley & Sons. pp. 134-160.

Charmaz, K. (1983). “The Grounded Theory Method: An Explication and Interpretation,” In R. Emerson (Ed.) Contemporary Field Research: A Collection of Readings. Boston: Little, Brown. pp. 109-126.

Graue, M. E., & Walsh, D. (1998). Studying Children in Context: Theories, Method, and Ethics. Thousand Oaks: Sage. pp. 158-191 and 201-206.

Page, R., Samson, Y., & Crockett, M. (1998). Reporting Ethnography to Informants. Harvard Educational Review 68(3), pp. 299-332.

Emerson, R., Fretz, R., & Shaw, L. (1995). “Processing Field Notes: Coding and Memoing,” Writing Ethnographic Field Notes. pp. 142-168.

Validity and Rigor

Johnson, R. (1997). Examining the Validity Structure of Qualitative Research. Education, 118, pp. 282-292.

Wolcott, H. (1990). On Seeking – and Rejecting – Validity in Qualitative Research. In E. Eisner & A. Peshkin (Eds.) Qualitative Inquiry in Education: The Continuing Debate. New York: Teachers College. pp. 121-152.

AERA (2006). Standards for Reporting on Empirical Social Science Research in AERA Publications. Educational Researcher 35(6), pp. 33-40.

Anfara, V., Jr., Brown, K., & Mangione, T. (2002). Qualitative Analysis on Stage: Making the Research Process More Public. Educational Researcher 31(7), pp. 28-38.

Ethics

Altork, K. (1998). You Never Know When You Might Want to Be a Redhead in Belize. In K. deMarrais (Ed.) Inside Stories: Qualitative Research Reflections. Mahwah, NJ: Lawrence Erlbaum. pp. 111-125.

Lincoln, Y. (2000). Narrative Authority vs. Perjured Testimony: Courage, Vulnerability and Truth. Qualitative Studies in Education 13(2), pp. 131-138.

Products of Qualitative Research

Cohen, D. (1990). A Revolution in One Classroom: The Case of Mrs. Oublier. Educational Evaluation and Policy Analysis 12(3), pp. 311-329.

McDermott, R. (1993). Acquisition of a Child by a Learning Disability. In S. Chaiklin & J. Lave (Eds.) Understanding Practice. Cambridge: Cambridge University. pp. 269-305.

Rosenbloom, S., & Way, N. (2004). Experiences of Discrimination among African American, Asian American, and Latino Adolescents in an Urban High School. Youth and Society 35(4), pp. 420-451.

Other readings:

Hutchins, E. (2006). Learning to Navigate. In S. Chaiklin & J. Lave (Eds.) Understanding Practice: Perspectives on Activity and Context. New York: Cambridge University Press. pp. 35-63.

Qualitative Research – Final Product

It was great working with James and Ana on this project!

The text version is below, and a nicely formatted version is here: Final Product.pdf

ABSTRACT

In this qualitative study, individuals involved with the Learning Innovation Hub (iHub) were studied to address the research question, “How does iHub facilitate collaboration between educators and entrepreneurs to promote education technology innovation and adoption?” To this end, an observation of the iHub fall 2015 orientation and two interviews with iHub Manager Anita Lin were conducted over the course of three weeks. iHub was found to facilitate collaboration between teachers and startups by treating teachers as key agents in edtech adoption and focusing on teacher needs. However, iHub does not focus on stakeholders in the education ecosystem beyond teachers, which raises concerns about its impact on outcomes for learners.

(Keywords: education technology; edtech innovation; edtech adoption; iHub)

1 INTRODUCTION

Technology has the potential to revolutionize the ways in which we teach and learn. In recent years, a surge of education technologies has put more products into the hands of educators and learners than ever before. Investments in edtech companies have also skyrocketed: in just the first half of 2015, investments totaled more than $2.5 billion, markedly surpassing the $2.4 billion and $600 million invested in 2014 and 2010, respectively (Appendix A) (Adkins, 2015, p. 4). In the 2012-13 academic year, the edtech market totaled $8.38 billion, up from $7.9 billion the previous year (Richards and Stebbins, 2015, p. 7). But how do educators find the education technologies that actually improve learning outcomes in an increasingly crowded space of players and products?

The Learning Innovation Hub (iHub) is a San Jose-based initiative of the Silicon Valley Education Foundation (SVEF) in partnership with NewSchools Venture Fund. Funded by the Bill & Melinda Gates Foundation, iHub aims to provide an avenue “where teachers and entrepreneurs meet.” iHub seeks to develop an “effective method for testing and iterating the education community’s most promising technology tools” (iHub website).

To this end, iHub coordinates pilot programs of edtech products in real school settings. The iHub model involves:

(1) recruiting early-stage edtech startups with in-classroom products to apply to the program,

(2) inviting shortlisted companies to pitch before a panel of judges,

(3) selecting participating startups,

(4) matching startups with a group of about four educators who will deploy products in their classrooms,

(5) jointly orienting educators and entrepreneurs prior to the adoption of the technology in the classroom, and

(6) guiding communication among participants throughout the pilot and feedback phase.

iHub plays a unique role in the edtech ecosystem of Silicon Valley given its position as a not-for-profit program that does not have a financial stake in the startups. As such, we are interested in better understanding iHub’s impact on improving learning outcomes through technology. This study seeks to address the following research question:

How does iHub facilitate collaboration between educators and entrepreneurs to promote education technology innovation and adoption?

2 METHODOLOGY

We followed a prescribed sequence from framing our research question through data collection and analysis. Although we did not conduct a formal literature review on the research topic, members of the research team began the project with prior experience in education technology use and adoption in the classroom. We also conducted an informal observation of the organization prior to the official start of the project: we attended the iHub Pitch Games, during which startups were selected for participation in the fourth cohort. Our subject was selected based on a combination of convenience sampling and our team’s shared interest in the subject.

2.1 Data Collection

We used three primary sources of data collection: online documentation, an observation, and interviews. This triangulation of sources strengthens the reliability of our findings and affords us various insights into the native view, helping us understand iHub’s strategies for facilitating collaboration between educators and entrepreneurs.

We began our official data collection with the iHub website, which lays out the overarching priorities of the iHub program. The website afforded us a preliminary understanding of what the program does, and we continued to consult it throughout the study. With this written information, we were able to compare what the program claims to do with what it actually does, as demonstrated in the observation, and with what its staff say it does, as elucidated by the interviews.

We continued our data collection by conducting a one-hour observation of iHub’s fall orientation (Appendix B). The orientation represents the first in-person point of contact between participating educators and entrepreneurs of the fourth iHub cohort. Coordinated by SVEF staff and spearheaded by Lin, it was an ideal occasion for observation, as it showcased iHub’s role as a facilitator of communication and collaboration between educators and entrepreneurs. Bringing everyone together in the same room, the orientation covered everything from high-level discussions of iHub’s goals down to the administrative details of the initiative. All three researchers kept both raw and amended notes.

In the two weeks following the orientation observation, we conducted two one-hour interviews with Lin. Lin was selected as the ideal interview subject given her accessibility to the research team as gatekeeper, her position as iHub Manager, and her deep understanding of the iHub initiative. A peer-reviewed interview guide was used in both interviews, though interviewers let questions emerge in situ as appropriate. The first interview sought to garner an understanding of the overarching goals and priorities of both SVEF as the parent organization and iHub as the specific program of interest. We explored what the organization does, what its processes look like, and Lin’s role within iHub. While the interview uncovered some areas for deeper discussion, we intentionally kept the inquiries of the first interview at an introductory level and saved probes for the second interview. The second interview, in turn, homed in on a more granular discussion of iHub’s role in the technology adoption process and learning outcomes. Both interviews were voice-recorded, and approximately thirty minutes of each interview were transcribed (Appendix C).

2.2 Data Analysis

Our data analysis process went hand-in-hand with our data collection process, allowing us to adjust our concepts, themes, and categories throughout our research. While we did not create memos per se, individual research descriptions and reflections served to clarify and elucidate some of the themes and insights that emerged throughout the process. Raw and amended notes and interview transcriptions were coded with the following jointly designed list of codes:

- Educator feedback

- Entrepreneur feedback

- Examples of success

- Examples of challenges

- Focus on early-stage startups

- Focus on educators

- Funding partnerships

- Metrics for success

- Neutrality

- Opportunities for improvement

- Organizational design

- Stakeholder alignment

- Tension between decision makers

- The iHub model/framework

From there, we were able to identify themes and patterns in our data. As our research question seeks to understand a phenomenon, a grounded theory approach proved most appropriate. Through this grounded theory method, we derived the propositions described below.

3 FINDINGS

3.1 iHub sees teachers as key agents in edtech adoption.

iHub sees teachers as key agents in edtech adoption. While the organization understands that entrepreneurs, school principals, district managers, and policy makers are all stakeholders in this process, it views teachers as the strongest drivers:

What we have heard from teachers and from districts, is that a lot of times for a school to adopt or…use a product across their school, it’s because a group of teachers have started off saying “I’ve been using this product. I really like this product. Hey, like friend over there! Please use this product with me,” and they are like, “Oh! Yeah we like it,” and kind of builds momentum that way (Interview Transcripts, 2015).

Under this notion that teachers can be strong advocates of edtech products, iHub is looking to adjust its curriculum around teachers as the key agents:

“So we kind of have been thinking about how do we build capacity of teachers to advocate for products they think are working well” (Interview Transcripts, 2015).

iHub also begins each pilot cycle by having teachers define their current needs, before selecting the entrepreneurs who will participate:

“So we send out to our teachers and they’ve kind of, I would say vaguely, have defined the problem of need, and we’d like to kind of like focus them on the future.”

Innovation, then, is driven by what teachers need in the classroom. These teachers are hand-picked based on their proficiency in adopting technology and their likelihood of providing better feedback and needs statements: