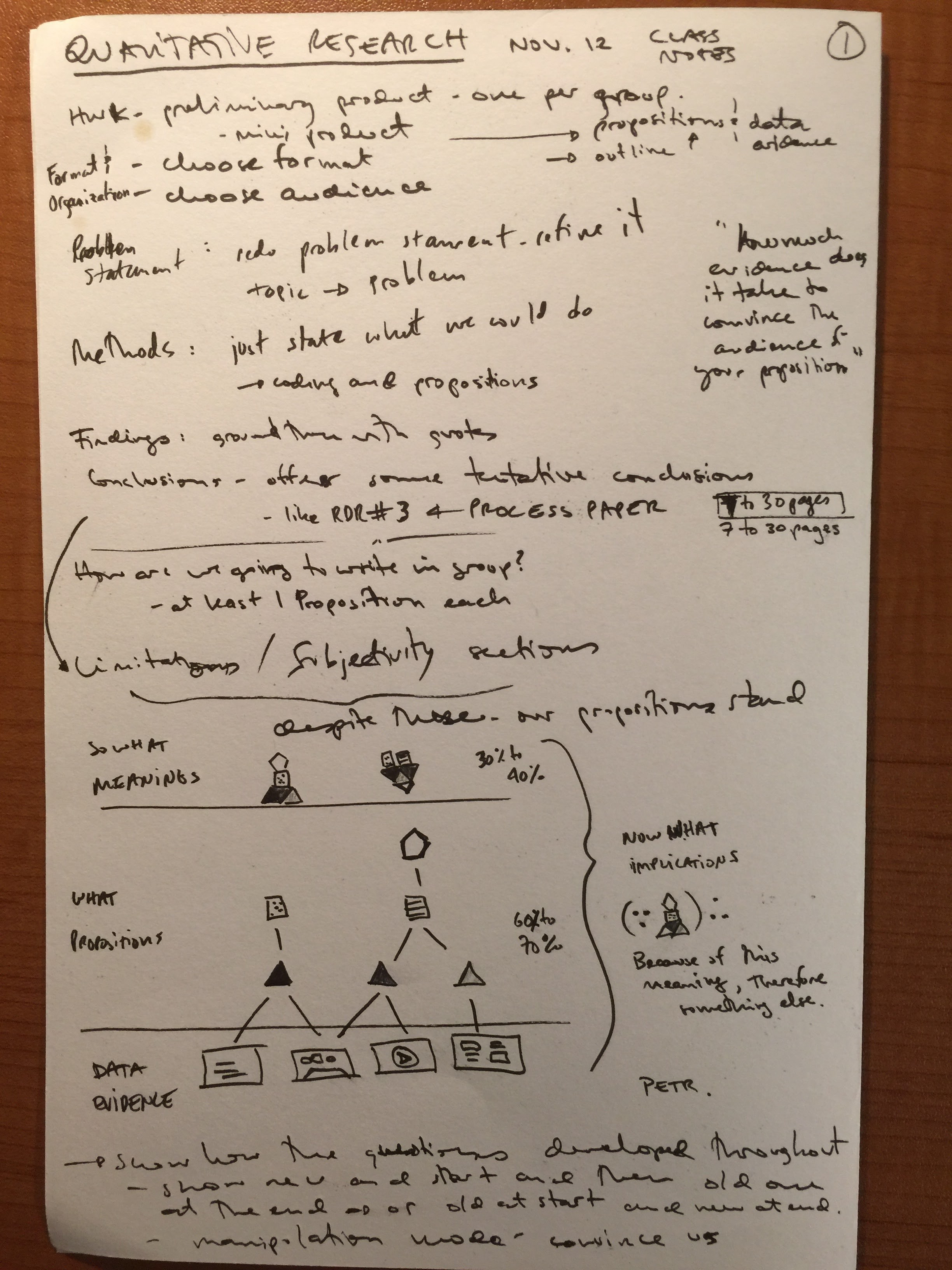

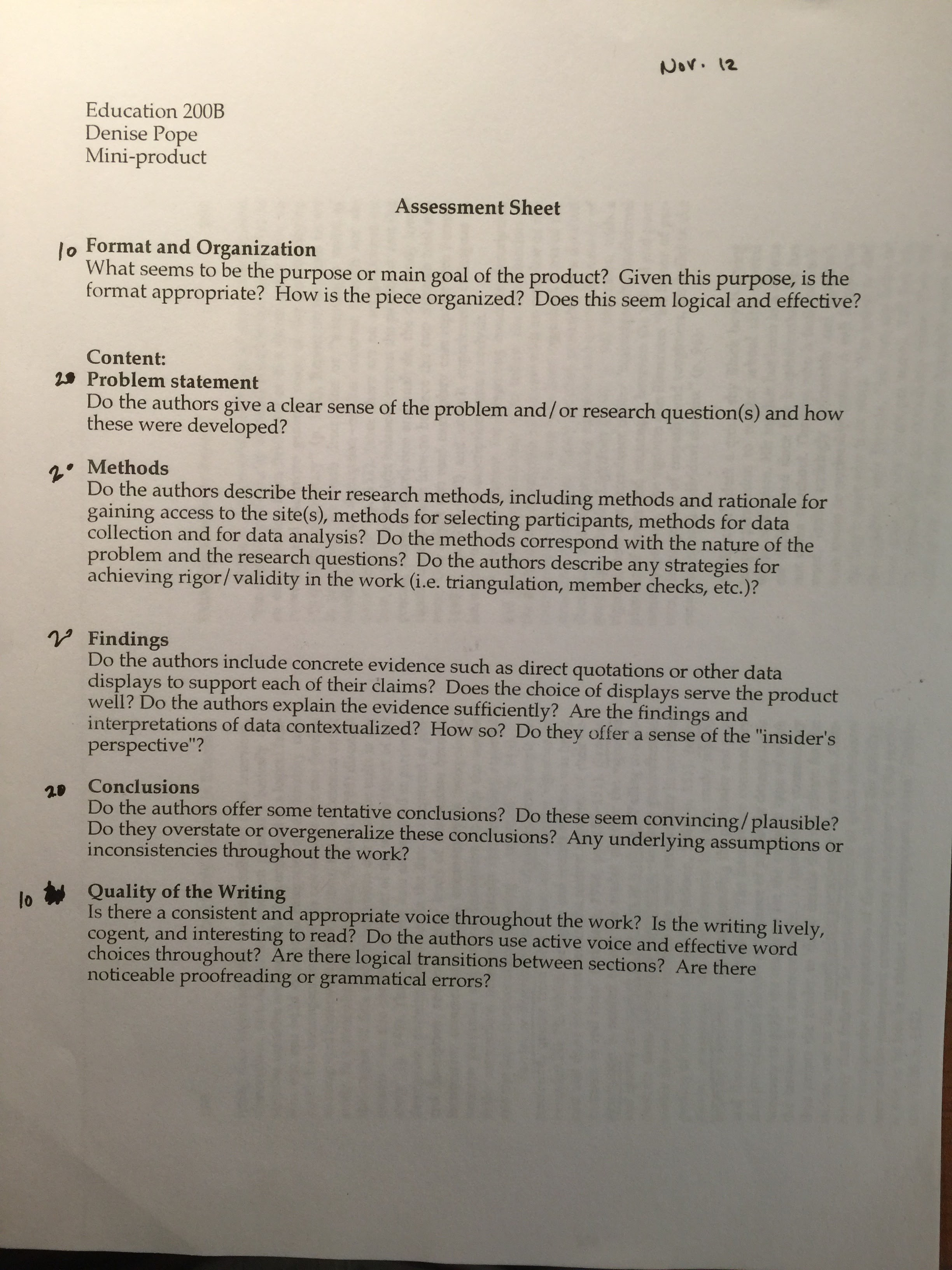

Assignment

Share what you are building / testing.

Response

SAL (Soren, Alex, Lucas) – Achu

As we mentioned last time, we had three new prototypes that we showed Achu for our third and final visit.

Prototype 1: We created a character (Tom, the speaking cat) who will introduce themselves to Achu and explain that they absolutely need Achu’s help to describe what is on the screen below because they cannot see. First, they will ask Achu to describe a picture, then to describe a video. This is meant to be a scaffolded exercise, and, as in previous prototypes, we record Achu and play the recording back to him.

Note on Interaction: the prototype does not move to the next screen unless Achu says something.

Frame 1:

T: Hello, Achu! How are you?

T: I am your friend!

T: My name is Tom.

T: I need your help. Will you help me?

T: I can’t see!

T: Can you tell me what is next on the page?

Frame 2:

T: Achu, what is the word on the screen?

pause

T: What does it say?

pause

T: Can you say it out loud for me?

pause

pause

T: Achu, please help me out, what does it say?

A: “CAR”

T: Thank you for telling me that Achu! You are really helping me!

T: Shall we go on?

Frame 3:

T: Achu, what is the sentence on the screen?

pause

T: What does it say?

pause

T: Achu, please help me out, what does it say?

A: “THE CAR IS FAST”

T: Thank you for telling me that Achu! You are really helping me! This is great!

Frame 4:

T: Achu, there will be a video now playing on the screen.

T: I cannot see the video. Can you tell me what is happening in the video?

play clip 1

T: Achu, please help me out, what happened in the video?

pause

T: I cannot see what happened – can you tell me?

pause

T: Achu, please help me out, what happened in the video?

A: “The four friends met”

T: Thank you for telling me that Achu! You are really helping me! This is great!

play clip 2

T: Achu, I could not see what just happened in the video.

pause

T: Achu, please tell me. What happened?

pause

A: “Cars were racing”

T: Thank you for telling me that Achu! You are really helping me! This is great!

play clip 3

T: Achu, I could not see – what happened at the end of the video?

pause

T: Achu, please tell me. Help me out! What happened?

A: “The blue car won”

T: Thank you for telling me that Achu! You are really helping me! This is great!
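The interaction rule in the script above (Tom keeps prompting and the screen does not advance until Achu says something) could be sketched roughly as follows. This is only an illustrative sketch, not the prototype's actual implementation: the function `run_frame` and its `listen` and `say` parameters are hypothetical names, and speech detection is simulated here with a fixed list of responses.

```python
from typing import Callable, Optional


def run_frame(
    prompts: list[str],
    listen: Callable[[], Optional[str]],
    say: Callable[[str], None],
    praise: str,
) -> str:
    """Cycle through Tom's prompts until a spoken response is heard.

    `listen` is a hypothetical hook that returns what Achu said, or None
    when the pause passes with no speech. The frame never advances on
    silence, matching the "Note on Interaction" rule.
    """
    attempt = 0
    while True:
        say(prompts[attempt % len(prompts)])
        heard = listen()
        if heard:
            say(praise)  # Tom thanks Achu before moving on
            return heard
        attempt += 1


if __name__ == "__main__":
    # Simulated session for Frame 2: two silent pauses, then an answer.
    responses = iter([None, None, "CAR"])
    spoken: list[str] = []
    answer = run_frame(
        prompts=[
            "Achu, what is the word on the screen?",
            "What does it say?",
            "Can you say it out loud for me?",
        ],
        listen=lambda: next(responses),
        say=spoken.append,
        praise="Thank you for telling me that Achu! You are really helping me!",
    )
    print(answer)
```

Keeping the prompts as a list per frame would let each frame (word, sentence, video clip) reuse the same loop with its own wording.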

Prototype 2: Based on Marina’s feedback, we want to build on Achu’s strengths, one of which is his ability to put puzzle pieces together really well. We designed a game that requires Achu to put together sentences, where each word is on a puzzle piece that fits with the others only in certain ways. In the game, we took turns placing puzzle pieces (each carrying one word of the sentence), and we could not move on to the next puzzle piece until we had read all of the words.
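To make the "only fits in certain ways" idea concrete, here is a small sketch of how such pieces might be modelled if the puzzle were moved into the app. Everything in it is an assumption for illustration: the `Piece` class, the connector labels, and the reuse of the sentence from Frame 3 are not taken from the physical prototype.

```python
from dataclasses import dataclass
from itertools import permutations


@dataclass(frozen=True)
class Piece:
    word: str
    left: str   # connector shape on the left edge ("" means a flat edge)
    right: str  # connector shape on the right edge ("" means a flat edge)


def fits(a: Piece, b: Piece) -> bool:
    """Piece b can sit to the right of a only if their connectors match."""
    return a.right != "" and a.right == b.left


def is_complete(row: list[Piece]) -> bool:
    """A sentence is complete when both ends are flat and every joint fits."""
    if not row:
        return False
    ends_flat = row[0].left == "" and row[-1].right == ""
    return ends_flat and all(fits(a, b) for a, b in zip(row, row[1:]))


# Hypothetical piece set: the connector labels force a single word order.
PIECES = [
    Piece("IS", "b", "c"),
    Piece("FAST", "c", ""),
    Piece("THE", "", "a"),
    Piece("CAR", "a", "b"),
]

if __name__ == "__main__":
    for order in permutations(PIECES):
        if is_complete(list(order)):
            print(" ".join(p.word for p in order))
```

Giving each joint its own connector shape means the pieces themselves check the word order, so the game does not need to tell Achu when an arrangement is wrong.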

Prototype 3: We tested an existing cat app with Achu, popular among children, in which you speak to the app, your voice is recorded, and the cat repeats what you said. Beyond the entertainment value, we want to test this out to see if it might work as a warm-up exercise for Achu to generate more words. We also want to test our hypothesis that hearing his own words played back will give Achu a better sense of their value.

Prototype 4: Given Achu’s facility with typing, we wanted to see if Achu would respond well to texting someone in a different room (and thus see if this was an idea we could incorporate in our learning game/app).